Deriving HRTFs and the AES69-2015 File Format

Head-related transfer functions (HRTFs), which I covered in my last column, are essential to creating a realistic immersive (or 3D) audio experience over headphones. HRTFs can be determined in a variety of ways, with varying degrees of accuracy.

The best way, albeit not the most practical, is to have your own ears measured. Research labs around the world have been measuring individuals as they study the effects of different transfer functions on binaural listening.

HRTFs are typically measured in anechoic chambers. If no anechoic chamber is available, semi-anechoic chambers or non-anechoic rooms can be used if the direct sound can be separated from the first reflections and late reverberation by applying proper windows in the time domain.

“The window size determines the frequency resolution of the measured HRTF and thus requires that the first reflection arrives with sufficiently enough delay after the direct sound,” said Markus Noisternig of the Institut de Recherche et Coordination Acoustique/Musique, or “IRCAM,” a Paris-based research institute for music and sound.
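Noisternig's point about window size can be made concrete with a rough sketch. It relies on the general rule that the frequency resolution of a windowed response is approximately the reciprocal of the window duration. The function names, the path lengths and the 343 m/s speed of sound are illustrative assumptions, not part of any lab's actual procedure.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees C


def reflection_free_window(direct_path_m, reflected_path_m, sample_rate_hz):
    """Return (window length in samples, approximate frequency resolution in Hz).

    The window may only span the gap between the arrival of the direct sound
    and the arrival of the first reflection.
    """
    gap_s = (reflected_path_m - direct_path_m) / SPEED_OF_SOUND_M_S
    n_samples = math.floor(gap_s * sample_rate_hz)
    if n_samples < 1:
        raise ValueError("first reflection arrives too soon to window it out")
    return n_samples, sample_rate_hz / n_samples


def window_direct_sound(impulse_response, onset, n_samples, fade_fraction=0.25):
    """Keep n_samples starting at onset and fade the tail with a half-Hann taper."""
    segment = list(impulse_response[onset:onset + n_samples])
    n_fade = max(1, int(len(segment) * fade_fraction))
    start = len(segment) - n_fade
    for i in range(n_fade):
        # Taper falls smoothly to zero at the last sample of the window.
        segment[start + i] *= 0.5 * (1.0 + math.cos(math.pi * (i + 1) / n_fade))
    return segment
```

For example, a first reflection that travels about 1.7 m farther than the direct sound leaves roughly 5 ms of clean signal, which puts the frequency resolution on the order of 200 Hz. That is why a late first reflection matters so much in a non-anechoic room.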

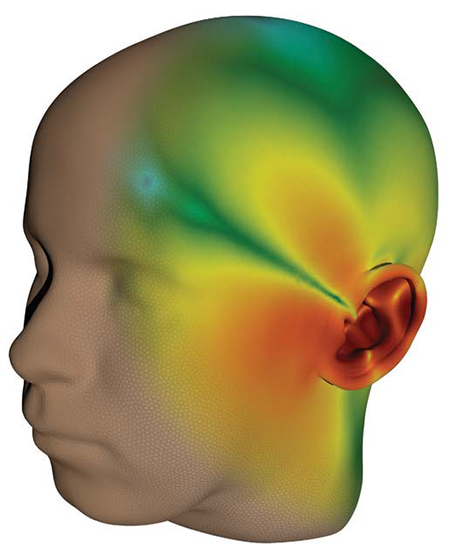

Boundary element rendering of the sound pressure field on the scan of a human head.

Measurements are always taken in the time domain, according to Noisternig. Microphones are typically placed at the entrance of the blocked ear canal, which requires special ear molds. As 3D audio perception requires full spectral content from 20 Hz to 16 kHz, most HRTF measurement systems apply exponential swept sine signals, as they allow for separating non-linearities.
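As an illustration of what an exponential swept sine looks like, here is a minimal sketch using the widely used formulation in which the instantaneous frequency grows exponentially from a start to an end frequency. The function names, the one-second duration and the 48 kHz sample rate in the usage note are assumptions for illustration; the article does not specify how any particular measurement system generates its sweeps.

```python
import math


def exponential_sweep(f_start_hz, f_end_hz, duration_s, sample_rate_hz):
    """Generate an exponential (logarithmic) sine sweep as a list of samples."""
    n_samples = int(duration_s * sample_rate_hz)
    log_ratio = math.log(f_end_hz / f_start_hz)
    # Phase scale chosen so the instantaneous frequency at t = 0 is f_start_hz.
    phase_scale = 2.0 * math.pi * f_start_hz * duration_s / log_ratio
    sweep = []
    for i in range(n_samples):
        t = i / sample_rate_hz
        sweep.append(math.sin(phase_scale * (math.exp(log_ratio * t / duration_s) - 1.0)))
    return sweep


def instantaneous_frequency(f_start_hz, f_end_hz, duration_s, t):
    """Frequency of the sweep at time t, in Hz."""
    return f_start_hz * (f_end_hz / f_start_hz) ** (t / duration_s)
```

Calling `exponential_sweep(20.0, 16000.0, 1.0, 48000)` would cover the 20 Hz to 16 kHz range mentioned above. Because the sweep spends equal time in each octave, low frequencies receive proportionally more energy than they would in a linear sweep.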

If the measured data is represented in the time domain, it is called a head-related impulse response (HRIR), while the Fourier transform of the HRIR produces the frequency-domain HRTF. For signal processing, it is usually easier to perform the convolution (filtering) in the frequency domain, although efficient time-domain filters are sometimes used.
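The relationship between the two representations can be sketched in a few lines. The example below uses a deliberately naive discrete Fourier transform so that it needs nothing beyond the Python standard library; a real implementation would use an FFT. All function names are illustrative, and the short sequences in the usage note are made-up values, not measured HRIRs.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform (O(n^2)), for illustration only."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def convolve_time(signal, hrir):
    """Direct time-domain convolution of a signal with an HRIR."""
    out = [0.0] * (len(signal) + len(hrir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(hrir):
            out[i + j] += s * h
    return out


def convolve_freq(signal, hrir):
    """Same filtering done by multiplying spectra, then transforming back."""
    n = len(signal) + len(hrir) - 1  # zero-pad to avoid circular wrap-around
    signal_spectrum = dft(list(signal) + [0.0] * (n - len(signal)))
    hrtf = dft(list(hrir) + [0.0] * (n - len(hrir)))  # the HRTF at n frequency bins
    product = [a * b for a, b in zip(signal_spectrum, hrtf)]
    return [value.real for value in idft(product)]
```

Passing the same inputs, such as `[1.0, 0.5, -0.25]` and `[0.0, 1.0, 0.5]`, to `convolve_time` and `convolve_freq` should give the same output up to floating-point rounding. With an FFT in place of the naive transform, the frequency-domain route becomes much cheaper for long filters.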

BOUNDARY ELEMENT MODELING

A second approach to obtaining HRTFs is to scan a head with a camera or an infrared device and then process the resulting mesh grid of the head and pinnae using boundary element modeling (BEM) to compute an HRTF for that person.

BEM is a very computationally demanding process. “Computations could take a half a day on a powerful computer or less on a computer cluster,” Noisternig said. “There would be the option to upload the mesh-grid to a server that processes the data on a huge cluster and sends back the rendering results. Anyway, we are very far from doing this on a smartphone.”

But mobile devices can be used for the third method of determining or, in this case, estimating a person’s HRTF. While it is the least accurate of the three approaches, it can provide quicker results that can be very satisfactory.

“The only accessible individualization method on mobile devices is to find the best match with HRTFs from huge databases,” Noisternig said. This method uses a best-fit model that doesn’t involve scanning the head, but rather uses some biometric data that can easily be measured, like head radius or the distance between the ears.

This information is sent to HRTF databases, which propose possible HRTF matches to the listener, along with a test recording that contains localization information. Some trial and error is expected as the listener selects the best match. If an HRTF isn’t closely matched, the auditory immersion and localization won’t seem real: sound may appear to come from inside the head, or it may be impossible to localize precisely.
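One simple way to picture such a best-fit search is a nearest-neighbor ranking over the measured biometric values. The sketch below is only an illustration of that idea: the feature names, the scaling factors and the data layout are hypothetical, and the article does not say how any actual HRTF database computes its matches.

```python
import math


def rank_candidates(listener, database, scales, top_n=3):
    """Rank database subjects by scaled distance to the listener's measurements.

    listener: dict of feature name -> value (hypothetical features).
    database: dict of subject id -> dict with the same feature names.
    scales:   dict of feature name -> typical spread, so that features measured
              in different ranges contribute comparably to the distance.
    """
    def distance(features):
        return math.sqrt(sum(((features[name] - listener[name]) / scales[name]) ** 2
                             for name in listener))

    ranked = sorted(database, key=lambda subject_id: distance(database[subject_id]))
    return ranked[:top_n]
```

The listener would then audition the test recording through each of the returned candidates and keep the one that localizes best, which is the trial-and-error step described above.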

A common file format for all HRTF data, no matter the source, is a great advantage. Early on, there was none.

“The problem was that there was very little interoperability between the formats,” said Piotr Majdak of the Acoustics Research Institute at the Austrian Academy of Sciences in Vienna. “Imagine an algorithm processing HRTFs. That algorithm would need to deal with all the various formats, which would make it heavy and susceptible to bugs.”

Thus began the work that would eventually evolve into the Audio Engineering Society standard AES69-2015 “AES standard for file exchange—Spatial acoustic data file format.”

In 2012, AABBA (Aural Assessment by Means of Binaural Algorithms), a group of scientists working on binaural hearing and audio, “realized quite soon that there needed to be a new format, and we needed to put much effort in its extendability in order to prevent the need for another format quite soon,” Majdak said. “With these aims, we started to develop a format called ‘spatially oriented format for acoustics’ [SOFA].”

STANDARDIZATION THROUGH AES

Around this same time, the BiLi (Binaural Listening) project in France was getting underway. “The BiLi project aims to develop methods and tools to capture HRTFs for scientists, professionals, but also for anyone, [on] PC, mobile, HiFi, professional devices, to render binaural listening with a personalized process,” said Matthieu Parmentier with France TV.

As this project also needed an interchange format to store HRTFs within listening devices, according to Parmentier, standardization through the AES began. “Since SOFA was the most developed format, they decided to support SOFA,” Majdak said. Since the fall of 2012, “SOFA and AES pulled together,” and by early 2015, the standard was published.

Data stored according to AES69 (file extension .sofa) is self-describing, according to Majdak: the file carries many narrative descriptions, yet it is data-compressed (zipped) for efficient network transport and is readable on any platform. “SOFA does not store audio content like WAV or MP3 does,” Majdak said. “SOFA describes the filters only.”

AES69, which is based on SOFA, relies on a well-established numerical container and is open to future development. “SOFA uses so-called conventions, which describe how the data are organized within SOFA,” Majdak said. “The standard defines SOFA as a framework for the conventions, and conventions for description of specific data. With such a concept we can describe the currently available data like HRTFs in conventions, already part of the standard, and also data available in the future, like multichannel measurements from microphone arrays or loudspeaker arrays.” Any further conventions developed can later be standardized as annexes to AES69.

Applications of AES69 are many.

“HRTFs measured for various directions can be stored in SOFA,” Majdak said. “Then, SOFA files containing HRTFs can be used to create virtual loudspeakers for reproduction of spatial audio via headphones. But, as SOFA can describe nearly any spatially oriented data, SOFA is not limited to HRTFs.”
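The virtual loudspeaker idea Majdak describes can be sketched as follows: each loudspeaker feed is filtered with the left-ear and right-ear HRIRs for that loudspeaker's direction, and the results are summed into two headphone channels. This is a minimal illustration with hypothetical function names and a plain dictionary standing in for HRIRs that would, in practice, be read from a SOFA file; it is not taken from any SOFA software.

```python
def convolve(signal, impulse_response):
    """Direct time-domain convolution."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(speaker_feeds, hrirs):
    """Mix loudspeaker feeds down to (left, right) headphone signals.

    speaker_feeds: dict of speaker name -> list of samples.
    hrirs:         dict of speaker name -> (left_hrir, right_hrir) for the
                   direction of that virtual loudspeaker.
    """
    length = max(len(feed) + max(len(hrirs[name][0]), len(hrirs[name][1])) - 1
                 for name, feed in speaker_feeds.items())
    left = [0.0] * length
    right = [0.0] * length
    for name, feed in speaker_feeds.items():
        left_hrir, right_hrir = hrirs[name]
        for i, value in enumerate(convolve(feed, left_hrir)):
            left[i] += value
        for i, value in enumerate(convolve(feed, right_hrir)):
            right[i] += value
    return left, right
```

Each virtual loudspeaker contributes to both ears, which is what lets a stereo or multichannel mix be heard over headphones as if it came from loudspeakers placed around the listener.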

An example of the latter is a project that Noisternig has been involved with. “We are using AES69/SOFA for storing spatiotemporal room impulse responses measured with spherical loudspeaker arrays and spherical microphone arrays,” he said. “With this data we can provide more realistic auralization of concert halls and a more profound analysis of the room acoustics.”

PERSONALIZATION FOR THE END USER

AES69, as a single standard that simplifies data exchange, allows players with smartphones, tablets and Web applications to easily run their binaural processing with different sets of settings in order to learn and better approximate the end user’s HRTFs, according to Parmentier.

From a broadcaster’s point of view, “the personalization goal embraces accessibility services for hard-of-hearing people, and even people in perfect health who are concerned in various situations, for example when listening in transportation, and more specifically, to bring a realistic immersive audio process over headphones,” Parmentier said.

Researchers can use “SOFA repositories for the development and evaluation of algorithms processing HRTFs or for psychoacoustic studies by using tons of HRTFs available as SOFA files,” Majdak said. “Application developers can implement spatial audio algorithms in their applications and use SOFA files for the rendering of virtual loudspeakers. Users of audio software can use SOFA files for the personalization of audio reproduction.”

The group that developed AES69 “also worked with AES members involved in the MPEG-H 3D audio codec to allow an end-device architecture to accept AES69 (SOFA) files within the binaural processor function, in case the user plugs in headphones while playing multichannel content,” Parmentier said. “AES was the perfect place to meet between high-level scientists, software/hardware engineers, codec manufacturers, sound engineers from the field, post studios and broadcasters.”

Mary C. Gruszka is a systems design engineer, project manager, consultant and writer based in the New York metro area. She can be reached via TV Technology.
