Digital audio processors

Signal processing for analog broadcast is a combination of two functions: control of perceived sound (for competitive advantage), and meeting FCC constraints on transmission modulation and occupied spectrum. With analog modulation, any processing applied to the audio has a direct effect on the RF signal, so the baseband and RF are closely intertwined.

With digital transmission, the two realms are essentially decoupled, but new interdependencies have emerged. Surround channels, multiple programs and repurposing all add another layer of complexity.

Although digital processing equipment typically offers analog inputs and outputs, minimizing the number of analog-to-digital and digital-to-analog conversions will improve overall signal quality and reduce the potential for level mismatches. The same applies to other processors in the chain, such as equalizers, presence enhancers and de-essers.

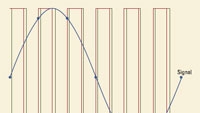

In addition, every A/D and D/A conversion is subject to clock jitter, because all clock signals carry a certain amount of noise. If a signal is sampled or reconstructed with a noisy clock, the sampling instants are modulated in time by a filtered version of that noise. (See Figure 1.) This filtering is a characteristic of the clocking circuits used in the particular converters. The result is a raised noise floor that degrades signal quality.
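
The size of the effect can be estimated with a standard rule of thumb: for a full-scale sine wave of frequency f sampled with random timing error of RMS value σ, the jitter-limited SNR is about -20·log10(2πfσ). The Python sketch below computes that figure and cross-checks it with a brute-force simulation. It assumes white (unfiltered) Gaussian jitter, a simplification of the filtered clock noise described above; the 10 kHz tone, 1 ns of jitter and the 48 kHz sample rate are illustrative values only.

```python
import numpy as np

def jitter_snr_db(freq_hz, jitter_rms_s):
    """Rule-of-thumb SNR limit (dB) for a full-scale sine sampled with random jitter."""
    return -20 * np.log10(2 * np.pi * freq_hz * jitter_rms_s)

def simulate_jitter_snr_db(freq_hz, jitter_rms_s, fs=48_000, n=48_000, seed=0):
    """Sample a sine at ideal and at jittered instants and measure the resulting SNR."""
    rng = np.random.default_rng(seed)
    t_ideal = np.arange(n) / fs
    # Assumption: white Gaussian timing error, i.e. the clock-noise filtering is ignored
    t_jittered = t_ideal + rng.normal(0.0, jitter_rms_s, n)
    clean = np.sin(2 * np.pi * freq_hz * t_ideal)
    jittered = np.sin(2 * np.pi * freq_hz * t_jittered)
    error = jittered - clean  # noise caused purely by the timing error
    return 10 * np.log10(np.mean(clean ** 2) / np.mean(error ** 2))

print(f"theory:     {jitter_snr_db(10e3, 1e-9):.1f} dB")
print(f"simulation: {simulate_jitter_snr_db(10e3, 1e-9):.1f} dB")
```

Under these assumptions, 1 ns of jitter on a 10 kHz tone limits the SNR to roughly 84 dB, and halving the jitter buys about 6 dB.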

Dynamics control

Audio level compression (as distinct from bit rate compression) has been a contentious topic since the days of Top 40 radio. Aggressive compression was originally intended as a competitive tool: it provided a louder sound, with commensurately higher modulation density and therefore a higher perceived signal-to-noise ratio (SNR), at least for AM radio. With the move to FM, overmodulation did not cause distortion, but it did risk violating FCC regulations.

With TV sound, broadcasters tended to relax the compression, because the video and program content were the primary competitive drivers. Nonetheless, intentionally disparate processing continues to be controversial when it comes to commercial loudness. A sample compression characteristic is shown in Figure 2.
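
A static compression characteristic such as the one in Figure 2 maps input level to output level. The sketch below assumes a simple hard-knee curve with a -20 dB threshold and a 4:1 ratio; both values are hypothetical, not read from the figure, and a real processor adds attack and release timing on top of this steady-state curve.

```python
def compressor_output_db(input_db, threshold_db=-20.0, ratio=4.0):
    """Static (steady-state) compression curve: output level vs. input level, in dB."""
    if input_db <= threshold_db:
        return input_db  # below threshold: 1:1, no gain change
    # above threshold: every `ratio` dB of extra input yields only 1 dB more output
    return threshold_db + (input_db - threshold_db) / ratio

def gain_reduction_db(input_db, threshold_db=-20.0, ratio=4.0):
    """How much the compressor turns the signal down at a given input level."""
    return input_db - compressor_output_db(input_db, threshold_db, ratio)

for level in (-40, -20, -10, 0):
    print(f"in {level:>4} dB -> out {compressor_output_db(level):6.1f} dB")
```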

Early work at CBS Labs and elsewhere established various metrics for measuring program loudness. These algorithms have since been incorporated into several broadcast processors, giving operators better control over program-to-program level consistency.

However, the ability to control a signal does not make setup straightforward. Access to too many parameters, such as target level, minimum and maximum gain, and rate of gain change, can give an operator fits when installing a unit, especially if the unit is controlled solely through software. To save yourself the trouble, look for presets for these functions, and see whether they pass your own (or your golden listener's) quality judgment.
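
The setup parameters named above (target level, minimum and maximum gain, and rate of gain change) interact in a fairly simple way, which the following sketch of a block-based automatic gain control makes concrete. It is an illustration under stated assumptions: level is measured as plain RMS in dBFS over 100 ms blocks, not with any of the loudness algorithms referred to earlier, and the function name and all default values are hypothetical.

```python
import numpy as np

def block_agc(x, fs, target_dbfs=-24.0, min_gain_db=-12.0, max_gain_db=12.0,
              max_rate_db_per_s=3.0, block_s=0.1):
    """Hypothetical block-based AGC; returns (output signal, gain in dB per block)."""
    block = int(round(fs * block_s))
    max_step_db = max_rate_db_per_s * block_s  # largest gain change allowed per block
    gain_db = 0.0
    y = np.empty_like(x, dtype=float)
    gains = []
    for start in range(0, len(x), block):
        seg = x[start:start + block]
        # Level is plain RMS in dBFS (a sine of amplitude 1.0 reads about -3 dBFS)
        level_dbfs = 20 * np.log10(np.sqrt(np.mean(seg ** 2)) + 1e-12)
        # Gain needed to hit the target, limited to the allowed gain range
        wanted_db = np.clip(target_dbfs - level_dbfs, min_gain_db, max_gain_db)
        # Move toward the wanted gain no faster than the rate limit allows
        gain_db += np.clip(wanted_db - gain_db, -max_step_db, max_step_db)
        y[start:start + block] = seg * 10 ** (gain_db / 20)
        gains.append(gain_db)
    return y, np.array(gains)
```

For example, a sine of amplitude 0.01 (about -43 dBFS RMS) asks for roughly 19 dB of gain, is capped at the 12 dB maximum, and takes about 4 seconds to get there because of the 3 dB/s rate limit.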

Peak limiting has also been used in analog broadcasting for both regulatory and competitive purposes. With digital processing, it's important to ensure that analog input and output levels, along with any digital level changes, keep the signal within its normal operating range. Too low a signal will degrade the SNR, while too high a signal risks clipping.
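
Keeping the signal within its operating range across several stages is a matter of bookkeeping in decibels. The hypothetical checker below follows a program's peak level through a chain of gain changes and flags any stage that would clip (above 0 dBFS) or sit too close to an assumed -90 dBFS noise floor. It assumes fixed-point stages, where an intermediate overload clips even if a later stage turns the level back down; the stage names and thresholds are illustrative.

```python
def check_gain_chain(start_peak_dbfs, stages, ceiling_dbfs=0.0,
                     noise_floor_dbfs=-90.0, min_margin_db=60.0):
    """Follow a program's peak level through (name, gain_db) stages and flag problems.

    Assumes fixed-point stages: any intermediate peak above the ceiling clips.
    The noise floor and minimum margin are illustrative, not standard values.
    """
    report = []
    peak = start_peak_dbfs
    for name, gain_db in stages:
        peak += gain_db
        if peak > ceiling_dbfs:
            status = "clips"
        elif peak - noise_floor_dbfs < min_margin_db:
            status = "too low"  # peak too close to the noise floor: poor SNR
        else:
            status = "ok"
        report.append((name, peak, status))
    return report

for name, peak, status in check_gain_chain(-6.0, [("input trim", 6.0),
                                                   ("EQ boost", 4.0),
                                                   ("output level", -12.0)]):
    print(f"{name:<13} peak {peak:6.1f} dBFS  {status}")
```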

Surround sound

Surround sound is a topic unto itself, but it is worth distinguishing between true surround, artificial surround and spatial enhancement.

True 5.1 multichannel sound consists of five full-range channels plus a low-frequency effects (LFE) channel, all fully discrete: a signal sent on one channel has virtually no crosstalk into the other channels.

Artificial surround creates a multichannel experience by matrixing surround information onto a stereo pair, then decoding it at the receiver to create the additional surround channels. This type of surround has limited separation between the main and surround channels, sometimes as low as 3 dB.
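
The limited separation of matrix surround follows directly from the arithmetic. The sketch below uses a simplified passive 4:2:4 matrix: center and surround are folded into the stereo pair at -3 dB (a factor of 1/√2), and a passive decoder recovers them from the sum and difference of the pair. Real systems also phase-shift the surround signal and use active steering to improve separation; both are omitted here, so treat this as a worst-case illustration rather than a model of any particular product.

```python
import numpy as np

K = 1 / np.sqrt(2)  # -3 dB fold-down factor

def matrix_encode(L, R, C, S):
    """Fold four channels into a stereo pair (Lt, Rt); simplified, no phase shift on S."""
    Lt = L + K * C + K * S
    Rt = R + K * C - K * S
    return Lt, Rt

def matrix_decode(Lt, Rt):
    """Passive decode: left/right pass through, center from the sum, surround from the difference."""
    return Lt, Rt, K * (Lt + Rt), K * (Lt - Rt)

def separation_db(wanted, leak):
    """How far (dB) a leaked signal sits below the wanted one."""
    return 20 * np.log10(np.abs(wanted) / np.abs(leak))

L, R, C, S = matrix_decode(*matrix_encode(1.0, 0.0, 0.0, 0.0))  # left-only input
print(f"L->C separation: {separation_db(L, C):.2f} dB")
print(f"L->S separation: {separation_db(L, S):.2f} dB")
```

A signal on the left channel alone reappears in the decoded center and surround outputs only about 3 dB down, matching the worst-case figure quoted above.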

Spatial enhancement creates an ambiance effect by enhancing or delaying the difference component of the left and right signals.
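
One common way to manipulate the difference component is mid/side processing. The sketch below, a hypothetical spatial_enhance function, splits left and right into sum (mid) and difference (side) signals, scales the side signal by a width factor and optionally delays it, then recombines them. A width of 1 with no delay returns the input unchanged, and mono material (identical left and right) is unaffected because its difference component is zero.

```python
import numpy as np

def spatial_enhance(left, right, width=1.5, delay_samples=0):
    """Hypothetical mid/side widener: scale (and optionally delay) the L-R component."""
    mid = 0.5 * (left + right)   # sum component, passed through untouched
    side = 0.5 * (left - right)  # difference component
    if delay_samples > 0:
        # shift the side signal later in time, keeping the original length
        side = np.concatenate([np.zeros(delay_samples), side])[:len(side)]
    side = width * side
    return mid + side, mid - side
```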

Bit rate compression

Before digital transmission, all audio signals undergo bit rate compression. Although the amount of compression is left to the operator, the small bandwidth savings gained at the lowest bit rates are not worth the corresponding loss in quality.
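
The arithmetic behind the bandwidth argument is easy to check. The sketch below assumes 48 kHz, 16-bit stereo PCM, two illustrative coded rates (192 and 128 kb/s) and the roughly 19.39 Mb/s payload of an ATSC channel; none of these figures comes from the text above.

```python
def pcm_rate_bps(channels, fs_hz=48_000, bits=16):
    """Bit rate of uncompressed linear PCM."""
    return channels * fs_hz * bits

def savings_fraction(old_bps, new_bps, channel_bps=19.39e6):
    """Share of the (assumed) channel payload freed by lowering the audio bit rate."""
    return (old_bps - new_bps) / channel_bps

pcm = pcm_rate_bps(2)
print(f"stereo PCM: {pcm / 1e6:.3f} Mb/s, {pcm / 192_000:.0f}:1 at 192 kb/s")
print(f"192 -> 128 kb/s frees {savings_fraction(192_000, 128_000):.2%} of the channel")
```

Under these assumptions, 192 kb/s is already an 8:1 reduction from PCM, and dropping to 128 kb/s frees only about a third of one percent of the channel.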

In addition, an increasing amount of material is already archived or sourced in a compressed format. While it may be tempting to avoid complexity by decoding these signals, even all the way to analog, each additional conversion degrades the signal. This is especially true for cascaded compression, where coding artifacts from each generation accumulate and become exaggerated.

For this reason, manufacturers now make equipment that can intelligently transcode signals, using knowledge of preceding compression operations.

Moving video signals around a plant often involves SDI interfaces, and these can carry audio as well; SDI audio embedders and de-embedders perform this function. Usually, two stereo pairs are carried this way as AES3 linear (uncompressed) digital audio. Compressed audio can also be carried by encapsulating Dolby E or AC-3 signals within the AES3 stream.
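
It helps to know how much room an AES3 stream offers. Each AES3 frame carries two 32-bit subframes, of which up to 24 bits per subframe carry audio sample data, so at 48 kHz the line rate is 3.072 Mb/s. A compressed bitstream carried inside AES3 occupies some of those audio bits; the sketch below assumes the compressed data uses 16 or 20 bits of each subframe, ignores burst preamble and padding overhead, and treats the 448 kb/s stream in the usage lines as a hypothetical AC-3 rate.

```python
def aes3_rates(fs_hz=48_000, payload_bits=24):
    """Return (line rate, payload rate) in bits/s for one AES3 stream."""
    line_rate = fs_hz * 2 * 32               # 2 subframes x 32 bits per sample period
    payload_rate = fs_hz * 2 * payload_bits  # bits per subframe actually carrying data
    return line_rate, payload_rate

def fits_in_aes3(stream_bps, payload_bits=16, fs_hz=48_000):
    """True if a compressed stream's average rate fits in the assumed payload."""
    return stream_bps <= aes3_rates(fs_hz, payload_bits)[1]

line, pcm = aes3_rates()
print(f"line rate {line / 1e6:.3f} Mb/s, 24-bit payload {pcm / 1e6:.3f} Mb/s")
print("448 kb/s stream fits in a 16-bit payload:", fits_in_aes3(448_000, payload_bits=16))
```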

Time compression and expansion

There are various ways to change the timing of audio programs. To maintain lip sync, of course, any change in a program's duration must be applied to the associated video as well.
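
The lip-sync requirement can be quantified: if the audio is retimed and the video is not, the offset between them grows steadily through the program. The sketch below uses exact fractions and a hypothetical 30-minute program squeezed into 29.5 minutes; the function names are illustrative.

```python
from fractions import Fraction

def speed_factor(original_s, target_s):
    """Playback speed needed to fit original_s of material into target_s (>1 = faster)."""
    return Fraction(original_s) / Fraction(target_s)

def av_offset_s(program_s, audio_speed, video_speed):
    """End-of-program offset between audio and video, in seconds.

    Positive means the audio finishes (leads) before the video.
    """
    program_s = Fraction(program_s)
    return program_s / Fraction(video_speed) - program_s / Fraction(audio_speed)

f = speed_factor(1800, 1770)  # 30:00 squeezed into 29:30
print(f, float(f))
print("audio-only retime offset:", av_offset_s(1800, f, 1), "s")
print("audio and video together:", av_offset_s(1800, f, f), "s")
```

Retiming only the audio leaves it 30 seconds ahead by the end of the program; applying the same factor to both keeps the offset at zero.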

Time base adjustment is sometimes performed by a processor that uses a gate function to detect and modify the gaps between words. Such processing works only on speech, and only on material with a high SNR, since the gate must be able to tell pauses apart from background noise.
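
A gate-based pause shortener can be sketched in a few lines. The hypothetical shorten_pauses function below measures RMS level in 10 ms frames, treats any frame below a threshold as part of a pause, and keeps at most 150 ms of each pause. It also shows why this kind of processing depends on a clean signal: if background noise sits above the threshold, the gate never closes and nothing is removed. The threshold and durations are illustrative assumptions, and the plain frame dropping used here can cause clicks that a real product would hide with crossfades.

```python
import numpy as np

def shorten_pauses(x, fs, threshold_dbfs=-45.0, frame_s=0.01, max_pause_s=0.15):
    """Hypothetical gate-based pause shortener: drop quiet frames beyond max_pause_s."""
    frame = int(round(fs * frame_s))
    n_frames = len(x) // frame
    frames = x[:n_frames * frame].reshape(n_frames, frame)
    rms = np.sqrt(np.mean(frames ** 2, axis=1)) + 1e-12
    quiet = 20 * np.log10(rms) < threshold_dbfs  # True where the gate is closed
    keep_max = int(round(max_pause_s / frame_s))  # quiet frames kept per pause
    kept, run = [], 0
    for f, is_quiet in zip(frames, quiet):
        run = run + 1 if is_quiet else 0  # length of the current pause, in frames
        if run <= keep_max:
            kept.append(f)
    # append any leftover samples that did not fill a whole frame
    return np.concatenate(kept + [x[n_frames * frame:]])
```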

More sophisticated processors use a form of Fourier transform to change the duration without affecting the pitch of the audio. Because the transform operates on finite-length blocks, the splicing rate and overlap between blocks must be optimized for the signal. Ordinarily the user will not have access to these or other transform parameters, but check your product to see whether you do.
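
A phase vocoder is one well-known way to change duration without changing pitch using a short-time Fourier transform (STFT). The sketch below is an illustration, not the method of any particular product: the FFT length and hop size roughly correspond to the block length and overlap mentioned above, and the 1024/256 defaults are assumptions. Magnitudes are read from the input at a pace set by rate, while each bin's phase is advanced by its measured instantaneous frequency so that tones keep their pitch.

```python
import numpy as np

def stft(x, n_fft, hop):
    """Hann-windowed short-time Fourier transform, one row per frame."""
    win = np.hanning(n_fft)
    return np.array([np.fft.rfft(win * x[i:i + n_fft])
                     for i in range(0, len(x) - n_fft + 1, hop)])

def istft(X, n_fft, hop):
    """Weighted overlap-add resynthesis, normalized by the summed squared window."""
    win = np.hanning(n_fft)
    n = (len(X) - 1) * hop + n_fft
    y, wsum = np.zeros(n), np.zeros(n)
    for k, spec in enumerate(X):
        y[k * hop:k * hop + n_fft] += win * np.fft.irfft(spec, n_fft)
        wsum[k * hop:k * hop + n_fft] += win ** 2
    nz = wsum > 1e-8
    y[nz] /= wsum[nz]
    return y

def time_stretch(x, rate, n_fft=1024, hop=256):
    """Phase-vocoder time stretch: rate > 1 shortens the audio, pitch unchanged."""
    X = stft(x, n_fft, hop)
    # Expected phase advance per hop for the center frequency of each bin
    omega = 2 * np.pi * hop * np.arange(n_fft // 2 + 1) / n_fft
    phase = np.angle(X[0])
    out = []
    for t in np.arange(0, len(X) - 1, rate):
        i, frac = int(t), t - int(t)
        mag = (1 - frac) * np.abs(X[i]) + frac * np.abs(X[i + 1])  # interpolated magnitude
        out.append(mag * np.exp(1j * phase))
        # Deviation from the expected advance, wrapped to [-pi, pi]
        dphi = np.angle(X[i + 1]) - np.angle(X[i]) - omega
        dphi -= 2 * np.pi * np.round(dphi / (2 * np.pi))
        phase = phase + omega + dphi  # advance by the measured instantaneous frequency
    return istft(np.array(out), n_fft, hop)
```

With rate=1.25, a one-second 440 Hz tone at 48 kHz comes out about 0.8 s long and still at 440 Hz.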

As always, some experimentation with different equipment setups may be needed to get the results you want, and the right settings will depend on the program material. Unless you're comfortable with these changes, it's best to leave the processor at a default or preset condition. However, plan to monitor your audio continually in a representative viewer/listener environment. You'd be surprised what sneaks through for long periods without operator adjustment.

Aldo Cugnini is a consultant in the digital television industry.

Send questions and comments to: aldo.cugnini@penton.com
