Discovering the Importance of Storage Analytics

Since storage has become a key component in nearly all media systems and workflows, one might wonder if “storage analytics”—the detailed information about individual and overall storage elements—are important to operations and management of the entire media production ecosystem.

Using and understanding storage analytics is becoming more important as users configure virtual environments (such as VMware or Hyper-V) alongside conventional structured storage systems. When virtualized computer environments take shape across the enterprise, shared storage can also take on a much broader dimension. While multiple operating systems can run on a single physical computer, storing and managing the data generated in those environments becomes more difficult. Complex multi-element environments are never as easy to understand or manage as a single OS, computer or server platform addressing a small NAS or group of drives.

Virtual machine environments and virtualization fabrics are subjects which have much depth, vary by manufacturer, and—for now—are beyond the scope of this month’s article. However, whether your system is VM-based or a mid-to-modest scale NAS or SAN, the value proposition in having analytical tool sets available that can manage the overall storage system is becoming a subject of interest to many IT managers and production workflow administrators.

If you’re fortunate enough to have a greenfield system with a full complement of new “raw” storage, then the options to configure and deploy this untapped storage pool are many. Yet, if your storage pool is a collection of many different sets of storage accumulated over various time periods (as in many facilities), the efficient management of those storage “islands” takes on another dimension altogether. Often such systems comprise DAS, NAS or SAN elements and have little consistency in terms of volume sizes, I/O capabilities and distribution.

PERFECT CANDIDATE

The latter (likely more typical) configuration is a perfect candidate for both storage virtualization and for a toolset capable of looking at the demands and stresses put onto the storage system. In more modern terms, the process of using these toolsets is known as “storage analytics.”

With the popularity of cloud technologies continuing to grow, a quick Google search for storage analytics will yield multiple sets of products offered by major cloud providers and by enterprise class storage vendors. Most products or services are prefaced by the cloud provider’s name (e.g., Azure) followed by the marketing term for the analytical toolset.

Some storage vendors will wrap the name in VMware, Big Data, all-flash, scalable or some other storage marketing buzzword. The plethora of terms sometimes breeds confusion as to what you’re getting and how well it really does its job.

So, what’s under the hood? Why are storage analytics important? And what do they do or provide? How do they benefit the user?

STORAGE SYSTEM INSIGHT

Storage analytics are intended to provide insight into the physical and/or the virtual storage environment. The tools should allow the user/administrator to optimize the storage system regardless of its complexity or distribution. There is also a business-sense component, defined as “improving business performance” and “reducing costs.”

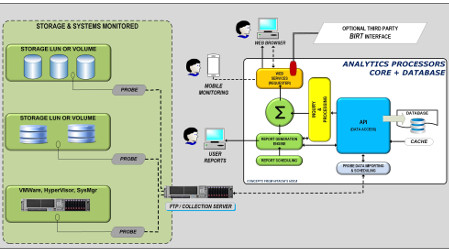

Fig. 1

A storage analysis tool set is designed to complement (i.e., improve upon) the traditional storage vendor-provided management systems. Analytic tools can help discover unclaimed usable storage across all the LUNs or volumes in a storage system, potentially providing significant cost savings to organizations of any scale. When unclaimed storage is repurposed for other functions, users may avoid having to add storage capacity, and may even find that performance improves, depending on what that orphaned storage is repurposed to do.
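As a rough illustration of the unclaimed-storage idea, the sketch below totals the gap between provisioned and allocated capacity per volume. The `Volume` fields, volume names and figures are hypothetical; a real tool would pull these values from the storage system's own reporting interfaces.

```python
from dataclasses import dataclass

@dataclass
class Volume:
    name: str
    capacity_gb: float   # total provisioned size (hypothetical figure)
    allocated_gb: float  # space actually claimed by hosts or applications

def unclaimed_report(volumes):
    """Return (name, unclaimed_gb) pairs, largest first, plus the overall total."""
    rows = [(v.name, v.capacity_gb - v.allocated_gb) for v in volumes]
    rows.sort(key=lambda r: r[1], reverse=True)
    total = sum(gb for _, gb in rows)
    return rows, total

# Made-up inventory mixing SAN, NAS and DAS "islands"
volumes = [
    Volume("san-lun-01", 4000, 3100),
    Volume("nas-edit-02", 8000, 2500),
    Volume("das-ingest-03", 2000, 1900),
]
rows, total = unclaimed_report(volumes)
for name, gb in rows:
    print(f"{name}: {gb} GB unclaimed")
print(f"total unclaimed: {total} GB")
```

Sorting by the largest gap first puts the best repurposing candidates at the top of the report.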

Fig. 1 illustrates an end-to-end data/storage analytics system. Multiple probes within the storage and virtualization hardware (diagram’s left side) collect the data via an FTP (or similar) server. The “Analytics Processor” core and database system (diagram’s right side) is the software-based solution that schedules and generates user reports based upon sampled information collected from the probed devices. The various processors in the core then create the trending, alarming and other data, reporting it to the users’ fixed or mobile devices via web-based interfaces (concepts courtesy of Hitachi Data Systems’ data center monitoring solution). An optional BIRT (Business Intelligence and Reporting Tools) interface may also be added, if desired.

VELOCITY, VARIETY AND CHURN

Trending analysis is another important tool found in most storage analytic toolsets. Key factors that may be helpful include the capability to look at data churn; that is, the “frequency” of change or the “retention time” of the data. This information can be used to adjust hierarchical storage management (HSM) policies or to decide which application uses which data pool sector. As for the “velocity” of the data, is the system I/O optimized for the storage solution? Are there bottlenecks that hinder data transfers from one LUN (array or volume) to another?
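One simple way to express churn is the share of stored bytes modified within a recent window. The following is a minimal sketch under that assumption; the 30-day window, the sample dates and the sizes are invented for illustration, and commercial toolsets may define churn differently.

```python
from datetime import datetime, timedelta

def churn_ratio(files, now, window_days=30):
    """Fraction of stored bytes modified within the last `window_days`.

    `files` is an iterable of (last_modified, size_bytes) pairs.
    """
    cutoff = now - timedelta(days=window_days)
    total = sum(size for _, size in files)
    recent = sum(size for modified, size in files if modified >= cutoff)
    return recent / total if total else 0.0

now = datetime(2024, 1, 31)
files = [
    (datetime(2024, 1, 28), 200),  # edited this week
    (datetime(2023, 6, 1), 700),   # untouched for months
    (datetime(2024, 1, 10), 100),  # edited earlier this month
]
ratio = churn_ratio(files, now)
print(f"30-day churn: {ratio:.0%}")
```

A pool with a consistently low ratio could be a candidate for a slower, cheaper HSM tier, while a high ratio suggests keeping the data on faster storage.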

Also in the toolset is a view into the “variety” of data—i.e., how much of the data is metadata, how much is full-resolution images (video, for example) and how much is proxy resolution (stills, short clips, thumbnails). Since storage should often be optimized for the type of data (small chunks, modest blocks or gigantic contiguous streams of files such as for 4K images), it is helpful to understand where those kinds of data are being placed, especially when you’re merging smaller volumes onto a larger storage pool.
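The “variety” view can be approximated by grouping stored bytes by file type. In this sketch the suffix-to-category mapping is purely an assumption for illustration; an actual facility would map its own codecs, wrappers and sidecar formats.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Hypothetical mapping; adjust to the formats used in a given facility
CATEGORY_BY_SUFFIX = {
    ".mxf": "full_resolution",
    ".mov": "full_resolution",
    ".mp4": "proxy",
    ".jpg": "proxy",
    ".xml": "metadata",
    ".json": "metadata",
}

def variety_breakdown(files):
    """Map category -> share of total bytes for (path, size_bytes) pairs."""
    totals = defaultdict(int)
    for path, size in files:
        suffix = PurePosixPath(path).suffix.lower()
        totals[CATEGORY_BY_SUFFIX.get(suffix, "other")] += size
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {cat: size / grand_total for cat, size in totals.items()}

files = [
    ("/vol1/show/ep01.mxf", 900),
    ("/vol1/show/ep01_proxy.mp4", 80),
    ("/vol1/show/ep01.xml", 20),
]
shares = variety_breakdown(files)
for category, share in shares.items():
    print(f"{category}: {share:.0%}")
```

Running the same breakdown per volume before a merge shows whether small-file metadata and large contiguous media are about to land on the same pool.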

TRENDING AND PERFORMANCE

Performance statistics and reporting are vital components in any suite of analytic tools. Many storage vendor tools provide basic, ad hoc reporting on storage performance. Few provide detailed or in-depth analysis with different levels of user-configurable granularity. The amount of detail available (i.e., the granularity of the information) should be adjustable based upon the system needs or the purpose of the investigation. The statistics used to generate the reports will then meld into the trending analysis programs, so the term of the trend and performance reporting needs to span multiple years.
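Adjustable granularity often comes down to rolling fine-grained samples up into coarser time buckets. The sketch below averages samples into fixed-width buckets; the sample values stand in for a metric such as IOPS and are made up, and `rollup` is a hypothetical helper rather than any vendor's API.

```python
from collections import defaultdict

def rollup(samples, bucket_seconds):
    """Average (timestamp_seconds, value) samples into fixed-width buckets.

    Returns a dict keyed by each bucket's start time, in ascending order.
    """
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[ts - ts % bucket_seconds].append(value)
    return {start: sum(vals) / len(vals) for start, vals in sorted(buckets.items())}

# One-minute samples (hypothetical values) rolled up to two-minute averages
samples = [(0, 100), (60, 140), (120, 90), (180, 110)]
per_two_minutes = rollup(samples, 120)
print(per_two_minutes)
```

Keeping the raw samples and rolling up on demand lets the same data serve both a detailed investigation and a multi-year trend report.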

At some point, storage will need to be either replaced or upgraded (expanded). Trending tools help predict where that point is so that the organization is not caught having to invest in more storage at an inappropriate time. The data points may also help determine when a cloud storage application is appropriate and what volume (capacity) is proper.
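A basic form of this prediction is a straight-line fit to historical usage. The sketch below assumes evenly spaced monthly samples and linear growth, which real workloads may not follow; the usage history and the 200 TB capacity are invented numbers.

```python
def months_until_full(used_tb, capacity_tb):
    """Fit a least-squares line to monthly usage samples and estimate how many
    months after the last sample the pool reaches capacity.

    Returns None if there are too few samples or usage is flat or shrinking.
    """
    n = len(used_tb)
    if n < 2:
        return None
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(used_tb) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, used_tb))
    slope = sxy / sxx  # growth in TB per month
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    month_full = (capacity_tb - intercept) / slope
    return max(0.0, month_full - (n - 1))

history = [100, 110, 120, 130, 140, 150]  # TB used, one sample per month
remaining = months_until_full(history, 200)
print(f"estimated months until full: {remaining:.1f}")
```

The longer the history behind the fit, the more useful the estimate, which is one reason trend data should span multiple years.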

BUSINESS INTELLIGENCE GATHERING

Ancillary products, those generally found useful in business intelligence reporting, are now being adopted throughout data centers and enterprise-wide large storage systems. One example, Business Intelligence and Reporting Tools (BIRT), was developed as an open source software project aimed at providing reporting and business intelligence capabilities for rich client platform (RCP) and web applications. It integrates well with existing Java-based reporting applications. BIRT can be found integrated into the storage analytics tool sets of some vendors, including those that provide data center class storage as well as media-focused storage solutions. For storage analysis applications, BIRT is especially valuable if the organization is already employing BIRT methodologies throughout its other business units.

Intelligent analysis of your storage solution sets is valuable at any stage of the storage life cycle. At configuration, the tools help you analyze and optimize actual performance. At the midpoint of the life cycle, the tools provide benchmarks for peak performance, which can be used to validate or justify the storage solution’s continued use or to tune its applications for better optimization (should workflows change or be added).

Throughout the many storage work cycles, these tools help deliver consistent and concurrent reporting on the storage system’s status. And finally, the tools help to solve the most difficult of performance issues in a timely and convenient manner.

When selecting a storage solution for your organization, ask the vendor about the storage analytics tools available from the provider; then ask which third-party solutions they recommend or know have been added to their systems by other end users. The use of these storage analytic tools, from the start, may make a difference to your storage life cycle management two or more years down the road, and can be used to leverage change or improvements enterprise-wide.

Karl Paulsen is a SMPTE Fellow and chief technology officer at Diversified. For more about this and other storage topics, read his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.