Elements of a Software-Defined Network

Over the past decade we’ve seen a proliferation of servers, often commodity products from the usual sources, added to facilities for nearly every system in the video production equipment room or the delivery network.

Servers, in a general sense, have steadily become the de facto device that provides the operational engine for much of the functionality seen in the modern digital media age.

From an implementation perspective, design practices have added successive layers of hardware (e.g., servers and storage) to a mix that already ranges from a dozen to hundreds of other servers, each intended to support the next set of software applications or implementations.

Historically, each server served its own dedicated purpose within the workflow. Sometimes the same functionality was duplicated across different servers in segments such as ingest, post production or news; now we’re seeing servers used extensively throughout the entire delivery chain.

Each device comes with a primary network connection and configuration. Depending upon the facility’s directives or budget, a secondary network interface to another network segment was sometimes added for protection or overall system resiliency. This continued duplication and individuality of servers, combined with fixed network topologies, is costly and cumbersome to manage.

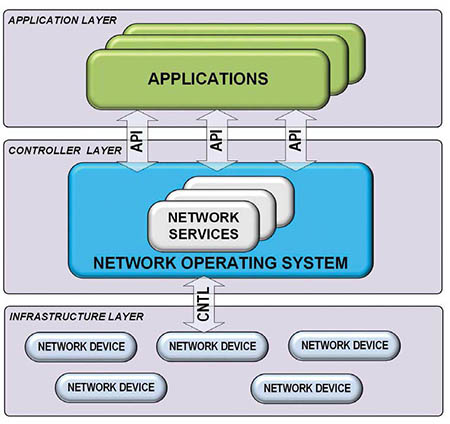

Fig. 1: Software-defined network (SDN) reference architecture

PROFESSIONAL MEDIA NETWORKS

Virtualization is changing this legacy model. When similar functions can be distributed among a pool of servers that can be repurposed once a work task is completed, system efficiency improves and both CapEx and OpEx are reduced. Virtualization, among other factors, aims to reduce the number of hardware components in a facility without reducing performance or workflow support. Yet another evolving concept has grown out of the compute (IT) industry and is filtering its way into professional media networks (PMN) for file-based and real-time video over IP.
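The pooling idea can be illustrated with a minimal Python sketch. The `Server` and `ServerPool` classes, the role names and the first-idle-server assignment rule are hypothetical, chosen only to show a server returning to the pool and being repurposed once its task completes; a real virtualization platform would place workloads on virtual machines with far richer scheduling.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Server:
    """A generic commodity server in a shared pool (hypothetical model)."""
    name: str
    role: Optional[str] = None  # current function, or None when idle


@dataclass
class ServerPool:
    """Hypothetical pool in which any idle server can take on any role."""
    servers: list[Server] = field(default_factory=list)

    def acquire(self, role: str) -> Server:
        # Assign the first idle server to the requested function.
        for server in self.servers:
            if server.role is None:
                server.role = role
                return server
        raise RuntimeError(f"no idle server available for {role!r}")

    def release(self, server: Server) -> None:
        # Once the work task completes, the server returns to the pool
        # and can be repurposed for a different function.
        server.role = None

    def idle_count(self) -> int:
        return sum(1 for s in self.servers if s.role is None)


pool = ServerPool([Server(f"srv{i}") for i in range(3)])
ingest = pool.acquire("ingest")        # srv0
transcode = pool.acquire("transcode")  # srv1
pool.release(ingest)                   # ingest finished; srv0 is free again
playout = pool.acquire("playout")      # srv0 is reused for a new purpose
print(playout.name, pool.idle_count())  # srv0 1
```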

This month’s introduction to SDN follows up on my April 2016 column on data-defined storage. In the IT industry, where entirely software-based systems are rapidly becoming the norm, organizations that wish to accelerate application deployment and delivery, while dramatically reducing their overall IT costs, are transitioning to what is called “software-defined networking,” or SDN. Essentially, SDN provides policy-enabled workflows built on the principles of automation. In cloud-based systems, SDN technology is the catalyst for cloud architectures that provide automated, on-demand application delivery, portability and mobility at scale.

DATA CENTERS AS CLOUDS

In a data center, economies of scale are achieved in part through virtualization, that is, the sharing of like components to provide services as requested by the enabling software-based control and management systems. Referred to as “data center virtualization,” this functionality leverages SDN to increase resource utilization and flexibility while reducing total operating costs, including infrastructure costs and overhead.

Data centers can be considered clouds that may be private (i.e., services provided for internal organizations); public (i.e., anything-as-a-service [XaaS] offerings provided to others for a fee); or hybrid (where excess capacity built for private services is sold publicly to entities outside the internal organization). Regardless of scale, cloud data centers all function in essentially the same way.

Typical cloud computing infrastructures, large sets of identical or very similar servers, live on large blobs (Binary Large OBjects), which form the common server structures. A blob is a collection of binary data stored as a single entity in a database management system. Blobs are usually composed of images, audio or other multimedia objects; binary executable code may also be stored as a blob.
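As a minimal illustration of the database sense of the term, the Python sketch below stores a few bytes in a SQLite `BLOB` column using the standard-library `sqlite3` module and reads them back unchanged. The table name, column names and sample bytes are hypothetical.

```python
import sqlite3

# In-memory database for illustration; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE media_objects (id INTEGER PRIMARY KEY, name TEXT, payload BLOB)")

# Any binary content (an image, an audio clip, executable code) is stored as one opaque value.
data = bytes([0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A])  # the 8-byte PNG file signature
conn.execute("INSERT INTO media_objects (name, payload) VALUES (?, ?)", ("thumb.png", data))
conn.commit()

# The blob comes back as a single bytes object, identical to what was stored.
(payload,) = conn.execute(
    "SELECT payload FROM media_objects WHERE name = ?", ("thumb.png",)
).fetchone()
print(type(payload).__name__, len(payload), payload == data)  # bytes 8 True
conn.close()
```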

In a data center/cloud environment, myriad servers evolve much faster than in a traditional IT infrastructure built for specific functionality (such as compute, back office or the like). Thus, blobs are not necessarily homologous to one another (i.e., they may not correspond in position, value, structure or function). Within each blob, however, server groups are fundamentally identical. For example, they are based on the x64 architecture and share similar features, such as peripheral component interconnect express (PCIe), serial advanced technology attachment (SATA) and Ethernet interconnects, which are structured roughly the same way across repeated subsystems.

This gives the data center/cloud the ability to scale: to grow quickly without continually modifying the physical (or software) infrastructure to “scale up” to meet demand.

The management component that orchestrates these capabilities is built on software-defined solutions, including network components and virtual machines.

WORKFLOW DEFINED

An SDN allows systems to develop and adapt to varying workflows, the series of activities necessary to complete a task. Workflows are usually composed of a series of stages or steps, each preceded by a distinct step (except the first) and followed by another step (except the last).

Workflow steps are usually linear, but may also include looped steps with decision trees that allow the sequence to exit the loop and continue to the next step (or workflow) upon meeting certain conditions.
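The step-and-loop structure described above can be sketched as a small Python workflow runner. The `Step` type, the `until` exit condition and the `max_loops` safeguard are assumptions made for illustration, not part of any particular orchestration product.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Job = dict  # shared state passed from step to step (hypothetical)


@dataclass
class Step:
    name: str
    action: Callable[[Job], None]
    # If set, the step repeats until this condition returns True (a looped step).
    until: Optional[Callable[[Job], bool]] = None
    max_loops: int = 10  # safeguard against a loop that never exits


def run(steps: list[Step], job: Job) -> list[str]:
    trace = []
    for step in steps:  # linear order: each step follows the one before it
        loops = 0
        while True:
            step.action(job)
            trace.append(step.name)
            loops += 1
            if step.until is None or step.until(job):
                break  # plain step, or exit condition met: move to the next step
            if loops >= step.max_loops:
                raise RuntimeError(f"step {step.name!r} never met its exit condition")
    return trace


def fix_one_error(job: Job) -> None:
    job["errors"] -= 1


steps = [
    Step("ingest", lambda job: None),
    Step("qc_and_fix", fix_one_error, until=lambda job: job["errors"] == 0),
    Step("deliver", lambda job: None),
]
print(run(steps, {"errors": 2}))
# ['ingest', 'qc_and_fix', 'qc_and_fix', 'deliver']
```

The loop exits as soon as its condition is satisfied and the sequence continues to the next step, mirroring the decision point described in the text.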

Workflows can be complicated and should be documented so they can be understood by people or integrated with computer software that uses a human-readable markup such as XML (eXtensible Markup Language). The written documentation is based on business processes modeled using the principles of business process management (BPM).
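To show how a human-readable description can also be consumed by software, the sketch below parses a small XML workflow with Python’s standard `xml.etree.ElementTree`. The `<workflow>` and `<step>` elements and the `loopUntil` attribute are invented for this example; they are not BPMN or any other standard schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical workflow description; element and attribute names are illustrative only.
WORKFLOW_XML = """
<workflow name="news-ingest">
  <step id="ingest"/>
  <step id="transcode"/>
  <step id="qc" loopUntil="no-errors"/>
  <step id="publish"/>
</workflow>
"""


def load_workflow(xml_text: str) -> tuple[str, list[dict]]:
    root = ET.fromstring(xml_text.strip())
    steps = [
        # get() returns None when the attribute is absent (a plain, non-looping step).
        {"id": el.get("id"), "loop_until": el.get("loopUntil")}
        for el in root.findall("step")  # document order is taken as execution order
    ]
    return root.get("name"), steps


name, steps = load_workflow(WORKFLOW_XML)
print(name, [s["id"] for s in steps])
# news-ingest ['ingest', 'transcode', 'qc', 'publish']
```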

HYPER-CONVERGENCE AND ORCHESTRATION PLATFORMS

For a cloud or cloud-like implementation, the controlling software is called the “cloud orchestrator.” This platform manages the interconnections and interactions among cloud-based and on-premises business units. Orchestration may be applied to one or many sets of servers, but it is most useful when applied to a system of servers that, by themselves, have almost no understanding of what adjacent servers are to accomplish now or in the future.

Business objectives (e.g., a global ingest platform that brings content into a central repository) drive how the orchestration works and how workflows are developed (Fig. 1). SDN accomplishes these objectives by converging the management of network and application services into centralized, extensible orchestration platforms that automate provisioning and configuration of the complete infrastructure. Centralized policies collectively bring together disparate groups and workflows so they can deliver new applications and services in minutes, rather than the days or weeks required in legacy systems.
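One way an orchestration platform can apply centralized policy is desired-state reconciliation: the policy declares what should exist, and the platform computes the changes needed to get there. The Python sketch below assumes that approach (it is not taken from this column); the service names, resource kinds and counts are hypothetical.

```python
# Hypothetical centralized policy: desired resources per service.
POLICY = {
    "global-ingest": {"vm": 4, "vlan": 1},
    "transcode": {"vm": 2, "vlan": 1},
}


def plan(policy: dict, current: dict) -> list[tuple[str, str, str, int]]:
    """Return the create/delete actions that move `current` to the state `policy` declares."""
    actions = []
    for service in sorted(set(policy) | set(current)):
        wanted = policy.get(service, {})  # empty if the policy no longer lists this service
        have = current.get(service, {})
        for kind in sorted(set(wanted) | set(have)):
            delta = wanted.get(kind, 0) - have.get(kind, 0)
            if delta > 0:
                actions.append(("create", service, kind, delta))
            elif delta < 0:
                actions.append(("delete", service, kind, -delta))
    return actions


current = {"global-ingest": {"vm": 2, "vlan": 1}, "legacy-archive": {"vm": 1}}
for action in plan(POLICY, current):
    print(action)
# ('create', 'global-ingest', 'vm', 2)
# ('delete', 'legacy-archive', 'vm', 1)
# ('create', 'transcode', 'vlan', 1)
# ('create', 'transcode', 'vm', 2)
```

Running `plan` again against an infrastructure that already matches the policy yields no actions, which is what lets an automated platform apply the same policy repeatedly.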

Depending upon its scale, this concept may also be known as “hyper-convergence,” a form of infrastructure with a software-centric architecture that tightly integrates storage, networking and virtualization resources (alongside other technologies) in a commodity hardware-based system, usually managed under some form of SDN.

SPEED, AGILITY AND FLEXIBILITY

When deploying new applications and business services, SDN enables the system to deliver speed and agility using existing components: servers, storage and network switching. Programmability of these components is a key characteristic of a software-defined solution.

Another way to look at this: various applications reside on servers that the orchestration platform can administer (delegate) as needed, while the SDN manages the associated server communications, instructions, process loading and data steering throughout the network.
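The data-steering role described above is often explained with match-action flow tables of the kind popularized by OpenFlow: a centralized controller installs rules, and switches only apply them. The Python sketch below is a simplified model under that assumption; the `FlowRule`, `Switch` and `Controller` classes, IP addresses and port numbers are hypothetical and do not reflect any real controller’s API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FlowRule:
    priority: int
    match: dict[str, object]  # header fields that must all match
    out_port: int             # action: forward out of this port


@dataclass
class Switch:
    """Forwarding plane only: applies rules but never decides them."""
    name: str
    table: list[FlowRule] = field(default_factory=list)

    def forward(self, packet: dict[str, object]) -> Optional[int]:
        # The highest-priority rule whose fields all match the packet wins.
        for rule in sorted(self.table, key=lambda r: r.priority, reverse=True):
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.out_port
        return None  # table miss; a real switch follows its configured miss behavior


class Controller:
    """Centralized control plane: steers traffic by programming switch tables."""

    def __init__(self, switches: list[Switch]) -> None:
        self.switches = {s.name: s for s in switches}

    def steer(self, switch_name: str, match: dict[str, object],
              out_port: int, priority: int = 100) -> None:
        self.switches[switch_name].table.append(FlowRule(priority, match, out_port))


edge = Switch("edge-1")
ctl = Controller([edge])
ctl.steer("edge-1", {"dst_ip": "10.0.0.20"}, out_port=3)
ctl.steer("edge-1", {"dst_ip": "10.0.0.20", "dst_port": 5004}, out_port=7, priority=200)
print(edge.forward({"dst_ip": "10.0.0.20", "dst_port": 5004}))  # 7
print(edge.forward({"dst_ip": "10.0.0.20", "dst_port": 80}))    # 3
print(edge.forward({"dst_ip": "10.0.0.99"}))                     # None
```

Because the more specific rule carries a higher priority, it wins over the general rule for the same destination; in an SDN that per-flow decision is made centrally rather than configured switch by switch.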

SDN concepts are core to making emerging studio video over IP (SVIP) systems work using common Ethernet switches configured according to specific standards, some of which are currently in development.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified. Read more about this and other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@diversifiedus.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.