Getting a Handle on the Power

Tomorrow’s broadcast equipment rooms may look more like today’s data centers. As broadcast equipment moves closer to information technology (IT)-based systems, the way we design, build and monitor these rooms, and the way we account for their operational costs, is expected to change in near lockstep with the technology we are going to be building, or are already building. With operational costs continuing to climb, items such as power, cooling, real estate footprint and even redundancy are likely to be watched more closely than ever before.

Many of the considerations for cost monitoring and control are fairly obvious. For example, the overall power usage of each equipment rack determines the amount of cooling required; but rack power is not the only factor in understanding cooling and the power it takes to drive that cooling. As these spaces begin to look more like data centers than broadcast central equipment rooms (CER), the techniques data centers use to manage metrics such as Power Usage Effectiveness (PUE) become disciplines we will need to manage continually.

VIRTUALIZATION ATTENTION

Efficient utilization of the myriad servers now being installed in the CER has a direct impact on balancing power usage against the practical needs of each system they support. This is why “virtualization” is getting more attention now than in the past. Many of the services used in the broadcast plant seldom run at full capacity. Products that were once available only as dedicated hardware are now being ported to software-only versions that can be loaded onto on-premises commercial off-the-shelf (COTS) hardware, or even placed in the cloud.

Since the main point is to get the best use out of each device, there will eventually come a point where groups of servers that have dedicated functions, but run only 25–35 percent of the time, will be consolidated and managed by systems that allocate services on demand.

For example, if three servers each run at peak only 35 percent of the time, it may be possible to move those services onto a single server by throttling the workload and managing utilization from a virtualized, pooled-resource perspective.
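The three-server example is simple arithmetic. The sketch below is a minimal illustration, assuming each server's average loading can be expressed as a percentage of one identical host and that loads simply add (it ignores hypervisor overhead and whether peaks coincide). The function name `consolidated_load` is hypothetical.

```python
def consolidated_load(loads_percent, host_capacity_percent=100.0):
    """Sum the average loads of several dedicated servers, in percent of one
    identical host, and return (total_load, headroom).

    Assumes loads add linearly; a negative headroom means the combined work
    would have to be throttled or shifted in time to fit on one host.
    """
    total = sum(loads_percent)
    return total, host_capacity_percent - total


# Three dedicated servers, each assumed to average 35 percent loading.
total, headroom = consolidated_load([35.0, 35.0, 35.0])
print(f"combined load: {total:.0f}%, headroom: {headroom:.0f}%")
```

With three servers at 35 percent, the combined load comes to 105 percent, slightly more than one host can carry, which is why throttling enters the picture.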

UTILIZATION FACTORS

Media asset management systems and news editorial systems are notorious for throwing all sorts of servers into their workflows while seldom getting much more than 50 percent utilization during average work periods. Granted, there are times when certain functions require those servers to run at nearly 100 percent; but that is when a virtualized environment makes its best showing.

For this example, assume there are 30 servers in a broadcast facility (which, frankly, is now a low number). Picture the server mix as 10 servers doing ingest, another 10 doing processing and the last 10 split between output-conforming and shuffling media to and from storage. Ingest is likely to be unpredictable, so expect those 10 servers to be loaded 80–90 percent of the time. The processing servers are entirely workflow-governed and probably get the lowest utilization (or “loading”), somewhere around 20–25 percent.

Finally, the servers dedicated to output-conforming and media shuffling are not much better at resource utilization than the processing servers; they run at only around 35–50 percent loading.
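The 30-server mix can be tabulated to see how much idle time each group carries. This sketch uses only the loading ranges given in the example; the `ServerGroup` class and the "idle at least half the time" test are illustrative choices, not part of any monitoring product.

```python
from dataclasses import dataclass


@dataclass
class ServerGroup:
    name: str
    count: int
    load_low: float   # lower bound of typical loading, percent
    load_high: float  # upper bound of typical loading, percent

    def idle_range(self):
        """Idle time (percent) implied by the loading range: (min, max)."""
        return 100.0 - self.load_high, 100.0 - self.load_low


# Loading ranges taken from the example in the text.
fleet = [
    ServerGroup("ingest", 10, 80.0, 90.0),
    ServerGroup("processing", 10, 20.0, 25.0),
    ServerGroup("output/shuffle", 10, 35.0, 50.0),
]

for g in fleet:
    lo, hi = g.idle_range()
    print(f"{g.name:15s} {g.count:3d} servers  idle {lo:.0f}-{hi:.0f}%")

# Count servers that are idle at least half the time even at the top of their range.
mostly_idle = sum(g.count for g in fleet if g.idle_range()[0] >= 50.0)
fleet_size = sum(g.count for g in fleet)
print(f"{mostly_idle} of {fleet_size} servers idle at least half the time")
```

Only the ingest group is busy most of the time; the processing and output groups together account for 20 of the 30 servers.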

It doesn’t take rocket science to see that two-thirds of the servers (the 20 doing processing, output-conforming and media shuffling) sit idle at least half the time. So why not consolidate the services onto fewer servers and begin to cross-utilize the hardware, finding software that can distribute the load across only 20 servers?

If the processing services (e.g., transcoding, proxy generation or quality control) and the output-conforming services could run on the same servers, then the utilization of those 10 servers would rise from 20–50 percent to 55–75 percent. This still leaves enough headroom to turn up one set of services during a peak period, or to turn down other services and shift the application resources to less-loaded servers.
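The consolidation above can also be read as a sizing question: how many servers are needed to carry the combined work without any of them exceeding a chosen ceiling? The sketch assumes identical servers, workloads that add linearly and a hypothetical target ceiling; `servers_needed` is an illustrative name.

```python
import math


def servers_needed(groups, target_load_percent):
    """Estimate how many identical servers can carry the combined work of
    several groups without any server exceeding target_load_percent.

    groups: list of (server_count, load_percent) pairs.
    """
    total_work = sum(count * load for count, load in groups)  # in "server-percent"
    return math.ceil(total_work / target_load_percent)


# Worst case from the text: 10 processing servers at 25 percent and
# 10 output-conforming servers at 50 percent.
groups = [(10, 25.0), (10, 50.0)]
print(servers_needed(groups, 75.0))  # -> 10
```

At a 75 percent ceiling, the worst-case work of 20 servers fits on 10; lowering the ceiling to 60 percent for more headroom raises the estimate to 13.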

SPREADING EFFICIENCIES AROUND

If you could apply this concept across even half of the servers in a modern IT-based facility, utilization efficiency would increase and the amount of power wasted by servers doing “virtually nothing” would go way down. In the average data center, about 50 percent of the power consumed goes to the compute side (i.e., servers doing their job). Roughly one third goes to cooling those servers, with the remaining 17 percent going to other items, including lighting and resistive (I²R) losses in the electrical wiring.
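PUE is defined as total facility power divided by the power delivered to IT equipment, so a value of 1.0 would mean no overhead at all. Applying that definition to the rough breakdown above gives a PUE of about 2.0. This is a minimal sketch; the 100 kW facility size and the reduced-cooling case are hypothetical.

```python
def pue(it_kw, cooling_kw, other_kw):
    """Power Usage Effectiveness: total facility power divided by IT power."""
    return (it_kw + cooling_kw + other_kw) / it_kw


# Shares from the text, treated as kW out of a 100 kW facility:
# about 50 compute, about 33 cooling, about 17 lighting and wiring losses.
print(round(pue(50.0, 33.0, 17.0), 2))  # -> 2.0

# Hypothetical: cooling trimmed to 25 kW with the same IT load.
print(round(pue(50.0, 25.0, 17.0), 2))  # -> 1.84
```

PUE measures only overhead relative to IT load. Servers idling at low utilization still count as IT load, so consolidation and PUE improvements complement each other rather than substitute for each other.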

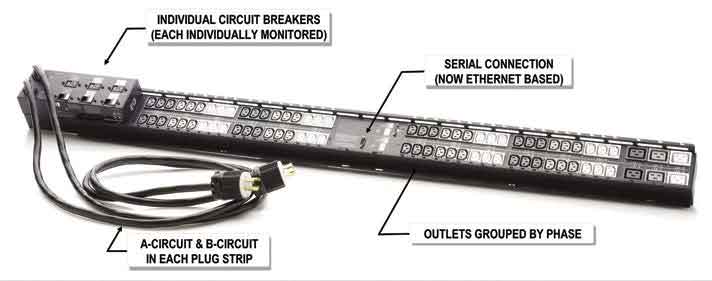

Fig 1: Monitored power distribution unit (PDU), or “plug strip,” with serial or Ethernet monitoring. It reports the voltage, current and power factor for each outlet, along with the inlet power, for utilization comparison and per-device loading.

What can be done to monitor and control these (in)efficiencies? One solution is to install monitored PDUs (also known as “cabinet distribution units”) in the data center, which can monitor each power outlet and each branch circuit (Fig. 1). This data is fed to a building management system (BMS), which can then track each device in the CER by time, consumption and other dimensions.

In this model, it is also important to measure the current, voltage and power factor at the inlet of each power strip, a technique known as “per inlet power sensing.” This lets the BMS compare the power coming into each strip with the power actually used by the devices plugged into it.
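The per-outlet and inlet measurements can be combined in a few lines. For a single-phase circuit, real power is voltage times current times power factor; summing the outlets and subtracting from the inlet shows how much power is not accounted for by the monitored devices. This sketch assumes single-phase readings; the `Reading` class, outlet names and values are hypothetical and do not represent any PDU vendor's API.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    volts: float
    amps: float
    power_factor: float

    @property
    def watts(self):
        """Real power for a single-phase circuit: P = V x I x PF."""
        return self.volts * self.amps * self.power_factor


def inlet_vs_outlets(inlet, outlets):
    """Return (inlet watts, summed outlet watts, unaccounted watts)."""
    used = sum(r.watts for r in outlets.values())
    return inlet.watts, used, inlet.watts - used


# Hypothetical readings from one monitored PDU.
outlets = {
    "server-01": Reading(208.0, 1.5, 0.95),
    "server-02": Reading(208.0, 0.8, 0.93),
}
inlet = Reading(208.0, 2.4, 0.94)

power_in, power_used, gap = inlet_vs_outlets(inlet, outlets)
print(f"in {power_in:.0f} W, used {power_used:.0f} W, difference {gap:.0f} W")
```

Logged over time, a growing gap between inlet and outlet totals can point to unmonitored loads or losses in the strip itself.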

PEAK LOAD OR LOW USAGE

Once monitoring is configured, each device can be tracked against time and workload. Because a server’s power draw rises and falls with the amount of computing it performs, a simple power monitoring application (typically found in a BMS) can determine when peak loads or periods of low utilization occur. Over time, as opportunities arise to transform the “single function per device” model into a “shared resource” model, shifting certain services to different times, or reallocating the functions of a pool of servers to a virtualized set of services, can reshape the facility’s power efficiency curve.
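Finding peak and low-utilization periods in logged power data can be as simple as comparing each sample with the range observed. This sketch illustrates one approach and is not a feature of any particular BMS: it assumes hourly average readings in watts and uses hypothetical thresholds (the top and bottom 20 percent of the observed range).

```python
def classify_samples(samples_watts, high=0.8, low=0.2):
    """Label each power sample 'peak', 'low' or 'normal'.

    Each sample is placed on a 0.0-1.0 scale between the quietest and the
    busiest reading; the thresholds are illustrative assumptions.
    """
    quietest, busiest = min(samples_watts), max(samples_watts)
    span = (busiest - quietest) or 1.0  # avoid dividing by zero on a flat trace
    labels = []
    for watts in samples_watts:
        position = (watts - quietest) / span
        if position >= high:
            labels.append("peak")
        elif position <= low:
            labels.append("low")
        else:
            labels.append("normal")
    return labels


# Hypothetical hourly averages (watts) for one server across an eight-hour shift.
hourly = [180, 185, 190, 320, 410, 400, 260, 200]
for hour, label in enumerate(classify_samples(hourly)):
    print(f"hour {hour}: {hourly[hour]} W -> {label}")
```

Scaling against the observed range, rather than against zero, keeps the classification meaningful for servers that draw substantial power even when idle.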

If you live in an area where part of the commercial utility bill is based on demand (the peak rate of consumption) rather than only on total energy used, leveling power usage across the most expensive periods could lower your overall utility bill, since power loading and cooling would be spread more uniformly across the day and night. Reducing swings in the power delivered can add up over the course of a billing period.
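Demand-based billing rewards a flatter load profile. Many commercial tariffs set billing demand from the highest average power over a short interval (15 minutes is common), but the rules vary by utility. This sketch assumes that rule, a hypothetical demand rate and two made-up profiles that consume the same energy; only the peak differs.

```python
def energy_kwh(interval_kw, minutes_per_interval=15):
    """Energy in kWh from a list of average-kW readings, one per interval."""
    return sum(interval_kw) * minutes_per_interval / 60.0


def demand_charge(interval_kw, dollars_per_kw):
    """Demand charge, assuming billing demand is the highest interval average."""
    return max(interval_kw) * dollars_per_kw


# Hypothetical 15-minute averages (kW) during the busiest hour of a billing period.
spiky = [20.0, 60.0, 20.0, 20.0]
leveled = [30.0, 30.0, 30.0, 30.0]
DEMAND_RATE = 15.0  # hypothetical $ per kW of billing demand

for name, profile in (("spiky", spiky), ("leveled", leveled)):
    print(f"{name:8s} energy {energy_kwh(profile):.1f} kWh  "
          f"demand charge ${demand_charge(profile, DEMAND_RATE):.2f}")
```

Both profiles use 30 kWh, but under these assumptions the leveled one halves the demand charge.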

These concepts may not seem especially relevant today, but over the long haul, and as conditions change, adding power and building monitoring capabilities could make a real difference in the operational costs of the CER or data center. Most new “greenfield” facilities are already putting these monitoring capabilities in place, knowing that secondary advantages may come into play years later.

Karl Paulsen is a SMPTE Fellow and chief technology officer at Diversified. For more about this and other storage and media topics, read his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@diversifiedus.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.