Managing lip sync

Technological progress often seems to occur haltingly: two steps forward, one step back. With the advent of digital processing, video processing began to take longer than audio processing, and lip sync has become a critical issue. Some consumer electronics manufacturers deny that there is any issue at all, believing (or pretending) that the A/V timing difference in their units is imperceptible. Knowing how to measure A/V delay, and how to compensate for it, has become increasingly important.

Sensitivity well known, but not precisely defined

Efforts to characterize our sensitivity to the alignment of sound and picture include early work at Bell Laboratories. For film, ITU-R Recommendation BR.265-9 (and its earlier versions) states that the sound record should be located relative to the corresponding picture to within ±0.5 film frames, or about ±22ms.

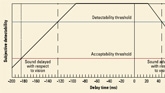

In 1998, ITU-R published Recommendation BT.1359, which addresses the relative timing of sound and vision for broadcasting. Studies by the ITU and others have suggested that the thresholds of detectability for timing errors are about +45ms to -125ms, and the thresholds of acceptability are about +90ms to -185ms, where positive values mean that sound leads picture and negative values mean that it lags. (See Figure 1.)

Other research shows similar but not identical results; because these are measures of human perception, we should expect some variation. The ATSC Implementation Subcommittee, in its finding IS-191, concluded that under all operational situations, the sound program should never lead the video program by more than 15ms and should never lag the video program by more than 45ms (±15ms). According to IS-191, BT.1359 “was carefully considered and found inadequate for purposes of audio and video synchronization for DTV broadcasting.”
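To make these windows concrete, here is a minimal Python sketch that checks a measured A/V offset against them. It assumes the BT.1359 sign convention (positive values mean sound leads picture) and simply encodes the figures cited in this article; the ±15ms tolerance attached to the IS-191 limits is not modeled, and the names `WINDOWS_MS` and `check_offset` are illustrative only.

```python
# Illustrative A/V timing windows, in milliseconds, using the figures cited
# in this article. Convention assumed here: positive = sound leads picture,
# negative = sound lags picture.
WINDOWS_MS = {
    "ATSC IS-191": (-45.0, 15.0),
    "BT.1359 detectability": (-125.0, 45.0),
    "BT.1359 acceptability": (-185.0, 90.0),
}


def check_offset(offset_ms: float) -> dict:
    """Report, for each window, whether a measured offset falls inside it."""
    return {
        name: low <= offset_ms <= high
        for name, (low, high) in WINDOWS_MS.items()
    }


if __name__ == "__main__":
    # Sound arriving 60 ms after the picture (a 60 ms lag).
    print(check_offset(-60.0))
```

A 60ms lag fails the IS-191 window but stays within both BT.1359 thresholds, which shows how much tighter the ATSC target is.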

Notwithstanding this and other work, some TV manufacturers still claim that there is no data available to provide a normative reference.

Many sources of the problem

Anywhere video is processed, there will be a delay in the signal. Processing filters, format conversion, compression — all of these will add delay to the signal, perhaps as little as a few pixels or one line of video, or perhaps as long as many frames of video. Although faster processors and clever algorithms can minimize these delays, they can never completely eliminate them.

Even a simple digital filter computes each output from several input samples weighted by coefficients (“taps”), so it must hold samples in memory, and that means some amount of delay to the signal. Cascade enough of these systems, and the delays add up. Ignore the delays, and you have audio and video out of sync.
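The delay a filter adds can be seen directly. For a linear-phase FIR filter (symmetric coefficients) with N taps, the delay is (N - 1)/2 samples, and cascaded stages add. The sketch below is a plain-Python illustration rather than any particular product's design: it filters an impulse and finds where the response peaks. The 5-tap kernel is an arbitrary example.

```python
def fir_filter(x, taps):
    """Direct-form FIR filter: y[n] = sum_k taps[k] * x[n - k], zero initial state."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k, h in enumerate(taps):
            if n - k >= 0:
                acc += h * x[n - k]
        y.append(acc)
    return y


def linear_phase_delay(taps):
    """Group delay, in samples, of a symmetric (linear-phase) FIR filter."""
    return (len(taps) - 1) / 2


if __name__ == "__main__":
    taps = [1, 2, 3, 2, 1]            # arbitrary symmetric 5-tap kernel
    impulse = [1.0] + [0.0] * 15

    once = fir_filter(impulse, taps)
    twice = fir_filter(once, taps)    # two identical stages in cascade

    print(once.index(max(once)), linear_phase_delay(taps))        # peak at 2 samples
    print(twice.index(max(twice)), 2 * linear_phase_delay(taps))  # peak at 4 samples
```

At the 13.5MHz luma sampling rate of standard-definition digital video, for example, a 31-tap horizontal filter would delay the signal by 15 samples, a little over 1µs; a vertical filter delays by whole lines, and a temporal filter by whole fields or frames.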

Compressed video brings yet another difficulty to the scene — variable delays. Since the amount of compression varies with video material, the instantaneous compressed bit rate (bits per frame, for instance) will vary as well. In order to use bandwidth efficiently, the rate needs to be smoothed to an overall constant bit rate, and that means that the delay will vary.
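A toy model shows why smoothing makes the delay vary. Assume, for illustration only, that each coded frame enters a buffer at the start of its frame period and that the channel drains the buffer at a constant rate; a large frame, such as an intra-coded frame, then holds up the frames behind it. The frame sizes below are made-up numbers, and real encoders follow the more detailed buffer model defined in the MPEG standards (the Video Buffering Verifier in MPEG-2 video).

```python
def cbr_buffer_delays(frame_bits, channel_bits_per_frame):
    """Delay of each frame through a constant-rate smoothing buffer.

    Simplified model: frame i enters the buffer at t = i (in frame periods)
    and leaves once the channel has sent all of its bits. Returns each
    frame's delay in frame periods.
    """
    delays = []
    channel_free_at = 0.0  # time when the channel finishes the previous frame
    for i, bits in enumerate(frame_bits):
        start = max(channel_free_at, float(i))
        finish = start + bits / channel_bits_per_frame
        delays.append(finish - i)
        channel_free_at = finish
    return delays


if __name__ == "__main__":
    # Hypothetical sizes: a 400-bit intra frame every third frame, 100 otherwise.
    sizes = [400, 100, 100, 400, 100, 100]
    print(cbr_buffer_delays(sizes, channel_bits_per_frame=200))
```

With these numbers the buffer delay cycles through 2, 1.5 and 1 frame periods, even though the average input rate exactly matches the 200-bit-per-frame channel.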

The delays in a well-designed system should be known (to the designer), and should be compensated between audio and video.
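When every stage's delay is known, compensation is simple bookkeeping: sum each path and delay the faster one by the difference. This minimal sketch assumes fixed, known per-stage delays; the stage names and millisecond values are hypothetical, and a 48kHz audio sampling rate is assumed for the conversion to samples.

```python
def audio_compensation_ms(video_delays_ms, audio_delays_ms):
    """Extra audio delay (ms) that re-aligns sound with picture.

    A negative result means the audio path is already the slower one, so the
    video, not the audio, would need the extra delay.
    """
    return sum(video_delays_ms.values()) - sum(audio_delays_ms.values())


def ms_to_samples(delay_ms, sample_rate_hz=48_000):
    """Convert a delay to a whole number of audio samples (rounded)."""
    return round(delay_ms * sample_rate_hz / 1000)


if __name__ == "__main__":
    # Hypothetical stage delays, for illustration only (not measured values).
    video = {"frame synchronizer": 33, "format converter": 67, "encoder": 500}
    audio = {"embedder": 1, "encoder": 300}

    extra = audio_compensation_ms(video, audio)
    print(extra, ms_to_samples(extra))
```

Here the video path totals 600ms and the audio path 301ms, so the audio needs 299ms of added delay.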

In a compression system such as the one in Figure 2, the encoding delay (“A” to “B” in the figure) is not precisely known, due to the nonconstant instantaneous compression rate. Similarly, the decoding delay (from “C” to “D”) is not defined. Nonetheless, the entire system works (if designed properly), because of the time stamping mechanism, such as that used in the MPEG standards. Thus, the delay from “A” to “D” should be fixed, and the presentation of audio and video should be aligned if the encoder and decoder are both operating correctly.
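The time stamp idea can be sketched in a few lines. In MPEG-2 systems, presentation time stamps (PTS) count a 90kHz clock, and a decoder that honors them presents each audio and video unit at its stamped time, no matter how long decoding took, provided it finished in time. The sketch below assumes a fixed decoder start-up offset and ignores 33-bit PTS wraparound and clock recovery; the function name is illustrative. It also shows the failure mode: a unit decoded late is presented late.

```python
PTS_CLOCK_HZ = 90_000  # MPEG-2 systems time stamps count a 90 kHz clock


def presentation_times(pts_list, decode_done_s, startup_offset_s):
    """Presentation time (s) of each unit in a simplified decoder model.

    Each unit is scheduled at PTS / 90 kHz plus a fixed start-up offset. If
    decoding finishes after that instant, the unit can only be shown late,
    which is one way a decoder can introduce lip sync error.
    """
    times = []
    for pts, done in zip(pts_list, decode_done_s):
        scheduled = pts / PTS_CLOCK_HZ + startup_offset_s
        times.append(max(scheduled, done))
    return times


if __name__ == "__main__":
    video_pts = [0, 3600, 7200]   # 25 frame/s video: 3600 ticks per frame
    audio_pts = [0, 2880, 5760]   # 32 ms audio frames: 2880 ticks each

    # Decode completion times vary, but all finish before their deadlines.
    video = presentation_times(video_pts, [0.10, 0.30, 0.45], 0.5)
    audio = presentation_times(audio_pts, [0.05, 0.20, 0.40], 0.5)
    print(video[0] == audio[0])   # True: first video and audio frames coincide

    # A video frame whose decoding finishes at 0.60 s misses its 0.54 s slot.
    late = presentation_times(video_pts, [0.10, 0.60, 0.45], 0.5)
    print(round((late[1] - video[1]) * 1000))  # 60 (ms late)
```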

However, proper decoder timing reconstruction is not required for compliance. There is no “timing conformance” that must be demonstrated to any authority in order to build or license an MPEG- or ATSC-compliant product. And several experts believe decoders may be a significant contributor to the problem.

Yet another problem arises if bit stream splicers are used to feed the transmission chain. In that case, the A/V delay can actually jump to a different value when the new stream is spliced in. On the display side, video processing delays become significant for LCD and plasma display panels (PDPs), where memory-based video-processing algorithms, as well as panel response times, can cause a delay of more than 100ms.

Measurement, correction tools emerging

Various technologies currently exist that can analyze, measure and correct lip sync error. One measurement system uses a special test signal that synchronizes a video “flash” and audio tone burst. The two signals can be monitored on an oscilloscope to determine the delay between them. Of course, this process is intrusive and cannot be done with on-air programs.
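The flash-and-beep measurement can also be automated in software once the test signal has been captured. This minimal sketch rests on simple assumptions: one average-luma value per video frame, a mono list of audio samples, fixed detection thresholds, and the convention that a positive result means sound leads picture. Its resolution is limited to one frame period on the video side, and the function names are illustrative.

```python
def first_index_above(values, threshold):
    """Index of the first value whose magnitude exceeds the threshold."""
    for i, v in enumerate(values):
        if abs(v) > threshold:
            return i
    raise ValueError("no onset found above threshold")


def flash_beep_offset_ms(frame_luma, frame_rate, audio, sample_rate,
                         luma_threshold=128, audio_threshold=0.1):
    """A/V offset in ms; positive means the tone burst precedes the flash."""
    video_onset_s = first_index_above(frame_luma, luma_threshold) / frame_rate
    audio_onset_s = first_index_above(audio, audio_threshold) / sample_rate
    return (video_onset_s - audio_onset_s) * 1000.0


if __name__ == "__main__":
    # Flash (white frames) starting at frame 10 of a 25 frame/s capture.
    luma = [16] * 10 + [235] * 3 + [16] * 12
    # 1 kHz square-wave tone burst starting at sample 21600 of a 48 kHz capture.
    burst = [0.5 if (n // 24) % 2 == 0 else -0.5 for n in range(4800)]
    audio = [0.0] * 21600 + burst

    print(round(flash_beep_offset_ms(luma, 25, audio, 48_000)))  # -50: sound lags
```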

Another scheme uses an active element to tag the audio and video at an upstream point, which sets a reference for the A/V alignment. These tags are then sensed downstream and compared to the initial reference. Any difference in the timing is then relayed to the operator. In more sophisticated systems, the accumulated delay can be signaled to a corrective device to compensate for the differential delay, usually by altering the audio delay in a memory-based digital delay line.
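The corrective element in such systems is often an adjustable audio delay line. Here is a minimal sketch, assuming sample-by-sample processing and a circular buffer sized for the largest delay needed; the class name and interface are illustrative, not any product's. At 48kHz, for example, 45ms corresponds to 2,160 samples.

```python
class AudioDelayLine:
    """Adjustable digital audio delay line built on a circular buffer."""

    def __init__(self, max_delay_samples):
        # One extra slot so a sample written max_delay_samples ago survives.
        self._buf = [0.0] * (max_delay_samples + 1)
        self._write = 0
        self.delay = 0

    def set_delay(self, samples):
        """Set the delay in samples (0 .. max_delay_samples)."""
        if not 0 <= samples < len(self._buf):
            raise ValueError("delay out of range")
        self.delay = samples

    def process(self, x):
        """Write one input sample and return the sample from `delay` steps ago."""
        self._buf[self._write] = x
        read = (self._write - self.delay) % len(self._buf)
        y = self._buf[read]
        self._write = (self._write + 1) % len(self._buf)
        return y


if __name__ == "__main__":
    line = AudioDelayLine(max_delay_samples=4800)  # up to 100 ms at 48 kHz
    line.set_delay(3)
    print([line.process(x) for x in [1.0, 2.0, 3.0, 4.0, 5.0]])
```

Changing the delay abruptly, as `set_delay` does here, creates a discontinuity in the audio; a practical unit would typically ramp or crossfade between delay values.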

One nonintrusive method of tagging is to use watermarking technology to embed timing data within the video itself. Tektronix had such a device called the AVDC100 audio-to-video delay corrector on the market years ago. The watermark was claimed to be permanent, surviving compression and other types of video processing. Unfortunately, the unit has been discontinued, apparently due to lack of interest in the product.

Other products use various proprietary schemes to measure, but not actively correct, video delays. The JDSU DTS-330 real-time transport stream analyzer with SyncCheck provides lip sync analysis when used with a special test tape video source. The K-WILL QuMax-2000 generates a “Video DNA” identifying signal that can measure the timing of video signals in a plant or even at separate locations. The Pixel Instruments LipTracker detects a face in the video and then compares selected sounds in the audio with the mouth shapes that create them in the video. The relative timing of these sounds and the corresponding mouth movements is analyzed to produce a measurement of the lip sync error.

Several units can correct varying delays automatically, such as the Pixel AD3100 audio delay and synchronizer, which provides compensation based on a control input from a compatible video frame synchronizer. It can also automatically correct independent variable delay sources by interfacing with the company's DD2100 video delay detector, which samples the video at two points in a system and then provides a control signal to the AD3100 audio delay unit. Similarly, the Sigma Electronics Arbalest system uses a proprietary technology to provide automatic video delay detection and audio compensation in a system.
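How these products compare the two sampling points is proprietary. As a generic illustration only (not the algorithm of the DD2100, the Arbalest or any other product), the delay between two points can be estimated by reducing each frame to a simple signature, such as its average luma, and finding the lag at which the downstream signature sequence best matches the upstream one. The sketch below uses Pearson correlation over a bounded range of lags; the function names and the choice of signature are assumptions.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den else 0.0


def estimate_delay_frames(upstream, downstream, max_lag):
    """Lag (in frames) at which the downstream signature best matches upstream."""
    best_lag, best_score = 0, -math.inf
    for lag in range(max_lag + 1):
        overlap = min(len(upstream), len(downstream) - lag)
        if overlap < 2:
            break
        score = pearson(upstream[:overlap], downstream[lag:lag + overlap])
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


if __name__ == "__main__":
    # Per-frame average luma at the upstream point (arbitrary test values).
    up = [16, 40, 90, 200, 180, 60, 30, 120, 220, 100, 50, 20, 75, 150, 35]
    down = [16, 16, 16] + up[:-3]    # the same video, 3 frames later
    print(estimate_delay_frames(up, down, max_lag=6))
```

The resulting frame count could then drive an audio delay, in the spirit of the control signal described above.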

Industry activities to address solutions

Aside from a handful of proprietary solutions, no standard solution has yet been described. It is becoming apparent that end-to-end solutions are needed, and several trade groups are actively studying the problem. SMPTE has created, within the S22 Committee on Television Systems Technology, an Ad Hoc Group on Lip Sync Issues to address the problem and produce guideline documents. Work on a coordinated studio-centric solution will probably include problem assessment, current practices, control signals, and known and potential solutions.

The ATSC's recently developed strategic plan notes that although the AC-3 digital audio standard is mature, implementations have varied, in particular with regard to lip sync and sound levels. The ATSC Technology and Standards Group on Video and Audio Coding (TSG/S6) has thus been directed to look into these issues, and has established two working groups to gather implementation data and report back with recommendations. It is believed that the group will coordinate with SMPTE and concentrate on emission stream issues.

Other groups are studying the issue, as well. In Canada, the World Broadcasting Unions International Satellite Operations Group (WBU-ISOG) has conducted tests on satellite encoders and decoders. An EBU audio group has performed tests on SDTV receivers. In Japan, the Japan Electronics and Information Technology Industries Association (JEITA) IEC-TC100 has started investigations on TV receiving devices.

The entire problem is quite complex when one also considers the effects of editing, voice-overs and other routine operations. In addition, practical feedback from measurement to the controlling systems must be designed carefully, or the correction loop itself could become unstable. Beyond the engineering challenges, there is also potential for disagreement, both technical and legal. Let's hope that the motivation for a better viewer experience trumps that and provides a genuine improvement.
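The stability concern can be illustrated with the simplest possible correction loop. Assume, hypothetically, that each measurement of the lip sync error is exact and arrives before the next correction, and that the controller removes a fixed fraction g (the loop gain) of the measured error each time. The error then evolves as e[k+1] = (1 - g) e[k], which shrinks only for 0 < g < 2: gains above 1 overshoot and alternate in sign, and gains above 2 make the error grow. Real loops with measurement latency or noise typically need a smaller gain still.

```python
def simulate_correction(initial_error_ms, gain, steps):
    """Error history of a proportional A/V delay correction loop.

    Assumes exact, immediate measurements: each step subtracts
    gain * (current error) from the error.
    """
    errors = [initial_error_ms]
    error = initial_error_ms
    for _ in range(steps):
        error -= gain * error
        errors.append(error)
    return errors


if __name__ == "__main__":
    print(simulate_correction(80.0, 0.5, 3))  # [80.0, 40.0, 20.0, 10.0]
    print(simulate_correction(80.0, 2.5, 3))  # [80.0, -120.0, 180.0, -270.0]
```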

Aldo Cugnini is a consultant in the digital television industry.

Send questions and comments to: aldo.cugnini@penton.com
