New Rec for Studio Video Over IP Approved

Editor’s note: The headline on Wes’s contribution originally said a new “standard” had been approved. TV Technology regrets the error.

ORANGE, CONN.—On Oct. 21, the Video Services Forum published TR-03 “Transport of Uncompressed Elementary Stream Media over IP” to define an interoperable way to format and identify media streams for IP network transport. This recommendation was developed specifically to meet the need within modern media production facilities for an efficient, flexible method of transporting uncompressed signals of various types.

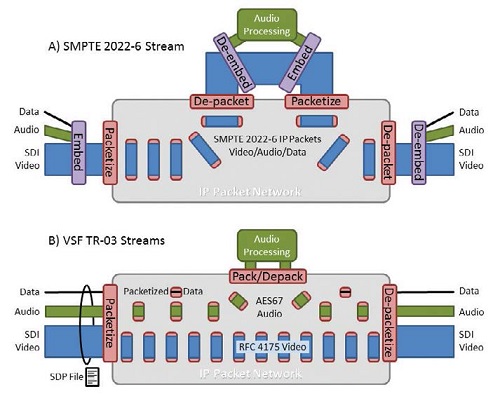

Previously approved standards, such as SMPTE 2022-6, require video signals to first be formatted into SDI (Serial Digital Interface) streams before they are placed into IP packets. Related audio and metadata signals are commonly embedded in the horizontal and vertical ancillary spaces (HANC and VANC) within the SDI signal. This is somewhat inefficient, because the individual signals must be de-embedded from the SDI stream before they can be processed, and then possibly re-embedded before being passed along to the next step in the processing workflow.

DIFFERENT APPROACHES

With TR-03, each media type is transported individually as an elementary stream. In the case of audio signals, the raw audio samples are placed directly into RTP/IP packets using AES67. For video, pixels from the active picture area are placed directly into RTP/IP packets in accordance with IETF RFC 4175. Metadata is handled using an IETF Internet-Draft, “draft-ietf-payload-rtp-ancillary-02.”
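To make the RFC 4175 video mapping more concrete, here is a minimal Python sketch of how one segment of a line of 4:2:2, 10-bit active video might be packed into an RFC 4175 payload. It assumes one line segment per packet, leaves out the 12-byte RTP header, and uses hypothetical helper names (`pack_pgroup_422_10bit`, `build_rfc4175_payload`); it illustrates the payload layout rather than a complete TR-03 sender.

```python
import struct


def pack_pgroup_422_10bit(cb: int, y0: int, cr: int, y1: int) -> bytes:
    """Pack one 4:2:2 10-bit pixel group (two pixels) into 5 octets.

    RFC 4175 orders the samples Cb, Y0, Cr, Y1, each 10 bits, packed
    big-endian with no padding (4 x 10 bits = 40 bits = 5 octets).
    """
    for sample in (cb, y0, cr, y1):
        if not 0 <= sample < 1024:
            raise ValueError("10-bit sample out of range")
    word = (cb << 30) | (y0 << 20) | (cr << 10) | y1
    return word.to_bytes(5, "big")


def build_rfc4175_payload(ext_seq: int, line_no: int, offset: int,
                          pgroups: list, field: int = 0) -> bytes:
    """Build an RFC 4175 payload carrying a single line segment.

    Hypothetical helper: the RTP header itself is not included.
    """
    data = b"".join(pack_pgroup_422_10bit(*pg) for pg in pgroups)
    header = struct.pack(
        "!HHHH",
        ext_seq & 0xFFFF,                     # extended sequence number (high 16 bits)
        len(data),                            # length of this segment in octets
        (field << 15) | (line_no & 0x7FFF),   # F bit (field) + 15-bit line number
        offset & 0x7FFF,                      # C bit = 0: no further line headers follow
    )
    return header + data


# Two black pixel groups (Y=64, Cb=Cr=512) on line 21, starting at pixel 0.
payload = build_rfc4175_payload(0, 21, 0, [(512, 64, 512, 64)] * 2)
print(len(payload), payload.hex())
```

Only picture samples appear in the payload; there is no HANC or VANC space to skip over or fill, which is the property the article highlights.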

Chuck Meyer, chief technology officer, production for Grass Valley, noted the significance of the new recommendation. “TR-03 is very much about essence, and really separating out the media types, be it video, audio, metadata and timing events,” he said. “It’s just so important to keep those separate.”

Fig. 1 shows the different approaches used by 2022-6 and TR-03. Part A shows audio and metadata being embedded into an SDI stream that is then packetized using 2022-6 and sent through an IP network. Part B shows audio, video and metadata each being packetized into separate IP streams in accordance with TR-03.

The crucial difference between these two approaches is illustrated by the steps needed to perform an audio processing function, such as loudness adjustment. In the 2022-6 process, the entire video stream must first be depacketized, and then the audio signal must be de-embedded from the SDI stream. When processing is complete, the audio must be re-embedded in the SDI signal before it can once again be packetized. Contrast this with the TR-03 process shown in Part B of Fig. 1, where only the packets containing audio samples need to be depacketized, processed and then repacketized back into an IP stream. Not only does this remove the need for audio embedding and de-embedding, it also greatly reduces the volume of packet traffic that must be routed to the audio processor. As an added benefit, TR-03 packetizes only the active video pixels, which reduces the amount of network traffic generated by uncompressed video.
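A rough back-of-the-envelope calculation shows the size of the active-pixel saving. This sketch assumes a 1080-line HD format at 30 frames per second (ignoring the 1/1.001 rate variants) with 4:2:2, 10-bit sampling, where the full HD-SDI raster is 2200 x 1125 pixel positions including blanking. It ignores RTP/UDP/IP header overhead, and it does not count the audio and metadata that SDI carries in its blanking intervals but TR-03 sends as separate streams.

```python
# 4:2:2 at 10 bits: every pixel has a 10-bit Y sample, and each pair of
# pixels shares one 10-bit Cb and one 10-bit Cr sample -> 20 bits per pixel.
BITS_PER_PIXEL_422_10BIT = 20


def raster_bitrate(pixels_per_line: int, lines_per_frame: int,
                   frames_per_second: int, bits_per_pixel: int) -> int:
    """Bits per second needed to carry every pixel position of a raster."""
    return pixels_per_line * lines_per_frame * frames_per_second * bits_per_pixel


# Full HD-SDI raster (active picture plus horizontal and vertical blanking).
sdi_bps = raster_bitrate(2200, 1125, 30, BITS_PER_PIXEL_422_10BIT)

# Active picture only, as carried by RFC 4175 under TR-03.
active_bps = raster_bitrate(1920, 1080, 30, BITS_PER_PIXEL_422_10BIT)

saving = 1 - active_bps / sdi_bps

print(f"SDI raster:    {sdi_bps / 1e9:.3f} Gb/s")
print(f"Active pixels: {active_bps / 1e9:.3f} Gb/s")
print(f"Reduction:     {saving:.1%}")
```

Under these assumptions the active picture needs about 1.244 Gb/s versus the familiar 1.485 Gb/s HD-SDI rate, a reduction of roughly 16 percent before packet overhead is added back.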

TIMING AND IDENTIFICATION

Two important elements underpin TR-03: timing and identification. Timing is handled by distributing a common clock to every node on the network using IEEE 1588 Precision Time Protocol (PTP), and by referencing all of the media clocks to the SMPTE Epoch defined in SMPTE 2059-1. Streams are identified and, more importantly, associated with each other using SDP (Session Description Protocol, IETF RFC 4566) and the SDP Grouping Framework (IETF RFC 5888). With these capabilities, audio and video streams can be identified as belonging to the same lip sync group and referenced to a common clock, so that they can be sent through a network as separate IP packet streams and then synchronized at their destination. Going back to Part B in Fig. 1, once the video, audio and data streams are synchronized at their source using a common clock, the streams can take different paths, with different amounts of delay, and still be realigned to the common clock at the output. An SDP file is created to indicate that the video, audio and data signals shown in Part B of Fig. 1 belong to the same lip sync group; the information in this file tells the receiving device where to find the streams and how to synchronize them.
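The two mechanisms can be sketched in a few lines of Python. This is a hypothetical illustration, not text from TR-03: it assumes each sender derives its RTP timestamp as the number of media-clock ticks elapsed since the SMPTE Epoch (the PTP epoch, 1970-01-01 00:00:00 TAI), modulo 2^32, and it builds an SDP description that uses the RFC 5888 "LS" (lip synchronization) group. The multicast addresses, ports, payload types, `mid` labels and the `smpte291/90000` encoding name for the metadata stream are all assumptions chosen for illustration.

```python
RTP_TIMESTAMP_MODULUS = 2 ** 32  # RTP timestamps are 32 bits wide and wrap


def rtp_timestamp(ptp_seconds: int, ptp_nanoseconds: int, clock_rate: int) -> int:
    """Map a PTP time (seconds + nanoseconds since the epoch) to an RTP
    timestamp at the given media clock rate, using integer arithmetic only."""
    ticks = ptp_seconds * clock_rate + (ptp_nanoseconds * clock_rate) // 1_000_000_000
    return ticks % RTP_TIMESTAMP_MODULUS


def lip_sync_sdp(origin_ip: str, streams: list) -> str:
    """Build an SDP session that groups all streams for lip sync (RFC 5888 'LS')."""
    lines = [
        "v=0",
        f"o=- 1 1 IN IP4 {origin_ip}",
        "s=TR-03 lip sync group (hypothetical example)",
        "t=0 0",
        "a=group:LS " + " ".join(s["mid"] for s in streams),
    ]
    for s in streams:
        lines.append(f"m={s['media']} {s['port']} RTP/AVP {s['pt']}")
        lines.append(f"c=IN IP4 {s['group']}/32")  # multicast address with TTL
        lines.append(f"a=rtpmap:{s['pt']} {s['encoding']}")
        if "fmtp" in s:
            lines.append(f"a=fmtp:{s['pt']} {s['fmtp']}")
        lines.append(f"a=mid:{s['mid']}")  # ties this stream to the LS group
    return "\r\n".join(lines) + "\r\n"  # SDP lines end in CRLF


# Hypothetical streams matching Part B of Fig. 1: video, audio and metadata.
STREAMS = [
    {"mid": "V", "media": "video", "port": 5004, "pt": 96, "group": "239.10.10.1",
     "encoding": "raw/90000",  # RFC 4175 uncompressed video
     "fmtp": "sampling=YCbCr-4:2:2; width=1920; height=1080; depth=10; colorimetry=BT709-2"},
    {"mid": "A", "media": "audio", "port": 5004, "pt": 97, "group": "239.10.10.2",
     "encoding": "L24/48000/2"},  # 24-bit linear PCM, 48 kHz, stereo
    {"mid": "M", "media": "video", "port": 5004, "pt": 100, "group": "239.10.10.3",
     "encoding": "smpte291/90000"},  # assumed name for the ancillary-data draft
]

# The same PTP instant yields a 90 kHz video timestamp and a 48 kHz audio
# timestamp that a receiver can map back to that one instant.
print(rtp_timestamp(1_445_000_000, 0, 90_000), rtp_timestamp(1_445_000_000, 0, 48_000))
print(lip_sync_sdp("192.0.2.10", STREAMS))
```

Because every timestamp counts from the same epoch on the same PTP clock, a receiver does not need the streams to arrive together; it can buffer each one and line them up by the instant their timestamps represent.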

When asked why a new recommendation is needed at this time, John Mailhot, CTO networking and infrastructure for Imagine Communications, said, “IP is a new infrastructure for the industry. 2022 has a place for interconnection between facilities and between rooms. The SVIP group was formed to address needs in the production studio. TR-03 has the flexibility that is a paramount need for this application.”

“TR-03 can deliver the promise of IP networks,” he said. “It creates an extensible way to organize video, audio and metadata that can deliver all these media types in a media-agnostic fashion.”

There already appears to be a great deal of interest in this new TR, at least according to Meyer. “People noticed my name on the list of contributors to TR-03 and are calling me out of the blue—this never happened to me before. They are saying ‘Hey Chuck, this is a really cool thing. I think I’m seeing how this could really help me with my 4K transitions. Can you give me more technical details?’”

A copy of the TR-03 Draft Recommendation can be downloaded at www.videoservicesforum.org/technical_recommendations.shtml.

Wes Simpson is a member of the SVIP team that developed TR-03 within the VSF, along with representatives from dozens of other companies within the media industry.


Wes Simpson is President of Telecom Product Consulting, an independent consulting firm that focuses on video and telecommunications products. He has 30 years of experience in the design, development and marketing of products for telecommunication applications. He is a frequent speaker at industry events such as IBC, NAB and VidTrans, is the author of the book Video Over IP and is a frequent contributor to TV Tech. Wes is a founding member of the Video Services Forum.