Next-generation broadcast: more bandwidth for the future

The development of new broadcast technologies that offer higher efficiency and/or performance is an ongoing quest, with researchers continually refining methods to achieve higher data throughput. As a result, the hardware and software used for media delivery will continue to evolve. On the broadcast side, modulation and compression are key components of a next-generation broadcast system.

Digital modulation

Digital modulation customarily takes the form of transmitting symbols, each mapped from a group of bits. These symbols can be sent using one of a variety of modulation schemes, including VSB, QAM and COFDM. Because reception is usually limited by channel noise, interference and distortion, a certain minimum carrier-to-noise (C/N) ratio is required for error-free reception. For this reason, the throughput of a channel is limited: the channel bandwidth caps the symbol rate, and the available C/N caps the number of different symbols (or constellation points) that a receiver can reliably tell apart.
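To make the bit-to-symbol mapping concrete, here is a minimal Python sketch, assuming a square 16QAM constellation with Gray coding on each axis. The names `map_16qam` and `bit_rate` and the particular level assignment are illustrative assumptions, not taken from any broadcast standard (real systems add interleaving, power normalization and other refinements).

```python
import math
from typing import List, Sequence

# Gray-coded mapping of two bits to one of four amplitude levels on one axis.
# Adjacent levels differ in exactly one bit, so a small noise error usually
# corrupts only one bit. (Illustrative assignment, not from a specific standard.)
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def map_16qam(bits: Sequence[int]) -> List[complex]:
    """Map groups of 4 bits to 16QAM symbols: 2 bits for I, 2 bits for Q."""
    if len(bits) % 4 != 0:
        raise ValueError("16QAM needs a multiple of 4 bits")
    symbols = []
    for i in range(0, len(bits), 4):
        b0, b1, b2, b3 = bits[i:i + 4]
        in_phase = GRAY_PAM4[(b0, b1)]
        quadrature = GRAY_PAM4[(b2, b3)]
        symbols.append(complex(in_phase, quadrature))
    return symbols


def bit_rate(symbol_rate: float, constellation_points: int, code_rate: float = 1.0) -> float:
    """Payload bit rate = symbols/s x bits per symbol x error-correction code rate."""
    return symbol_rate * math.log2(constellation_points) * code_rate


print(map_16qam([0, 0, 1, 0, 1, 1, 0, 1]))  # [(-3+3j), (1-1j)]
print(bit_rate(1e6, 16))          # 4000000.0
print(bit_rate(1e6, 256, 0.5))    # 4000000.0
```

The last two lines show the trade-off described above: doubling the bits per symbol only pays off if the C/N supports the denser constellation; otherwise the extra capacity is consumed by stronger error correction.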

But there are ways of pushing this limit, either through clever channel coding or by changing how transmit power reaches the receivers. New error-correction schemes are constantly being developed, pushing performance toward its theoretical limit and making higher-order constellations like 256QAM possible. Another way to maximize channel usage is to adopt a “cellular” approach, in which multiple transmitters blanket an area, each at lower power than a single high-power transmitter, but together delivering a stronger signal to receivers. The result is a higher C/N ratio and thus higher potential throughput.
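The link between C/N and throughput can be quantified with the Shannon capacity formula, capacity = B · log2(1 + C/N), where B is the channel bandwidth and C/N is a linear power ratio. The sketch below assumes an additive white Gaussian noise channel and treats C/N as the signal-to-noise ratio in the channel bandwidth; practical receivers need a few dB more than this bound. The 6 MHz bandwidth and the C/N values are illustrative assumptions.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, cn_db: float) -> float:
    """Upper bound on error-free bit rate for an AWGN channel (Shannon)."""
    cn_linear = 10 ** (cn_db / 10)  # convert dB to a power ratio
    return bandwidth_hz * math.log2(1 + cn_linear)


def min_cn_db(spectral_efficiency: float) -> float:
    """Smallest C/N (dB) that could theoretically support a given
    spectral efficiency in bits/s/Hz (the formula above, inverted)."""
    return 10 * math.log10(2 ** spectral_efficiency - 1)


# Illustrative: a 6 MHz channel received at 15 dB vs. 25 dB C/N, e.g. a
# distant high-power transmitter vs. a nearby low-power "cellular" one.
for cn in (15, 25):
    print(cn, "dB ->", round(shannon_capacity_bps(6e6, cn) / 1e6, 1), "Mb/s")

# Theoretical floor for 8 bits/s/Hz, the raw efficiency of 256QAM
# at one symbol per hertz: about 24.1 dB.
print(round(min_cn_db(8), 1))
```

A 10 dB improvement in C/N adds roughly 3.3 bits/s/Hz of theoretical capacity, which is why raising the received signal level, rather than only improving coding, is attractive.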

Adaptive transmission technologies are also gaining interest in the research community. The idea is to collect feedback of instantaneous channel-state information from a number of receivers and use it to dynamically modify characteristics of the transmission system, including output power, transmission architecture (i.e., the number and individual power of active transmitters) and even modulation parameters. Any one of these approaches would represent a radical change in broadcasting infrastructure and federal regulations, but could potentially yield a sizeable increase in available bandwidth.
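One way to picture the modulation side of this feedback loop is a transmitter that picks its modulation and code rate from the C/N values reported by receivers. The sketch below is hypothetical throughout: the mode table, its required-C/N thresholds, the 1 dB margin and the `coverage` policy are illustrative assumptions, not figures from any standard, and it ignores the power and transmitter-architecture adaptations mentioned above.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Mode:
    name: str
    bits_per_symbol: int
    code_rate: float
    required_cn_db: float  # hypothetical decoding threshold

    @property
    def efficiency(self) -> float:
        return self.bits_per_symbol * self.code_rate


# Hypothetical mode table; thresholds are illustrative only.
MODES = [
    Mode("QPSK 1/2", 2, 1 / 2, 3.0),
    Mode("16QAM 1/2", 4, 1 / 2, 9.0),
    Mode("64QAM 2/3", 6, 2 / 3, 16.0),
    Mode("256QAM 3/4", 8, 3 / 4, 23.0),
]


def choose_mode(reported_cn_db: Sequence[float], coverage: float = 1.0,
                margin_db: float = 1.0) -> Optional[Mode]:
    """Pick the most efficient mode that at least `coverage` (0..1) of the
    reporting receivers can decode, keeping a safety margin."""
    if not reported_cn_db:
        raise ValueError("no channel-state reports")
    ranked = sorted(reported_cn_db)
    # Receiver whose C/N must be met; coverage=1.0 means the worst receiver.
    idx = min(math.floor((1 - coverage) * len(ranked)), len(ranked) - 1)
    target = ranked[idx]
    for mode in sorted(MODES, key=lambda m: m.efficiency, reverse=True):
        if mode.required_cn_db + margin_db <= target:
            return mode
    return None  # even the most robust mode would fail


reports = [5.0, 12.0, 20.0, 30.0]
print(choose_mode(reports).name)                # QPSK 1/2
print(choose_mode(reports, coverage=0.5).name)  # 64QAM 2/3
```

Serving the worst receiver is the conservative broadcast choice; lowering `coverage` trades reception at the weakest receivers for higher throughput.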

Video compression

As of this writing, the latest MPEG compression specification, High-Efficiency Video Coding (HEVC), is due to be released as a final draft international standard, called MPEG-H Part 2 and ITU-T H.HEVC (also referred to as H.265). HEVC promises to reduce the bit rate needed for a given picture quality by up to 50 percent compared with AVC/H.264.

The HEVC specification is organized into profiles, tiers and levels, allowing equipment of varying complexity and cost to fit into well-defined architectures. As with its MPEG predecessors, an HEVC profile is a subset of the entire bit-stream syntax, and a level is a specified set of constraints imposed on values of the syntax elements in the bit stream. The constraints may be limits on values, or arithmetic combinations of values (e.g., number of pixels per frame multiplied by number of frames per second). Currently, three HEVC profiles have been established: Main, Main 10 (with up to 10 bits per color), and Main Still Picture.

HEVC introduces the concept of tiers within each profile, each carrying its own constraints; tiers were established to deal with applications that differ in maximum bit rate. A level specified for a lower tier is more constrained than the same level specified for a higher tier, and within a tier, a lower level is more constrained than a higher one.
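As a worked illustration of level and tier constraints, the sketch below finds the lowest HEVC level that accepts a given picture size, frame rate and bit rate. The figures are transcribed from the H.265 level-limit tables (Annex A) for levels 4 through 5.1, with maximum bit rates as given for the Main and Main 10 profiles, and should be checked against the edition of the standard in use. Only three constraints are tested, so this is a sketch, not a conformance check; `lowest_level` is a hypothetical helper name.

```python
from typing import NamedTuple, Optional


class LevelLimits(NamedTuple):
    level: str
    max_luma_ps: int       # maximum luma picture size, in samples
    max_luma_sr: int       # maximum luma sample rate, in samples/s
    max_br_main_kbps: int  # maximum bit rate, Main tier (Main/Main 10 profiles)
    max_br_high_kbps: int  # maximum bit rate, High tier


# Subset of the H.265 Annex A level limits (verify against the standard).
LEVELS = [
    LevelLimits("4", 2_228_224, 66_846_720, 12_000, 30_000),
    LevelLimits("4.1", 2_228_224, 133_693_440, 20_000, 50_000),
    LevelLimits("5", 8_912_896, 267_386_880, 25_000, 100_000),
    LevelLimits("5.1", 8_912_896, 534_773_760, 40_000, 160_000),
]


def lowest_level(width: int, height: int, fps: float, bitrate_kbps: float,
                 tier: str = "Main") -> Optional[str]:
    if tier not in ("Main", "High"):
        raise ValueError("tier must be 'Main' or 'High'")
    picture_size = width * height
    # An "arithmetic combination" constraint: pixels/frame x frames/s.
    sample_rate = picture_size * fps
    for lim in LEVELS:  # ordered from most to least constrained
        max_br = lim.max_br_main_kbps if tier == "Main" else lim.max_br_high_kbps
        if (picture_size <= lim.max_luma_ps
                and sample_rate <= lim.max_luma_sr
                and bitrate_kbps <= max_br):
            return lim.level
    return None  # outside the levels listed here


print(lowest_level(1920, 1080, 60, 15_000))               # 4.1
print(lowest_level(1920, 1080, 60, 40_000))               # 5.1
print(lowest_level(1920, 1080, 60, 40_000, tier="High"))  # 4.1
```

The last two calls show the role of tiers: the same 1080p60 stream at 40 Mb/s needs Level 5.1 in the Main tier but fits Level 4.1 in the High tier.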

Audio compression

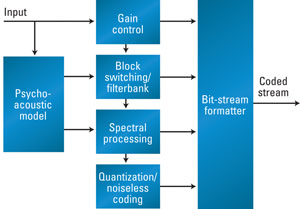

High-Efficiency Advanced Audio Coding (HE-AAC), as shown in Figure 1, has already been adopted into various transmission systems, including ATSC, DVB and ISDB. HE-AAC is based on the MPEG-2 AAC Low-Complexity profile in combination with Spectral Band Replication (SBR). MPEG-2 AAC is non-backwards-compatible with the other parts of MPEG-2 and MPEG-1 audio, including the so-called MP-3 format.

These earlier MPEG codecs, as well as AAC, use an architecture that combines a psycho-acoustic model, spectral processing and quantization. MPEG-1 and MPEG-2 spectral processing used mapped samples that are either sub-band samples or DCT-transformed sub-band samples. A psychoacoustic model creates a set of data used to control the quantizer and coding, wherein the production of coding artifacts is modeled at the encoder. The model relies on characteristics of the human auditory system, whereby quiet sounds within “critical bands” are masked by louder sounds.

Figure 1. MPEG Advanced Audio Coding incorporates elements from earlier MPEG standards.
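Masking can be illustrated with a deliberately simplified model. The sketch below is a toy, not the MPEG psychoacoustic model: it groups spectral components into critical bands using the Zwicker-Terhardt approximation of the Bark scale and treats any component more than a fixed `offset_db` below the loudest component in its band as masked. The 10 dB offset is a hypothetical value; real models use spreading functions across bands, tonality estimates and the absolute threshold of hearing.

```python
import math
from typing import Dict, List, Tuple

Component = Tuple[float, float]  # (frequency in Hz, level in dB)


def bark(freq_hz: float) -> float:
    """Critical-band rate in Bark (Zwicker & Terhardt approximation)."""
    return 13 * math.atan(0.00076 * freq_hz) + 3.5 * math.atan((freq_hz / 7500) ** 2)


def audible_components(components: List[Component],
                       offset_db: float = 10.0) -> List[Component]:
    """Keep components that are not masked by a louder one in the same band.
    offset_db is a hypothetical, fixed masking offset."""
    bands: Dict[int, List[Component]] = {}
    for freq, level in components:
        bands.setdefault(int(bark(freq)), []).append((freq, level))
    audible: List[Component] = []
    for members in bands.values():
        threshold = max(level for _, level in members) - offset_db
        audible.extend(c for c in members if c[1] >= threshold)
    return sorted(audible)


tones = [(1000.0, 80.0), (1050.0, 50.0), (4000.0, 40.0)]
# The 1050 Hz tone shares a critical band with the louder 1000 Hz tone.
print(audible_components(tones))  # [(1000.0, 80.0), (4000.0, 40.0)]
```

An encoder can spend fewer bits on components the model flags as masked, since the coding noise they carry is predicted to be inaudible.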

MPEG-2 AAC also uses a psychoacoustic model, spectral processing and quantization, but with newer tools. A modified discrete cosine transform (MDCT) is used in the spectral processing step, and noiseless (Huffman) coding is applied to the quantized spectral data.
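The MDCT's key property, time-domain aliasing cancellation (TDAC), can be checked in a few lines. The sketch below uses a direct matrix implementation rather than the fast FFT-based form an encoder would use: it turns each block of 2N windowed samples into N coefficients, and overlap-adding the inverse transforms (hop size N) reconstructs the input exactly. It assumes a sine window and a small N for readability; AAC long blocks use 2N = 2048 with either a sine or a Kaiser-Bessel-derived window. The 2/N inverse scaling is the choice that makes reconstruction exact with this window.

```python
import numpy as np


def mdct_basis(N: int) -> np.ndarray:
    """(2N x N) kernel cos[pi/N (n + 1/2 + N/2)(k + 1/2)]; direct O(N^2) form."""
    n = np.arange(2 * N)[:, None]
    k = np.arange(N)[None, :]
    return np.cos(np.pi / N * (n + 0.5 + N / 2) * (k + 0.5))


def sine_window(N: int) -> np.ndarray:
    """Sine window; satisfies the Princen-Bradley condition w[n]^2 + w[n+N]^2 = 1."""
    n = np.arange(2 * N)
    return np.sin(np.pi * (n + 0.5) / (2 * N))


def analyze(x: np.ndarray, N: int) -> list:
    """Windowed MDCT of 50%-overlapping blocks of 2N samples."""
    if len(x) % N:
        raise ValueError("signal length must be a multiple of N")
    C, w = mdct_basis(N), sine_window(N)
    padded = np.concatenate([np.zeros(N), x, np.zeros(N)])
    return [(w * padded[i:i + 2 * N]) @ C for i in range(0, len(x) + 1, N)]


def synthesize(frames: list, N: int) -> np.ndarray:
    """Inverse MDCT of each block, windowed and overlap-added (TDAC)."""
    C, w = mdct_basis(N), sine_window(N)
    out = np.zeros((len(frames) + 1) * N)
    for j, coeffs in enumerate(frames):
        out[j * N:j * N + 2 * N] += w * (C @ coeffs) * (2 / N)
    return out[N:-N]  # drop the zero padding


rng = np.random.default_rng(0)
signal = rng.standard_normal(8 * 16)
coeffs = analyze(signal, N=16)
print(len(coeffs[0]))                                 # 16 coefficients per 32-sample block
print(np.allclose(synthesize(coeffs, N=16), signal))  # True
```

Each block is critically sampled (N outputs for N new input samples), yet the aliasing introduced in one block is cancelled by its neighbor, which is why the MDCT suits a transform codec like AAC.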

Used in HE-AAC, SBR is a tool that saves bit rate by allowing the codec to transmit a bandwidth-limited audio signal. The decoder re-synthesizes the missing high-frequency harmonics, which were truncated before coding in order to reduce the data rate, from the transmitted low band. The balance of tonal and noise-like components in the regenerated band is controlled by adaptive inverse filtering, as well as by the optional addition of noise and sinusoids. Side information in the bit stream assists in the reconstruction process. Typical data rates used for HE-AAC are 48 kb/s to 64 kb/s for stereo and 160 kb/s for 5.1-channel signals.
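The core idea, rebuilding a missing high band from the transmitted low band plus a little side information, can be shown with a toy spectral copy-up. This is not the SBR algorithm, which works in a QMF filter bank over time-frequency tiles and adds the inverse filtering and noise/sinusoid tools described above; the band count, the cyclic patching and the function names are assumptions chosen only to illustrate envelope side information.

```python
import numpy as np


def encode_envelope(spectrum: np.ndarray, cutoff: int, n_bands: int) -> np.ndarray:
    """Encoder side (toy): energy of each high-band region above `cutoff`."""
    return np.array([np.sum(np.abs(b) ** 2)
                     for b in np.array_split(spectrum[cutoff:], n_bands)])


def regenerate_high_band(low: np.ndarray, total_len: int,
                         envelope: np.ndarray) -> np.ndarray:
    """Decoder side (toy): copy the low band upward, then scale each region
    so that its energy matches the transmitted envelope."""
    patch = np.resize(low, total_len - len(low))  # repeats `low` cyclically
    shaped = []
    for region, target in zip(np.array_split(patch, len(envelope)), envelope):
        energy = np.sum(np.abs(region) ** 2)
        gain = np.sqrt(target / energy) if energy > 0 else 0.0
        shaped.append(region * gain)
    return np.concatenate([low, *shaped])


rng = np.random.default_rng(1)
full = rng.standard_normal(64)  # stand-in for one frame of spectral values
side_info = encode_envelope(full, cutoff=16, n_bands=4)
decoded = regenerate_high_band(full[:16], 64, side_info)
print(np.allclose(encode_envelope(decoded, 16, 4), side_info))  # True
```

Here only 16 of the 64 values plus 4 envelope energies would need to be sent. The fine structure of the regenerated band is not the original, but its spectral envelope is, which reflects the premise behind SBR: at high frequencies the ear is less sensitive to exact fine structure than to the spectral envelope.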

HE-AAC version 2 (v2) combines HE-AAC with Parametric Stereo (PS). The PS coding tool captures the stereo image of the audio input signal with a small number of parameters, requiring only a small data overhead. Using these parameters together with a monaural downmix of the stereo input signal generated by the tool, the decoder is able to regenerate the stereo signal. The PS tool is intended for low bit rates.
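A much-simplified version of the parametric stereo idea fits in a short sketch: the encoder sends a mono downmix plus one inter-channel level difference (ILD) per frequency band, and the decoder re-pans the mono signal band by band. This is a toy under stated assumptions; the real PS tool also transmits phase and inter-channel coherence parameters and uses a decorrelator, so here only in-phase, amplitude-panned sources are reconstructed exactly. The band count and function names are hypothetical.

```python
import numpy as np


def ps_encode(left: np.ndarray, right: np.ndarray, n_bands: int = 8):
    """Toy encoder: mono downmix plus one level difference (dB) per band."""
    mono = (left + right) / 2
    L, R = np.fft.rfft(left), np.fft.rfft(right)
    ild_db = []
    for bl, br in zip(np.array_split(L, n_bands), np.array_split(R, n_bands)):
        e_l, e_r = np.sum(np.abs(bl) ** 2), np.sum(np.abs(br) ** 2)
        ild_db.append(10 * np.log10((e_l + 1e-12) / (e_r + 1e-12)))
    return mono, np.array(ild_db)


def ps_decode(mono: np.ndarray, ild_db: np.ndarray):
    """Toy decoder: re-pan each band of the downmix according to its ILD.
    Gains sum to 2, so (left + right) / 2 again equals the downmix."""
    M = np.fft.rfft(mono)
    out_l, out_r = [], []
    for band, ild in zip(np.array_split(M, len(ild_db)), ild_db):
        q = 10 ** (ild / 20)  # amplitude ratio left/right
        out_l.append(band * 2 * q / (q + 1))
        out_r.append(band * 2 / (q + 1))
    n = len(mono)
    return (np.fft.irfft(np.concatenate(out_l), n=n),
            np.fft.irfft(np.concatenate(out_r), n=n))


rng = np.random.default_rng(2)
source = rng.standard_normal(1024)
left, right = source, 0.5 * source  # one source panned toward the left
mono, params = ps_encode(left, right)
l_hat, r_hat = ps_decode(mono, params)
print(np.round(params, 2))                                # about 6.02 dB in every band
print(np.allclose(l_hat, left), np.allclose(r_hat, right))  # True True
```

Eight numbers per frame stand in for the entire second channel, which illustrates why the PS overhead is small and why the tool targets low bit rates.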

Spatial Audio Object Coding (SAOC) is a recent MPEG-D coding tool (which can be implemented independently), in which the different sound sources within a transmission are treated as separate coding “objects.” MPEG SAOC for Personalized Broadcast Systems is one potential application that can provide audio-related interactivity to consumers. Interactivity for broadcast applications can enable users to adjust TV sound according to individual preferences, or can even offer an audio service for the hearing-impaired without an additional audio channel.

Broadcasting with SAOC could allow users to adjust the volume of single audio objects within movies, TV shows or even live transmissions. For example, commentator and ambient sound levels during a sports broadcast could be adjusted by the consumer, as one means to improve intelligibility to suit one’s own hearing capability.
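The object-level control described above can be sketched in simplified form. Loosely in the spirit of SAOC, the toy encoder below sends a single downmix plus the energy of each object in each frequency band, and the decoder rescales each band of the downmix according to the user's gain for each object. This is a hypothetical illustration, not the MPEG-D SAOC algorithm: the band layout, the energy-share weighting and the function names are assumptions, and separation is only clean when objects occupy different bands.

```python
from typing import Dict

import numpy as np


def saoc_encode(objects: Dict[str, np.ndarray], n_bands: int = 4):
    """Toy encoder: downmix all objects, record each object's energy per band."""
    downmix = sum(objects.values())
    energies = {name: np.array([np.sum(np.abs(b) ** 2)
                                for b in np.array_split(np.fft.rfft(sig), n_bands)])
                for name, sig in objects.items()}
    return downmix, energies


def saoc_render(downmix: np.ndarray, energies: Dict[str, np.ndarray],
                gains: Dict[str, float]) -> np.ndarray:
    """Toy decoder: scale each band of the downmix by the energy-weighted user
    gains. Objects missing from `gains` keep a gain of 1.0."""
    n_bands = len(next(iter(energies.values())))
    bands = np.array_split(np.fft.rfft(downmix), n_bands)
    out = []
    for i, band in enumerate(bands):
        total = sum(e[i] for e in energies.values())
        if total > 0:
            weight = sum(gains.get(name, 1.0) * e[i] / total
                         for name, e in energies.items())
        else:
            weight = 1.0
        out.append(band * weight)
    return np.fft.irfft(np.concatenate(out), n=len(downmix))


t = np.arange(256)
commentator = np.sin(2 * np.pi * 5 * t / 256)  # low-frequency stand-in
ambience = np.sin(2 * np.pi * 110 * t / 256)   # high-frequency stand-in
downmix, params = saoc_encode({"commentator": commentator, "ambience": ambience})
commentary_only = saoc_render(downmix, params, {"ambience": 0.0})
print(np.allclose(commentary_only, commentator, atol=1e-9))  # True
```

With all gains left at 1.0 the decoder returns the downmix unchanged, so a listener who does not interact still hears the broadcaster's own mix.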

Hybrid TV is yet another area that is developing quickly. Combining a high-bandwidth, free OTA path with an Internet side channel, Hybrid TV makes new services available to home and mobile viewers. Specifications for Hybrid Broadcast Broadband TV (HbbTV) and other systems have already been published, with some trial services already deployed in Europe and Asia.

New tools to stay competitive

Transmission, modulation and compression schemes are being updated at such a pace that ongoing infrastructure updates are required to stay competitive with other forms of media delivery. The challenge is to adopt new technologies with as much backward compatibility as possible, so that consumers are not forced to constantly upgrade their hardware. From that standpoint, the entire media chain would benefit from technologies that support “soft” equipment upgrades, which requires close cooperation between broadcasters and consumer electronics companies.

—Aldo Cugnini is a consultant in the digital television industry.
