Perspectives, Panoramas, Processing

In life, we are surrounded by cues that make us aware of how we fit in a 3D space. Many of our senses and our reactions to that input are based on survival instincts—the ability to hear someone walk up behind us, to see movement in our peripheral vision, to sense our orientation when we move our bodies or swivel our heads. Our senses produce an awesome amount of data that we seamlessly interpret as we go through the day, getting our morning coffee, driving, walking through crowds, diving into swimming pools and everything that goes along with being a human in the world.

As the technologies and methods used in motion picture storytelling have evolved over more than a century of work, we've learned a new language, a new way to interpret the world around us based on a window into another world: the screen. On that screen, we've become accustomed to jumps in location, perspective, time and space. The screen is sometimes supplemented with surround sound, but usually we're left to our imagination to weave the data we get into human stories.

Close-ups, wide shots, panning, zooming, forced perspective, cutting and many other techniques are established parts of the storyteller's toolkit. Then along come virtual reality and 360-degree video, and suddenly we have a tendency to fall over reaching for things that aren't there. We lose perspective and we are lost.

While there are differences between VR and 360-degree content, both media deliver immersive experiences that, like "reality," are able to show events taking place all around the viewer. Because VR/360-degree imagery more closely represents the way humans experience the world, and because its immersiveness is further removed from the techniques of traditional filmmaking, motion picture producers struggle with how to harness it in the service of telling stories.

LET'S GET PHYSICAL

There are several disconnects for us as viewers of virtual reality experiences. A primary one is that we do not physically navigate our way through the world in the VR viewer; we can spin around and look up or down, but we don't have all of the normal cues leading up to our appearance in the environment. We didn't walk into the scene, so we have to look around to get our bearings as if we just teleported in.

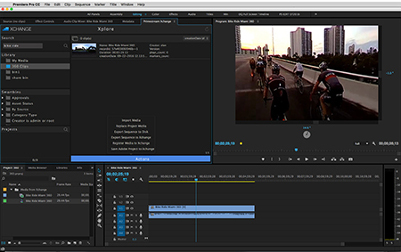

Primestream's Xchange Suite now includes support for equirectangular VR/360-degree asset management, enabling media playback and review, with "spatial/360" visual marker annotations to highlight areas of interest in a 360-degree space. Pictured is the Xchange Extension Panel for Adobe Premiere Pro Creative Cloud.

The next obstacle: we can't really move around in 3D space. We are restricted to turning our heads to see what's in the 180-degree hemisphere or 360-degree sphere of space directly around us. VR may give viewers some agency to navigate an environment, but it's generally not in the typical manner of walking over to something you see. So we're a little lost. As a result, it takes us time to orient ourselves in what is essentially a static scene with action taking place somewhere that we have to find.

VR is an evolving storytelling medium. The techniques directors have used for more than a century to guide viewers of 2D films are generally not applicable to storytelling in a VR experience. Directors can no longer rely on the fast cuts, zooming and pacing that have worked so well for so long. As a result, too much emphasis is sometimes placed on the novelty of the VR event and too little on inventing new techniques to tell stories in a changing world.

There is a lot of experimentation going on today, and much of it is on the capture side, figuring out how to collect better, more immersive images and stitch them together. We at Primestream are dedicated to managing those files. At its most basic, our tools enable a process where images are captured, descriptive metadata is added to those images, and then a workflow is organized around them.

This puts us right in the middle of efforts to understand how to better tell stories with these new assets, and of discussions about the creative process. As creative tools like Adobe Premiere Pro evolve to meet the needs of the editors using them, we are evolving methods to manage the data and metadata that add value to those captured images.

360-DEGREE MAM

Media asset management (MAM) providers and NLE manufacturers have been chasing ever-changing formats for the past few years, so our workflows have evolved to include format independence, but there is always a limit to the assumptions manufacturers have made about the files they are processing.

A first step to handling 360-degree material is understanding how to deal with equirectangular files (360-degree spherical panoramas). A second step is understanding where there might be VR- or 360-degree-specific information that has been added to a standard format like MPEG. Finally, in order to facilitate an end-to-end workflow, it is important to forge partnerships and develop standards that will be supported in both capture and finishing toolsets.
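To make the equirectangular layout concrete, here is a minimal sketch of how a viewing direction maps to a pixel in such a frame. The function name and the angle convention (yaw 0 at the image center, positive to the right; pitch +90 at the top edge) are assumptions chosen for illustration, not a description of any particular camera, file format or product.

```python
def direction_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to (x, y) in an equirectangular frame.

    Assumed convention (hypothetical, for illustration only):
      yaw   -180..180 degrees, 0 = image center, positive = right
      pitch  -90..90 degrees, +90 = top edge, -90 = bottom edge
    """
    if not -90.0 <= pitch_deg <= 90.0:
        raise ValueError("pitch must be between -90 and 90 degrees")

    # Wrap yaw into [-180, 180) so that 180 and -180 land on the same column.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0

    # Longitude and latitude map linearly to the horizontal and vertical axes.
    u = yaw / 360.0 + 0.5          # 0.0 at left edge, approaching 1.0 at right
    v = 0.5 - pitch_deg / 180.0    # 0.0 at top edge, 1.0 at bottom edge

    # Clamp so the bottom edge stays inside the image.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


# Straight ahead lands in the middle of a 3840x1920 frame.
center = direction_to_pixel(0, 0, 3840, 1920)
```

Because the mapping is linear in both angles, the frame is always twice as wide as it is tall, which is one quick way to recognize an equirectangular file.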

One of the challenges we face is that capture and delivery formats are in flux, as are standards for how those streams are delivered. Gone are the days when a SMPTE standard for SDI controlled your network infrastructure. Newly built or modernized facilities are embracing file-based workflows and IP-based delivery.

With updates announced at this month's IBC conference, project-centric workflows enable Primestream users and teams to intuitively create, share and collaborate on projects inside Xchange Suite, or via the Xchange extension panel within Adobe Premiere Pro CC. Users can now organize raw footage or easily switch between proxy and high-res source material for effective remote/off-site editing.

In one sense, getting started is simple because manufacturers are leveraging existing formats like MPEG-4 or H.264, but in order to create content that's more immersive, cameras are pushing the limits of what they can capture. If you were happy with 4K before, now you need that resolution in 360 degrees. Each lens, each "eye" on the camera, is capturing 4K that needs to be stitched into a seamless sphere into which the viewer can be inserted. Can 8K per lens be far behind? You also want 3D spatial sound to create an even more connected and immersive experience. Accomplishing all of this requires spatial data and other markers.

We can't force the market in a specific direction, but we will need to be there to support users when they arrive. We can participate in partnerships that seem to show directional leadership, like when a camera manufacturer and a codec supplier team up to create an end-to-end workflow, but ultimately we need to be reactive to changes in the marketplace that can come from anywhere. Will there be a shift from equirectangular format to cube maps, to pyramid maps, to something else? We will need to be there if and when that shift happens.

We are no longer engaged in tracking content segments simply with time-based markers; rather, content needs to be marked up in both time and space with directional data—not only when but also where the interesting content is.
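A marker that records both when and where might look something like the sketch below. The `SpatialMarker` fields, the function names and the yaw/pitch convention are all hypothetical, and the field of view is approximated as a simple cone around the viewing direction; this is an illustration of the idea, not any product's actual metadata schema.

```python
import math
from dataclasses import dataclass


@dataclass
class SpatialMarker:
    """Hypothetical marker: a time range plus a direction on the sphere."""
    label: str
    start_s: float    # when the area of interest begins, in seconds
    end_s: float      # when it ends, in seconds
    yaw_deg: float    # where: horizontal angle, -180..180
    pitch_deg: float  # where: vertical angle, -90..90


def angular_separation_deg(yaw1, pitch1, yaw2, pitch2):
    """Great-circle angle in degrees between two directions."""
    y1, p1, y2, p2 = map(math.radians, (yaw1, pitch1, yaw2, pitch2))
    cos_d = (math.sin(p1) * math.sin(p2)
             + math.cos(p1) * math.cos(p2) * math.cos(y1 - y2))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_d))))


def markers_in_view(markers, time_s, view_yaw_deg, view_pitch_deg, fov_deg=90.0):
    """Return markers active at time_s that fall inside the viewing cone."""
    half_fov = fov_deg / 2.0
    return [
        m for m in markers
        if m.start_s <= time_s <= m.end_s
        and angular_separation_deg(view_yaw_deg, view_pitch_deg,
                                   m.yaw_deg, m.pitch_deg) <= half_fov
    ]


markers = [
    SpatialMarker("door opens", 10.0, 20.0, yaw_deg=170.0, pitch_deg=0.0),
    SpatialMarker("stage", 0.0, 60.0, yaw_deg=0.0, pitch_deg=0.0),
]
# A viewer looking toward yaw -170 at 15 seconds is within 20 degrees of the door.
visible = [m.label for m in markers_in_view(markers, 15.0, -170.0, 0.0)]
```

Measuring the angle on the sphere, rather than comparing yaw values directly, keeps a marker at yaw 170 close to a viewer facing yaw -170, which a purely time-based marker or a flat pixel comparison would miss.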

We fully expect the requirements for VR/360-degree asset management to continue to evolve as people figure out how to use it to perfect their storytelling techniques over time, and we know that the creative tools will need to move quickly to keep up with that innovative re-imagining of how we tell stories. We are not there yet, but you can already see the early signs that we are creating an exciting new creative narrative.

David Schleifer is chief operating officer at Primestream.

This story first appeared on TVT's sister publication Creative Planet Network.
