Reframing the Object Store

For some time, we’ve thought mainly about how file-based storage is used to hold unstructured data, i.e., files containing moving (video) or static (photographic) images. Yet file-based storage has traditionally been suited to structured data, such as the information generated on personal computers or office workstations. This type of data can be appropriately organized, that is, “structured,” for applications such as email, discrete sets of “office” documents, and project-related applications.

As “media”-related data came into the workplace, that data was stored and managed the same way, despite having no true organization (or “structure”) and no “best practices” for managing it. This type of media-centric data became known as “unstructured” data, a term that persists today.

In the early days of digital graphics (generated as individual files), photographic images (also individual files) or video (as contiguous groupings of interrelated files), few realized how far digital media would expand over the past decade and more. Little work was done to prepare for this eventual quantum shift in storage needs and requirements. As professional video moved from linked sets of JPEG images to streamed “strings” of compressed data, the volume of files kept growing, both in the number of formats and in the quantity of actual content.

Some believed that asset management solutions might provide the needed organization for unstructured data. Those MAM-like processes, however, still relied on traditional file-based storage on physical media (tape, hard drives, etc.), with content driving many new features and functions. Hard disk capacities increased, metadata became more important, better caching methods were developed, and transfer bandwidths grew to handle the speeds and file sizes of this new era.

When content creation exploded, driven by digital cameras and personal mobile devices and coupled with social media connections that now seem second nature, it became apparent that the overhead of file-based storage created huge bottlenecks when moving media from one medium’s format to another. Another storage method was needed: “object-based storage.”

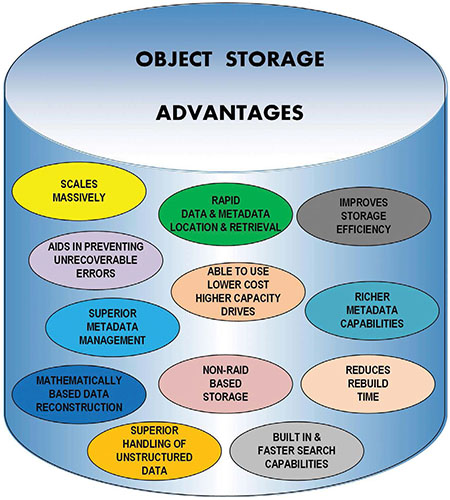

Fig. 1: Advantages in utilizing object storage for unstructured data in archiving, cloud and geo-dispersed applications.

ALTERNATIVE STORAGE

Object-based storage, which is specifically designed to handle unstructured data, is the newer alternative to file storage. This relatively recent “object technology” is built around the concept of extended metadata and collected sets of associated data. An object can be thought of as a container, a “wrapper-like” entity that captures the unstructured data together with its applicable bits-about-the-bits (aka “metadata”) and houses both in a storage space designed for easier retrieval than file-based storage (Fig. 1).


In object storage, each object is assigned a unique identifier that enables servers to retrieve it regardless of where it physically resides. This is a core principle of object storage, and it is how cloud-based data is managed on a global basis.
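To make the "container plus unique identifier" idea concrete, here is a minimal in-memory sketch. The class and method names (`StoredObject`, `FlatObjectStore`, `put`, `get`, `find`) are hypothetical and do not represent any vendor's API; a real object store would also map each ID to physical nodes or sites, which this sketch omits. It assumes Python 3.9 or later.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    """An object: the payload plus its descriptive metadata, under one ID."""
    data: bytes
    metadata: dict = field(default_factory=dict)
    object_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class FlatObjectStore:
    """Hypothetical in-memory object store with a flat namespace.

    There are no directories: every object is located by its unique ID alone.
    """

    def __init__(self) -> None:
        self._objects: dict[str, StoredObject] = {}

    def put(self, data: bytes, **metadata) -> str:
        # Wrap the payload and its metadata together, then index by the new ID.
        obj = StoredObject(data=data, metadata=dict(metadata))
        self._objects[obj.object_id] = obj
        return obj.object_id

    def get(self, object_id: str) -> StoredObject:
        return self._objects[object_id]

    def find(self, **criteria) -> list[str]:
        """Return IDs of objects whose metadata matches every criterion."""
        return [
            oid for oid, obj in self._objects.items()
            if all(obj.metadata.get(k) == v for k, v in criteria.items())
        ]


store = FlatObjectStore()
clip_id = store.put(b"...video essence...", codec="XAVC", camera="A-cam", scene=12)
print(store.get(clip_id).metadata["codec"])      # XAVC
print(store.find(camera="A-cam") == [clip_id])   # True
```

The caller never supplies a path. The ID alone locates the object, and the metadata travels with the data, so it can be queried directly.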

Object storage systems, sometimes called “object stores,” can be software-only or hardware-based. Smaller stores are typically hardware-based, whereas large, data-center-scale solutions employ software-based approaches that allow the storage to be deployed much more broadly.

SCALABILITY

Object stores offer virtually unlimited scalability, something that traditional file- or block-based storage systems cannot match. Still, there are challenges in making object stores work in a block- and file-based domain.

Interfaces continue to be a key factor in the success of file- and block-based external shared storage. These two external shared storage protocols (block and file) have flourished primarily because they are as widely used and as widely available as the networking interfaces that drive them.

Traditionally, block-based storage solutions have used Fibre Channel and Ethernet (iSCSI) as their interfaces. File-based solutions usually run over Ethernet, using protocols such as CIFS/SMB and NFS.

Because of issues surrounding data protection, indexing, and addressing in large-scale data repositories, block and file storage (and RAID) are not well suited to data-center-scale storage applications. Disadvantages include RAID’s inability to scale sufficiently (and efficiently), and file-based protocols that run into metadata management problems when storage volumes approach petabyte scale and/or contain multiple billions of files. We add, however, that some enterprise-class storage providers have developed high-performance provisioning for systems holding a billion or more files, or with capacities of five petabytes or more.

Despite object storage’s advantages for storing data at the multipetabyte level, user applications still expect the functionality of the more traditional NAS or SAN interfaces. These expectations complicate object store integration, making it less straightforward than integrating block- and file-based systems. Nonetheless, object stores have taken off in popularity, especially in the last 2–3 years, and object store providers offer a variety of options for making this relatively new form of storage work with key applications.
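One common integration approach is a gateway that presents file-style paths while storing everything as flat key/value objects. The sketch below is a hypothetical illustration of that idea; `FileGateway` and its methods are invented names, and a plain dict stands in for the object store. It shows why file semantics take extra work: "directories" exist only as shared key prefixes, so listing one means scanning keys.

```python
class FileGateway:
    """Hypothetical file-style front end over a flat key/value object store.

    Path names become object keys; "directories" exist only as key prefixes.
    """

    def __init__(self) -> None:
        self._bucket: dict[str, bytes] = {}  # stands in for the object store

    @staticmethod
    def _key(path: str) -> str:
        # "/projects/show1/ep01.mxf" -> "projects/show1/ep01.mxf"
        return path.strip("/")

    def write_file(self, path: str, data: bytes) -> None:
        # Whole-object write: many object stores replace an object rather than
        # patching bytes in place, so a file edit becomes a full rewrite.
        self._bucket[self._key(path)] = data

    def read_file(self, path: str) -> bytes:
        return self._bucket[self._key(path)]

    def list_dir(self, path: str) -> list[str]:
        """Emulate a directory listing by scanning keys under a prefix."""
        prefix = self._key(path)
        prefix = prefix + "/" if prefix else ""
        entries = set()
        for key in self._bucket:
            if key.startswith(prefix):
                rest = key[len(prefix):]
                # Keep only the next path component; deeper keys imply a subfolder.
                head, sep, _ = rest.partition("/")
                entries.add(head + "/" if sep else head)
        return sorted(entries)


gw = FileGateway()
gw.write_file("/projects/show1/ep01.mxf", b"a")
gw.write_file("/projects/show1/ep02.mxf", b"b")
gw.write_file("/projects/show1/stills/frame1.jpg", b"c")
print(gw.list_dir("/projects/show1"))  # ['ep01.mxf', 'ep02.mxf', 'stills/']
```

The linear prefix scan in `list_dir` is harmless at this size, but across billions of keys it is one reason providers build dedicated NAS-style front ends and metadata indexes.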

DISPERSED STORES

Object stores offer a means of providing dispersed (locally, within the data center) and geo-dispersed (across countries and continents) data protection. They do this without RAID, typically using a form of erasure coding, a class of forward error correction (FEC).

Lost or corrupted data can be recovered from a subset of the original encoded content: the coding algorithms let the system reconstruct the lost storage elements mathematically, often in the background and with minimal disruption.
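The "recover from a subset" property can be shown with a toy Reed-Solomon-style code. This sketch treats k data symbols as points on a polynomial over the prime field GF(65537), then evaluates that polynomial at m extra points to produce parity. Any k of the k+m shards then determine the polynomial, and therefore the data. The field choice, function names, and one-symbol-per-shard layout are illustrative assumptions. Production systems typically use Reed-Solomon over GF(2^8) and encode whole stripes so that every shard stays byte-sized.

```python
P = 65537  # prime modulus; all arithmetic is in the finite field GF(P)


def _lagrange_eval(points, x):
    """Evaluate, at x, the unique polynomial passing through `points` (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data, m):
    """Systematic (k, k+m) code: shards 1..k hold the data symbols themselves,
    shards k+1..k+m hold parity taken from the same polynomial."""
    k = len(data)
    points = list(zip(range(1, k + 1), data))
    parity = [(x, _lagrange_eval(points, x)) for x in range(k + 1, k + m + 1)]
    return points + parity


def decode(surviving, k):
    """Rebuild the k data symbols from ANY k surviving (x, y) shards."""
    if len(surviving) < k:
        raise ValueError("too many shards lost to reconstruct")
    basis = surviving[:k]
    return [_lagrange_eval(basis, x) for x in range(1, k + 1)]


data = list(b"MEDIA")                 # k = 5 data symbols
shards = encode(data, m=3)            # 8 shards; any 5 suffice
survivors = [s for s in shards if s[0] not in (2, 4, 7)]  # lose 3 shards
print(bytes(decode(survivors, k=5)))  # b'MEDIA'
```

Here three of eight shards are lost, including two data shards, and the data is still rebuilt exactly. Losing a fourth shard would exceed the code's tolerance.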

Erasure coding is far more scalable than RAID and more time-efficient, although at the cost of additional CPU overhead. From a business continuity/disaster recovery (BC/DR) perspective, users benefit because erasure coding allows these “subsets” of erasure-coded data to be distributed to geographically distant locations, or to adjacent floors (or buildings) on a campus-wide system. Object stores also offer failure protection capabilities, another feature leveraged when installations have more than one location for their storage platforms. Failure protection, performed as a background rebuild task, is an erasure-coding feature free of the complexity, significant downtime, and risk involved in rebuilding RAID arrays built from multiterabyte hard disk drives.
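The geographic benefit comes down to simple counting: if no single site holds more than m of the k+m fragments, losing an entire site still leaves at least k fragments, which is enough to rebuild. The sketch below assumes a hypothetical 6+3 layout spread round-robin over three hypothetical sites. It checks single-site survivability and reports raw capacity consumed per unit of user data. Real placement policies are vendor-specific.

```python
from collections import Counter


def place_fragments(k, m, sites):
    """Assign fragment indices 0..k+m-1 to sites round-robin."""
    return {frag: sites[frag % len(sites)] for frag in range(k + m)}


def survives_site_loss(k, placement, lost_site):
    """True if at least k fragments remain after one whole site is lost."""
    remaining = sum(1 for site in placement.values() if site != lost_site)
    return remaining >= k


def raw_capacity_multiplier(k, m):
    """Raw storage consumed per unit of user data."""
    return (k + m) / k


sites = ["site-A", "site-B", "site-C"]  # hypothetical locations
k, m = 6, 3
layout = place_fragments(k, m, sites)
print(dict(Counter(layout.values())))  # {'site-A': 3, 'site-B': 3, 'site-C': 3}
print(all(survives_site_loss(k, layout, s) for s in sites))  # True
print(raw_capacity_multiplier(k, m))  # 1.5
```

For comparison, keeping three full copies at three sites would consume 3.0 times the user data. Note that in this layout, losing a whole site uses up all three parity fragments, so one further failure during the rebuild would leave the data unrecoverable until the site returns.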

Storage systems always run the risk of data loss or corruption, and very large-scale data repositories face this problem just as smaller systems do. Most spinning-disk and solid-state storage media are reliable, but not totally error-free. When storage media fail, it may be because of silent (undetected) corruption or because of unrecoverable read errors (UREs). Either way, the stored data is put at risk.
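A short calculation shows why UREs matter more as drives grow, and why rebuilding a RAID set by reading whole multiterabyte drives is risky. This sketch assumes a URE rate of 1 per 10^14 bits read, a figure often quoted for desktop-class hard drives (enterprise drives are frequently specified at 1 per 10^15). It also assumes errors occur independently, which is a simplification because real errors tend to cluster.

```python
import math


def p_unrecoverable_read(bytes_read, ure_per_bit=1e-14):
    """Probability of at least one URE while reading `bytes_read` bytes,
    assuming independent errors at a fixed per-bit rate (an assumption)."""
    bits = bytes_read * 8
    # 1 - (1 - r)^bits, computed stably for tiny r and huge bit counts
    return -math.expm1(bits * math.log1p(-ure_per_bit))


TB = 10**12
for drive_tb in (1, 4, 10, 20):
    print(f"{drive_tb:>2} TB full read: {p_unrecoverable_read(drive_tb * TB):.0%}")
# prints roughly 8%, 27%, 55% and 80% for the four sizes
```

Under these assumptions, reading a full 10 TB drive is more likely than not to hit at least one URE. An erasure-coded store can repair the affected fragment from other fragments instead of failing the whole rebuild.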

SCRUBBING THE DATA

A technique called data scrubbing helps validate data and then rebuild any that is corrupt or missing. Erasure coding algorithms, together with the typically “write-once” (read-many) nature of object store data, enable failed data to be recreated in the background with little or no impact on operations. This is another reason object stores are becoming the first choice for long-term, deep archives, as well as for near-term cyclical storage.
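A scrub pass can be sketched as "re-hash, compare, repair." Because object data is written once, a checksum recorded at write time stays valid, so any mismatch found later signals silent corruption. For brevity, this hypothetical sketch protects a stripe with a single XOR parity shard, which tolerates only one bad shard. The erasure codes used by object stores tolerate several. The function names and the SHA-256 choice are illustrative assumptions.

```python
import hashlib
from functools import reduce


def xor_bytes(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def make_stripe(fragments):
    """Store equal-length fragments plus one XOR parity fragment, each with a
    SHA-256 digest recorded at write time."""
    parity = reduce(xor_bytes, fragments)
    shards = list(fragments) + [parity]
    return [{"data": s, "sha256": hashlib.sha256(s).hexdigest()} for s in shards]


def scrub(stripe):
    """Re-hash every shard; rebuild at most one bad shard from the others.
    Returns the indices that were repaired."""
    bad = [i for i, s in enumerate(stripe)
           if hashlib.sha256(s["data"]).hexdigest() != s["sha256"]]
    if len(bad) > 1:
        raise RuntimeError(f"shards {bad} corrupt: exceeds single-parity protection")
    for i in bad:
        # XOR of all shards is zero, so any one shard equals the XOR of the rest.
        others = [s["data"] for j, s in enumerate(stripe) if j != i]
        stripe[i]["data"] = reduce(xor_bytes, others)
    return bad


stripe = make_stripe([b"AAAA", b"BBBB", b"CCCC"])
stripe[1]["data"] = b"BxBB"   # simulate silent corruption (bit rot)
repaired = scrub(stripe)
print(repaired)               # [1]
print(stripe[1]["data"])      # b'BBBB'
```

A second `scrub(stripe)` call would find nothing to repair. In a real system, such passes run continuously at low priority, which is what keeps the impact on operations small.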

In a future article, we’ll take the next steps, discussing configuration, performance balances, and the chief advantages of an object store’s rich metadata management.

Karl Paulsen is CTO at Diversified (www.diversifiedus.com) and a SMPTE Fellow. Read more about this and other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.