Scaling Servers to Fit the Workflow

Karl Paulsen

PITTSBURGH — Systems focused on media now have more IT specifications in them than media components. The computing horsepower needed to perform file operations such as media migration, transcoding or format conversion never seems to stop growing. Not long ago, the same could have been said about storage. Every time facility owners turned around, they needed another 100 gigabytes, and that need grew to several more terabytes in only a few years.

Today the story is about server performance. With the number of operations increasing and the time allowed to perform them shrinking, it is no wonder the number of server components is soaring. Gone are the days when a single quad-core processor with 16 GB of memory made up the entire complement of a media processing engine.

High-performance server costs aren’t coming down much, either. Today, a server’s life expectancy is no longer driven by the CFO’s capital expense directives. Instead, the value those servers deliver to overall productivity determines when they become obsolete to the organization.

In part, this is one reason the cloud makes sense to many users. Nobody ever asks what’s “in” the cloud. You don’t hear discussions about the number of cores in the server or how the storage system is protected (object, RAID or other). What matters most is access to the services and which services are available.

FUTURE REQUIREMENTS

Setting the cloud aside for this article, let’s explore what users need to know when all their file-based services remain in-house.

One thing designers need to do is figure out what the future will require. It can be fairly straightforward to look at current requirements, and not too difficult to extrapolate that thinking six months to a year ahead. Anticipating what will be necessary in year two or three can be a real challenge, especially given the growth in so many areas and the expectations of what producers or content creators may want to do.

Architectures that support file-based workflows for content preparation and delivery should be categorized by the overall scale they must support. Simpler, all-in-one solutions might be acceptable for a short-term production cycle or for delivering pre-produced content for the Web or similar distribution, as long as the deliverables they must support are clearly defined.

A typical system might include a single content capture and post-production editing format; a limited set of NLEs (two to three workstations) employing a single collaborative workflow; and a straightforward release profile provided by a single transcode engine.

Users should expect constraints such as one to a few codecs, limited proxy (browse) components and no more than a few release versions for each codec format.
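Even within these limits, the number of transcode jobs multiplies quickly, because every source clip fans out into one file per release version plus its proxy. The Python sketch below does that arithmetic. It is illustrative only: the profile (codec names, version counts, one proxy per source, 40 sources per day) is hypothetical and not drawn from any particular product.

```python
def outputs_per_source(release_versions: dict[str, int], proxies: int = 1) -> int:
    """Count the files the transcode engine writes for one source clip.

    `release_versions` maps each delivery codec to the number of release
    versions produced in that codec (hypothetical profile).
    """
    return sum(release_versions.values()) + proxies


def daily_transcode_jobs(sources_per_day: int,
                         release_versions: dict[str, int],
                         proxies: int = 1) -> int:
    """Total transcode jobs per day if every source gets the full profile."""
    return sources_per_day * outputs_per_source(release_versions, proxies)


# Hypothetical small-system profile: two codecs, four release versions in all.
profile = {"H.264 MP4": 3, "XDCAM HD Long-GOP": 1}
print(outputs_per_source(profile))        # 5
print(daily_transcode_jobs(40, profile))  # 200
```

Adding a single codec with two more versions raises the per-source count from five to seven, which is why a small system depends on keeping its deliverables fixed.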

Transcoding has become a big factor for any production service and is now a core component of the workflow. Transcode systems range in scale from a single server housing all the functionality to multiple servers that distribute services across several nodes.

For small systems, transcode parameters are defined to support a modest volume of file exchanges or transactions, with outputs derived from or delivered as Long-GOP files. Such systems should be suitable for transcoding inbound high bit-rate files (including I-frame-only files) to lower bit-rate files (e.g., MP4) for delivery.

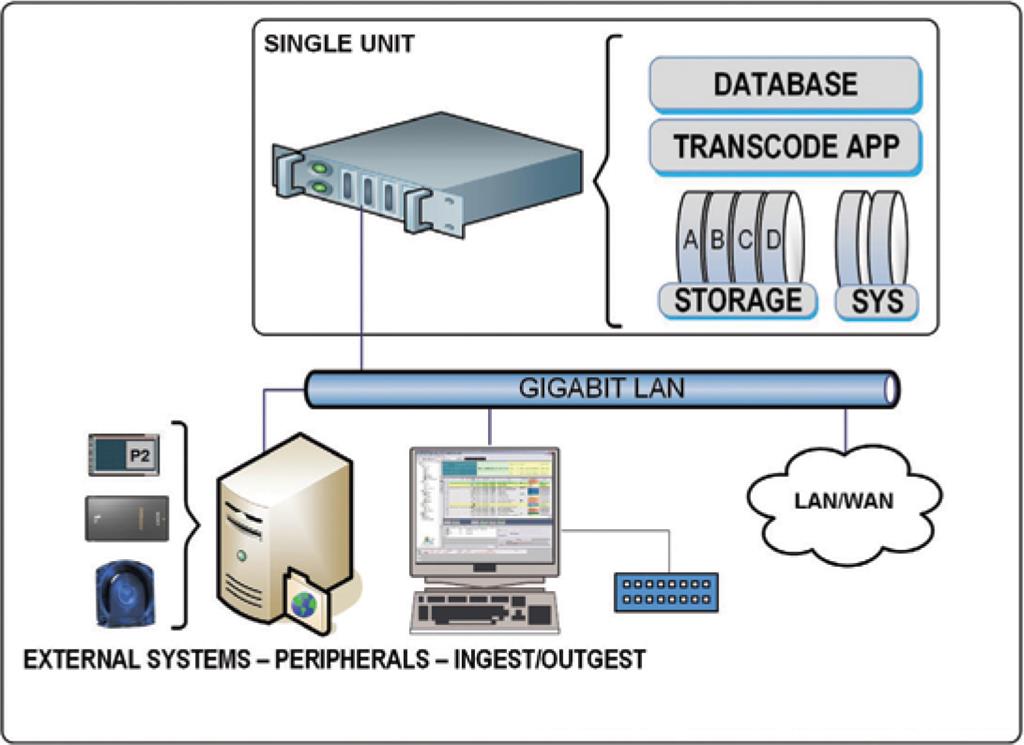

Fig. 1: Single server system

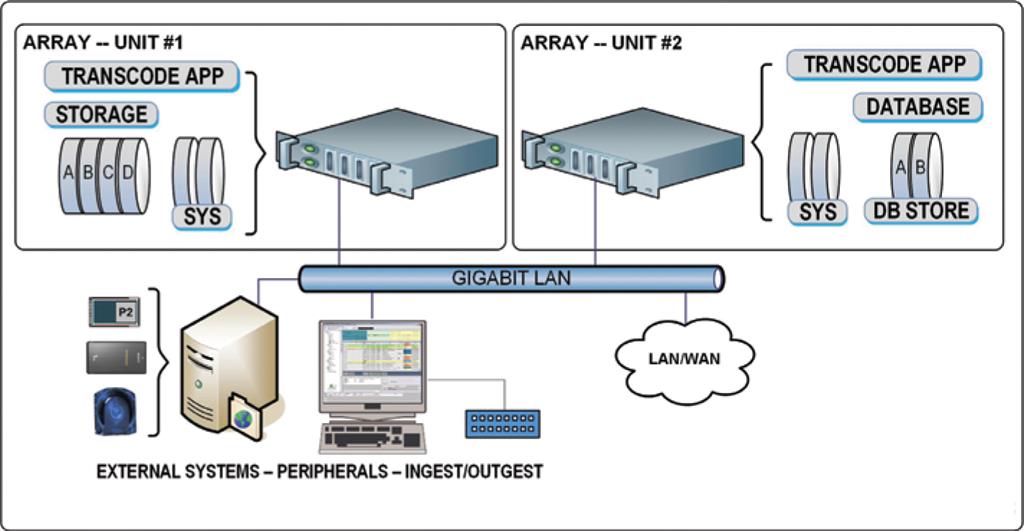

Fig. 2: Simple array shares storage and database among two nodes

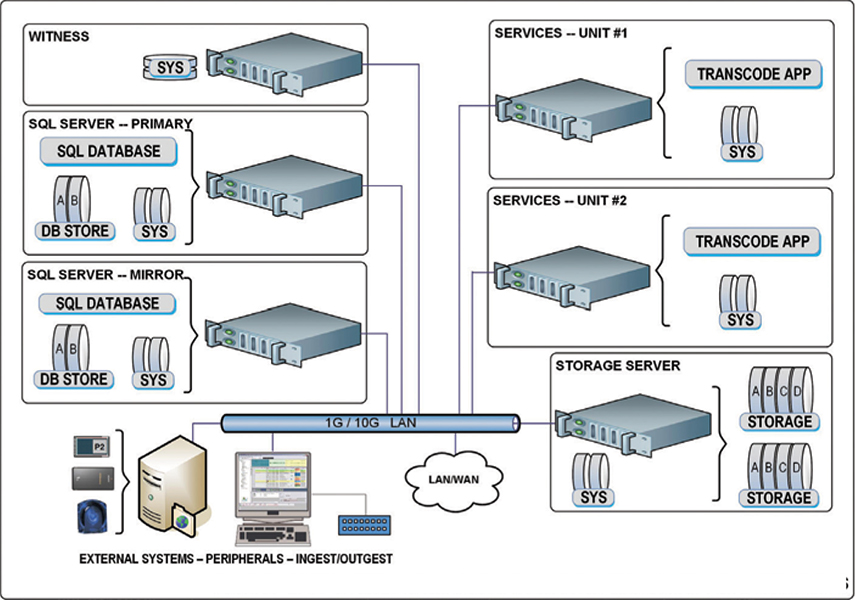

Fig. 3: Storage server with mirrored database, witness and two service nodes

The all-in-one transcoding system (Fig. 1) is often built with lower-cost hardware and typically runs on a single server with self-contained local storage and high-speed disk access.

At this scale, the system usually lacks a dedicated database server (e.g., no dedicated SQL hardware) and may be limited by a reduced number of transactions, some file-size limitations and no redundancy or load balancing. Scalability is constrained to the single server node, making the system unsuitable for workflows that regularly require a high volume of files or processes.
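A rough capacity estimate shows where a single node runs out. The sketch below assumes a node can run a fixed number of simultaneous transcodes of a known average duration, and that it should be loaded to only 70% of its ideal rate to leave headroom. All three figures (4 concurrent jobs, 20 minutes per job, 0.7 headroom) are hypothetical placeholders, not vendor specifications.

```python
import math


def node_jobs_per_day(concurrent_jobs: int, minutes_per_job: float) -> float:
    """Ideal daily throughput of one node running around the clock."""
    return concurrent_jobs * 24 * 60 / minutes_per_job


def nodes_needed(jobs_per_day: int, concurrent_jobs: int,
                 minutes_per_job: float, headroom: float = 0.7) -> int:
    """Nodes required when each node is loaded to only `headroom` of its ideal rate."""
    usable = node_jobs_per_day(concurrent_jobs, minutes_per_job) * headroom
    return math.ceil(jobs_per_day / usable)


# Hypothetical figures: 4 simultaneous transcodes, 20 minutes each.
print(node_jobs_per_day(4, 20))  # 288.0
print(nodes_needed(200, 4, 20))  # 1
print(nodes_needed(500, 4, 20))  # 3
```

Under these assumptions one server covers a 200-job day, but a 500-job day already calls for three nodes, which is the point at which the single-server design stops fitting the workflow.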

From the release and transcode perspective, a similar system could be expanded to support a modest increase in transactions by adding another server configured as an array (Fig. 2). Like a hard-drive storage array, a transcode array distributes its resources across nodes to support more activities, but this also comes with certain limitations. Depending upon the solution set, a transcode array may not be suitable for high bit-rate, I-frame-only edit files.

CONSTRAINTS AND CONCERNS

Other constraints are set by storage availability (capacity and bandwidth) and by the transfer speeds between the server nodes (e.g., a GbE LAN carrying high traffic volumes that it also shares with the LAN/WAN or other external devices, including the editorial platform).
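The effect of link speed and shared traffic on file moves is simple arithmetic. The sketch below computes transfer time from file size (decimal gigabytes), link rate (gigabits per second) and an `efficiency` factor standing in for protocol overhead and competing traffic. The 100 GB file and the 50% share are hypothetical examples, not measurements.

```python
def transfer_seconds(file_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Time to move `file_gb` gigabytes over a `link_gbps` gigabit/s link.

    `efficiency` is the assumed fraction of line rate actually available
    after protocol overhead and other traffic on the same segment.
    """
    bits = file_gb * 8e9  # decimal gigabytes -> bits
    return bits / (link_gbps * 1e9 * efficiency)


# Hypothetical 100 GB high bit-rate edit file.
print(transfer_seconds(100, 1))                  # 800.0  (about 13 minutes on idle GbE)
print(transfer_seconds(100, 10))                 # 80.0   (10 GbE)
print(transfer_seconds(100, 1, efficiency=0.5))  # 1600.0 (GbE shared with editorial traffic)
```

Even at full line rate, a GbE link takes ten times longer than 10 GbE, and sharing it with other devices stretches the move further.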

Even with its resources now distributed across two nodes, the array brings other concerns. One factor is whether a single transcode node is also hosting local storage. Where the other node hosts both services and the system database (and depending upon what kind of database it is), expect increased data traffic between the two nodes, which can burden the service capabilities of both servers. Of course, the actual volume of activity throughout the workflow taxes all the resources, which in turn can reduce performance under some conditions.

At this point, the “simpler” transcoding and post-production workflow configuration reaches the stage where additional engines are needed to handle more sophisticated workflows and additional deliverables, and to provide resiliency.

The first step is to move the database onto its own server, which turns the services nodes into dedicated servers that process only media and frees them for more capabilities. Usually two services nodes are included, which enables load balancing.
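Load balancing between the two services nodes can follow several policies. The sketch below shows one common choice, least-loaded dispatch, in which each new job goes to the node with the fewest jobs in progress. This is an assumption for illustration; the article does not say which policy any given transcode product uses, and the node names are hypothetical.

```python
class LeastLoadedBalancer:
    """Send each new transcode job to the services node with the fewest active jobs."""

    def __init__(self, nodes: list[str]) -> None:
        self.active = {name: 0 for name in nodes}  # node -> jobs in progress
        self.assignments: dict[str, str] = {}      # job id -> node

    def assign(self, job_id: str) -> str:
        # Ties are broken by node name so the choice is deterministic.
        node = min(self.active, key=lambda n: (self.active[n], n))
        self.active[node] += 1
        self.assignments[job_id] = node
        return node

    def complete(self, job_id: str) -> None:
        # Free the slot on whichever node ran this job.
        node = self.assignments.pop(job_id)
        self.active[node] -= 1


# Hypothetical names for the two services nodes.
lb = LeastLoadedBalancer(["svc-node-1", "svc-node-2"])
print([lb.assign(f"job-{i}") for i in range(3)])  # ['svc-node-1', 'svc-node-2', 'svc-node-1']
lb.complete("job-0")
lb.complete("job-2")
print(lb.assign("job-3"))  # svc-node-1
```

Counting active jobs rather than alternating strictly keeps a node that is stuck on a long transcode from receiving more work while its partner sits idle.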

If the system uses a SQL or similar database, users will build it in one of two general configurations. The database can certainly run on a single server; however, most systems with higher performance requirements (higher transactional volumes) employ a main SQL server plus a mirror. When mirroring, users may add a third “witness” server that monitors the main and mirror SQL servers (Fig. 3).
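The witness matters because it breaks ties. With three servers, any two that can still see each other form a majority, so the mirror can take over without risking two servers both acting as the main database. The sketch below models that two-of-three quorum rule in generic form. It is a simplified illustration, not the actual failover logic of any specific SQL product, and the `Links` structure is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Links:
    """Which connections the mirror and witness currently observe (hypothetical model)."""
    mirror_sees_principal: bool
    mirror_sees_witness: bool
    witness_sees_principal: bool


def mirror_should_take_over(links: Links) -> bool:
    """The mirror promotes itself only when it and the witness agree the main server is gone."""
    return (not links.mirror_sees_principal
            and links.mirror_sees_witness
            and not links.witness_sees_principal)


def principal_should_keep_serving(principal_sees_mirror: bool,
                                  principal_sees_witness: bool) -> bool:
    """A main server that can reach neither partner has lost quorum and should stop serving."""
    return principal_sees_mirror or principal_sees_witness


# Main server down: mirror and witness agree, so the mirror takes over.
print(mirror_should_take_over(Links(False, True, False)))  # True
# Only the mirror's link is broken: the witness still sees the main server, so no takeover.
print(mirror_should_take_over(Links(False, True, True)))   # False
```

Without the witness, the mirror cannot tell a failed main server from a broken network link, which is why automatic failover generally calls for the third server.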

Finally, two more elements should be considered. First, the upgraded system should include 10 GbE connectivity between the services nodes and the LAN, which means it must now include 1 GbE/10 GbE switches as well. Second, so that the services nodes do only image processing, an enterprise-class NAS should be added where images can be cached and managed separately from the transcode and processing services nodes. The NAS also resides on the LAN’s 10 GbE segment.

That brief overview covers a range of transcode solution sets, from simple to modestly complex. Each comes with pros and cons, and each incremental step brings the facility closer to providing more sets of services to users or customers. Beyond the fundamental configurations described, array configurations can grow to add metadata servers (main and backup), a topology change to Fibre Channel, and other additions, including tier-two and tier-three storage solutions.
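The progression from Fig. 1 to Fig. 3 can be summarized as a simple decision rule. The sketch below maps three requirements (daily job volume, need for resiliency, and whether high bit-rate, I-frame-only edit files must be processed at volume) onto the three configurations. The capacity thresholds are hypothetical defaults for illustration only; real limits depend on the hardware and transcode product.

```python
from enum import Enum


class Tier(Enum):
    ALL_IN_ONE = "Fig. 1: single server, local storage"
    TWO_NODE_ARRAY = "Fig. 2: two-node array, shared storage and database"
    DEDICATED_DB = "Fig. 3: storage server, mirrored database, witness, two services nodes"


def pick_tier(jobs_per_day: int,
              needs_redundancy: bool,
              iframe_edit_files: bool,
              single_node_limit: int = 200,  # hypothetical one-node capacity
              array_limit: int = 400) -> Tier:  # hypothetical two-node capacity
    """Map workflow requirements onto the configurations in Figs. 1 to 3."""
    # Resiliency arrives only with the Fig. 3 configuration.
    if needs_redundancy:
        return Tier.DEDICATED_DB
    if jobs_per_day <= single_node_limit:
        return Tier.ALL_IN_ONE
    # A transcode array may not suit high bit-rate, I-frame-only edit files.
    if jobs_per_day <= array_limit and not iframe_edit_files:
        return Tier.TWO_NODE_ARRAY
    return Tier.DEDICATED_DB


print(pick_tier(120, needs_redundancy=False, iframe_edit_files=False).value)
print(pick_tier(300, needs_redundancy=False, iframe_edit_files=True).value)
```

The first call selects the Fig. 1 single server; the second skips the array because of the I-frame edit files and lands on Fig. 3.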

For enterprise-level file-based workflow implementations, these topics are just the tip of the iceberg.

This author wishes to thank Telestream for their insight into these architectures.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

