Solid-State Highlights From CES

Karl Paulsen

January’s International CES offered more than just the latest in ultra-high-definition 4K TVs, OLEDs and every form of tablet and smartphone accessory on the planet. The show also included an offsite super session called “Storage Visions 2013,” now emerging as a must-attend event held just before the official opening of the CES exhibits. Organized as an Entertainment Storage Alliance event, Storage Visions 2013 focused on advancements in storage technologies aimed squarely at the OEM industry and users, and featured presentations by leading manufacturers, users and producers of storage systems and content.

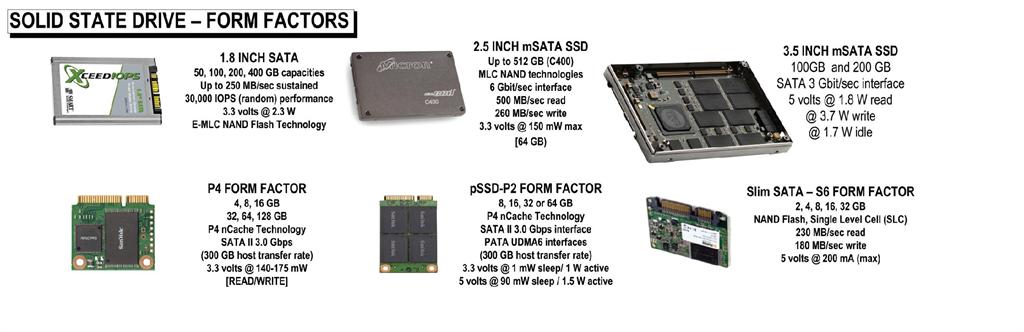

Of key interest was learning where non-rotating media, in particular the solid-state drive (SSD), is headed. SSDs have reenergized the PC, and by 2015, the average capacity of an SSD-based PC is expected to double, approaching 270 GB per drive. Driven by ultra-thin laptops and tablet-based platforms, the SSD has already made its way into high-performance computing (HPC) desktops for business and consumer applications. The next generation of SSDs will land deep inside the datacenter, where this form of storage is expected to provide numerous advantages, offer new promises and increase the usefulness of the cloud. For more information on the technology, see my column “Exploring Solid-State Drives” from the Aug. 7, 2012 issue of TV Technology.

SOLID STATE ADVANTAGES

SSDs have several advantages. Presenters at Storage Visions emphasized that SSDs provide a “95 percent reduction in latency” over rotating media. Latency is the time between the issuance of a command to fetch data and the moment that data is readable, accessible and usable by the network or server. Reducing latency has a huge impact on performance, changing the architecture of systems from the CPU to the application.
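To put the 95 percent figure in concrete terms, here is a minimal Python sketch. The 5 ms baseline is an illustrative assumption for a rotating drive, not a number from the presentations, and the function name is hypothetical.

```python
def reduced_latency_ms(baseline_ms: float, reduction_pct: float) -> float:
    """Return the latency that remains after cutting baseline_ms by reduction_pct percent."""
    if not 0 <= reduction_pct <= 100:
        raise ValueError("reduction_pct must be between 0 and 100")
    return baseline_ms * (1 - reduction_pct / 100)


# Assumed example value: a 5 ms access on rotating media (illustrative only).
hdd_ms = 5.0
ssd_ms = reduced_latency_ms(hdd_ms, 95)

# The ratio shows how many back-to-back requests fit in the old wait time.
print(f"{hdd_ms} ms becomes {ssd_ms:.2f} ms, a factor of {hdd_ms / ssd_ms:.0f}")
```

Whatever the baseline, a 95 percent reduction leaves one-twentieth of the original wait, so a chain of dependent requests could complete roughly 20 times sooner.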

Other advantages of SSDs, especially for large installations, include lower power consumption than mechanical rotary storage (hard drives). Combine that with longer life expectancy and smaller physical space requirements, and the datacenter may actually come out ahead in the long run by deploying SSDs for storage.

Datacenters, whether built for corporations or as public clouds, may employ literally thousands of disk drives in hundreds of arrays per location. These storage systems not only consume an enormous amount of space, power and cooling, but also become a maintenance nightmare for those who have to compensate for failed, offline or error-prone hard drives. Resilience requirements often call not only for RAID 6 configurations in each storage array, but also for mirroring and replication of the storage at other locations. Such locations may be local (internal to the datacenter) or external, at geographically separated areas of the continent.

In a large datacenter, when a drive fails in an array, it is often left unreplaced. Given the huge volume of drives in service, the burden of maintaining all the mechanical storage components becomes enormous. Negative factors include the rebuild time required once a bad drive is replaced, plus the risk that new data written during that vulnerable period, when the RAID is only partially functional, might be lost. Including or eventually migrating to solid-state drives reduces the risks associated with mechanical failures considerably.
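The length of that vulnerable rebuild period can be roughed out with simple arithmetic. The Python sketch below is a back-of-the-envelope estimate only; the 3 TB capacity and 100 MB/s sustained rebuild rate are assumed values for illustration, and the function name is hypothetical.

```python
def rebuild_hours(capacity_tb: float, rebuild_mb_per_s: float) -> float:
    """Estimate the minimum rebuild time: the whole replacement drive must be rewritten."""
    if rebuild_mb_per_s <= 0:
        raise ValueError("rebuild_mb_per_s must be positive")
    capacity_mb = capacity_tb * 1_000_000  # decimal terabytes to megabytes
    return capacity_mb / rebuild_mb_per_s / 3600  # seconds to hours


# Assumed values: a 3 TB drive rebuilt at a sustained 100 MB/s.
print(f"Rebuild window: about {rebuild_hours(3, 100):.1f} hours")
```

In practice the window is longer than this floor, because the array keeps serving production traffic while it rebuilds.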

So the storage community is now looking at what NVM or “non-volatile memory” (i.e., solid-state memory) can bring to the datacenter as a whole.

NO MORE WAITING

We’ve seen the performance of SSD increase six orders of magnitude (one million times) over HDD in just one generation, giving hope for continued improvements in the very near future. The speed of flash memory is approaching that of DRAM, allowing data to get “much closer to where it is needed,” which is at the processor itself, rather than out on network-attached storage or a storage area network. This changes how applications must work in general. Until now, applications have been designed around storage latency, which left CPUs wallowing around waiting for data to get from point A to point B. This remarkable latency reduction means that apps will need to be redesigned in order to take advantage of the new speeds available from non-rotating storage media.

Costs for SSDs are now under one dollar per gigabyte. Even though SSD costs have continued to drop over past years, attendees were cautioned not to expect the same degree of price drops to continue during 2013. However, what is coming is a steady migration of flash memory from SLC (single-level cell) versions to MLC (multi-level cell). Today, 80 percent of all units are 2-bits/cell (4 levels) or 3-bits/cell (8 levels) flash. TLC (triple-level cell) memory, at 3-bits/cell, is now prevalent in mobile applications, and MLCs offer a higher bit density, which will let a 300 GB SSD live quite comfortably in a 2.5-inch drive form factor.
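The relationship between bits per cell and levels is simple: a cell that stores n bits must distinguish 2^n distinct charge levels. A minimal Python sketch follows; the table uses the common industry shorthand in which “MLC” means the 2-bit variant, and the function name is hypothetical.

```python
# Common shorthand for flash cell types, mapped to bits stored per cell.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3}


def charge_levels(bits_per_cell: int) -> int:
    """Return how many distinct charge levels a cell must resolve to hold bits_per_cell bits."""
    if bits_per_cell < 1:
        raise ValueError("bits_per_cell must be at least 1")
    return 2 ** bits_per_cell


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell, {charge_levels(bits)} levels")
```

Each added bit doubles the number of levels squeezed into the same cell, which is where the extra density comes from.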

One drawback could remain: The life expectancy of a flash memory device is reduced as the density of the memory bits increases. The life cycle, defined as the number of “program erase cycles,” remains a concern for users of SSDs in large quantities. This further drives how the applications using solid-state storage must perform, since it is the SSD controller that must manage the rough spots that occur over the life of the memory itself.

Other revolutions at Storage Visions, to be reviewed in later editions of this column, included a new approach to permanent data archiving from Panasonic, in which 100TB of RAID-protected Blu-ray storage fits in 6RU of space, consuming only 7 watts at idle and 100 watts total during read/write. Each 12-disc, 1.2TB Blu-ray cartridge supports up to six disc failures before data is totally lost. Another unique optical disc from Millenniata, called “M-Disc,” is a 4.7GB (DVD+M) disc designed to last 1,000 years. Written with a conventional DVD burner, the M-Disc offers secure, lifetime data retention that does not require migration from medium to medium over time.

Another big eye-opener came when members of the media and entertainment industry provided insight into their needs for storing and managing the overwhelming growth in data used not only professionally but publicly. A concern for end users across the storage industry was handling metadata from origination through processing and out to distribution. While we may have provided channels for storing the explosion of collected data, it appears the greatest hurdle facing those who need an answer is what to do with the metadata.

Karl Paulsen, CPBE, is a SMPTE Fellow and CTO at Diversified Systems. Read more about these and other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.