Storage Modernization With PCIe and NVMe

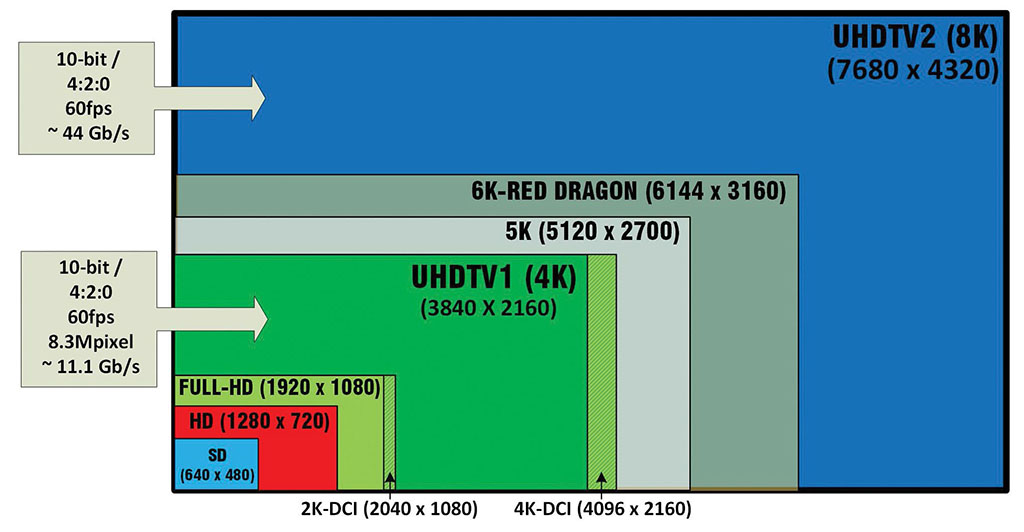

Amid the many changes in media during this age of digital transformation, production facilities are deciding whether to go IP while trying to determine the impact of producing content in UHD-TV1 (4K) and above.

In January, the 2019 Consumer Electronics Show offered evidence that 4K is here, stable and readily available, while the industry looks squarely at 8K (UHD-TV2) as the next great change in television displays. Of course, with 8K comes a need to create and deliver that content and, as the cycle continues, a quantum shift in how to efficiently manage the required changes to the infrastructure.

Obviously, the volume of bits needed to produce all this new content won’t really get any smaller. To add to it, the need to produce good content for 8K likely means it is shot in high dynamic range and at a minimum of 4K in resolution to realize the value of the larger screens, which will depend upon upscalers for quite some time.

Fig. 1 shows just how image sizes will relate to increases in bit volumes in order to meet the requirements to deliver high-quality video in the future.
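As a rough illustration of that relationship, the sketch below estimates uncompressed active-picture bit rates for three raster sizes. The 60 fps frame rate and 10-bit 4:2:2 sampling (20 bits per pixel) are assumptions chosen for the example, not values taken from Fig. 1, and the function name is hypothetical.

```python
# Illustrative only: the frame rate and sampling format are assumptions,
# not figures taken from the article's Fig. 1.
FORMATS = {
    "HD (1920x1080)": (1920, 1080),
    "UHD-TV1 / 4K (3840x2160)": (3840, 2160),
    "UHD-TV2 / 8K (7680x4320)": (7680, 4320),
}


def uncompressed_gbps(width, height, fps=60, bits_per_pixel=20):
    """Active-picture bit rate in gigabits per second.

    bits_per_pixel=20 corresponds to 10-bit 4:2:2 sampling (assumed).
    Blanking, audio and ancillary data are ignored.
    """
    return width * height * fps * bits_per_pixel / 1e9


for name, (w, h) in FORMATS.items():
    print(f"{name}: {uncompressed_gbps(w, h):.2f} Gbps")
```

Whatever frame rate and sampling are chosen, each step up in raster size quadruples the pixel count, so the bit volume quadruples with it.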

MULTIDIRECTIONAL SCALABILITY

Throughout this past decade, applications associated with SaaS, AI, VR, social media and image resolution have demanded that systems scale in multiple dimensions. Peak demands continually change, forcing previously unseen growth in data sets and their management. New frameworks are needed to address these data-intensive workloads at a scale that legacy solution sets cannot meet.

System resources are now being decoupled, allowing them to be scaled independently and delivered as services rather than treated as they were in the past. Those who relied on the “shared storage” model are finding insufficient I/O (input-output) performance and excessive latency, both of which cap throughput; the same goes for network interfaces and servers as well as storage.

Flash-based designs, now some 20 years past their introduction, are reaching a peak in terms of serial data I/O and transfers. It is no longer efficient to simply replace a spinning hard drive with an SSD and then tweak performance on a legacy SAS or SATA interface.

Today, the latest up-and-coming technology is Non-Volatile Memory Express (NVMe), a scalable host controller interface coupled to a storage protocol. It accelerates data transfers between client or enterprise systems and solid state drives (SSDs) attached over high-speed PCIe (Peripheral Component Interconnect Express).

COMMANDS AND QUEUES

At least two primary factors affect SSD performance: commands and queues. A traditional SATA device will typically support up to 32 commands in a single queue, while a SAS device supports up to 256 commands in a single queue. Briefly, here is how these two constructs work:

Based upon the anticipated workload and system configuration, host software will create “queues” (positions or slots of availability), up to the maximum supported by a controller. Often the number of queues is tied to the number of processor cores, for example one queue per core, which avoids locking and ensures the data structures are created in the cache of the appropriate core.

A circular buffer with a fixed slot size, known as a Submission Queue (SQ), is used by the host to submit “commands” for execution by the controller. Each SQ entry is a command. A Completion Queue (CQ) is another circular buffer with a fixed slot size that is used to post the status of completed commands. The number of queues varies by application: enterprise applications range from 16 to 128 queues, while client applications use only between 2 and 8. Block sizes for both are 4 KB (and above with NVMe protocols).
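The queue mechanics described above can be sketched in a few lines. This is a simplified model, not driver code: the `Ring` class, the `controller_service` function and the command fields are hypothetical names invented for the illustration, and real NVMe queues live in host memory and are signaled through doorbell registers.

```python
class Ring:
    """Fixed-slot circular buffer tracked by head and tail indices."""

    def __init__(self, slots):
        self.buf = [None] * slots
        self.head = 0  # next entry to consume
        self.tail = 0  # next free slot to fill

    def is_empty(self):
        return self.head == self.tail

    def is_full(self):
        # One slot stays unused so that "full" and "empty" are distinguishable.
        return (self.tail + 1) % len(self.buf) == self.head

    def push(self, entry):
        if self.is_full():
            raise BufferError("queue full")
        self.buf[self.tail] = entry
        self.tail = (self.tail + 1) % len(self.buf)

    def pop(self):
        if self.is_empty():
            raise BufferError("queue empty")
        entry = self.buf[self.head]
        self.head = (self.head + 1) % len(self.buf)
        return entry


def controller_service(sq, cq):
    """Hypothetical controller: run each queued command, then post its status."""
    while not sq.is_empty():
        cmd = sq.pop()
        cq.push({"command_id": cmd["command_id"], "status": "success"})


# Host side: submit three commands to the SQ, then read statuses from the CQ.
sq, cq = Ring(slots=8), Ring(slots=8)
for cid, opcode in enumerate(["read", "write", "read"]):
    sq.push({"command_id": cid, "opcode": opcode, "lba": cid * 8})

controller_service(sq, cq)
while not cq.is_empty():
    print(cq.pop())
```

Giving each processor core its own SQ/CQ pair means no two cores ever contend for the same head and tail indices, which is why no locking is needed.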

LANE CHANGES AND GIGATRANSFERS

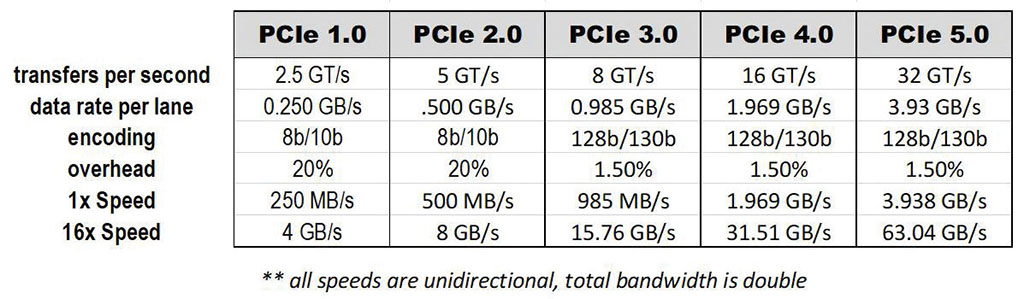

Understanding what PCIe really delivers can be complex, given the variety of interface options and the interdependency of the I/O to and from the device attached to the bus. Calculating PCIe bandwidth can be even more challenging, especially because terms such as gigatransfers per second (GT/s) are often used interchangeably with rates and frequencies such as gigahertz (GHz). Rather than look at the explicit details of each “PCIe generation,” we’ll provide a simpler, introductory view of how PCIe has evolved.

Platforms utilizing PCIe connectivity have continued to advance, moving from Gen1 (24 lanes) to Gen2 (36 lanes with a doubling of bandwidth) to an I/O performance of roughly 1 GBps per lane in Gen3.

A lane is a data-transmission link consisting of two pairs of wires: one pair for transmitting and one pair for receiving. Consumer PCIe slots can be 1, 4, 8 or 16 lanes. Packets of data move across a lane at a rate of 1 bit per cycle. A 1x link (one lane) carries 1 bit per cycle in each direction over four wires (two per direction). A 2x link (two lanes) utilizes eight wires and transmits 2 bits at once (per cycle) in each direction. The numbers grow with wider links, and the transfer rate per lane grows with successive PCIe generations.

PCIe Gen1.x and 2.x use 8b/10b encoding, which results in a 20% performance overhead. The encoding converts each 8 bits of data to a 10-bit character, so a Gen1 lane signaling at 2.5 GT/s carries only 2 Gbps of data, or 250 MBps. Because the encoding changes between generations, converting gigatransfers to gigabytes of bandwidth does not scale uniformly; the relationship is best presented in a table that compares encoding, transfer rates and speeds (Fig. 2).

SSDs supporting Gen2 with eight lanes deliver over 3 GBps; Gen3 interfaces double that to 6 GBps for a single device. Gen3 encoding is 128b/130b, resulting in only about 1.5% overhead. Latency is also reduced, and devices can attach directly to the chipset or to a CPU.
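The encoding arithmetic can be worked through with a short calculation. The sketch below is not a reproduction of Fig. 2; it assumes the published per-lane transfer rates for each generation (2.5, 5, 8, 16 and 32 GT/s), uses hypothetical function names, and ignores packet and protocol overhead, so real devices deliver somewhat less.

```python
# generation: (transfer rate in GT/s, payload bits, bits on the wire)
GENERATIONS = {
    1: (2.5, 8, 10),      # 8b/10b encoding
    2: (5.0, 8, 10),      # 8b/10b encoding
    3: (8.0, 128, 130),   # 128b/130b encoding
    4: (16.0, 128, 130),
    5: (32.0, 128, 130),
}


def lane_megabytes_per_s(gen):
    """Usable one-way bandwidth of a single lane, in MBps."""
    gt_per_s, payload_bits, wire_bits = GENERATIONS[gen]
    usable_megabits = gt_per_s * 1000 * payload_bits / wire_bits
    return usable_megabits / 8  # bits to bytes


def link_gigabytes_per_s(gen, lanes):
    """Usable one-way bandwidth of a multi-lane link, in GBps."""
    return lane_megabytes_per_s(gen) * lanes / 1000


for gen in GENERATIONS:
    print(f"Gen{gen}: {lane_megabytes_per_s(gen):7.1f} MBps per lane, "
          f"{link_gigabytes_per_s(gen, 16):5.2f} GBps at x16")
```

Under these assumptions a Gen2 x8 link tops out at 4 GBps and a Gen3 x8 link at just under 8 GBps, which is consistent with single devices delivering over 3 GBps and about 6 GBps respectively once protocol overhead is taken into account.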

IT DOESN’T STOP THERE

AMD announced at the 2019 CES it would be the first to support PCIe 4.0 at either the enterprise or desktop (client) levels. Ironically, in May of this year, the PCIe 5.0 specification (providing four times more bandwidth than PCIe 3.0) was announced even before Gen4 (PCIe 4.0) was shipped.

Just how far will this go? Why does development continue when, for example, a four-lane Gen5 link already exceeds the bandwidth of a 100 GbE (Gigabit Ethernet) connection, and a 16-lane link carries about 63 GB per second? Certainly, home users don’t need these values, and even enterprise users might question the proposition, especially given the limits of the SSDs they would connect and the cost of the switch gear, even as those costs continue to fall.

One scenario driving this exponential growth in data rates is the “always-on” (vs. the “always-connected”) compute platform; the always-on model seems to be leading the race because of battery life expectations. For the media and entertainment industry, particularly those routinely creating UHD/4K content, a storage refresh can now take less space, require less power and cooling, and deliver several times the performance of previous storage solutions.

EMERGING PROTOCOL

Current storage system advances are now based upon the latest NVMe protocol. Pairing NVMe with multicore processors helps remove the bottlenecks inherent in conventional interfaces. NVMe brings highly scalable new capabilities for accessing storage media at high speeds, which in turn enables more growth for data-driven marketplaces including media, video production and post.

When you’re in refresh mode or considering a move to UHD production, take a look at storage solutions incorporating NVMe, especially those that let you repurpose SSDs you may already own.

Karl Paulsen is CTO at Diversified and a SMPTE Fellow. He is a frequent contributor to TV Technology, focusing on emerging technologies and workflows for the industry. Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.