Streamlining Audio Dubbing with ML- and AI-Based QC

Today, content creators are increasingly looking to expand across geographies, but one of the biggest challenges they face is overcoming language barriers. Delivering content to non-native speakers is possible through captions and subtitles, or audio dubbing. Yet, quality control (QC) checks are imperative to ensure that everything flows smoothly. This article will examine why audio dubbing is better than providing subtitles, the key capabilities to look for in a QC system and how machine learning (ML) and artificial intelligence (AI) technologies are simplifying QC workflows for audio dubbing.

WHY AUDIO DUBBING MAKES SENSE VS. SUBTITLES

Subtitles are an easy and affordable way to deliver content to non-native speaking viewers, but they have limitations. For example, it can be difficult to fit long dialogue scenes into subtitles within limited screen time. Furthermore, subtitles can be distracting, since they make viewers focus on the text rather than on important details in the scene. Subtitles also fail to express the emotions being delivered in the dialogue.

Audio dubbing to regional languages is an alternative option that translates a foreign language program into the audience's native language. Dubbed tracks are created by adding language-specific content to the original audio and have become a cost-effective solution for content creators to reach audiences in different areas of the world. When creating dubbed audio tracks, content creators must ensure high quality and synchronization with the video or original audio track.

HOW TO SIMPLIFY AUDIO DUBBING QC WORKFLOWS

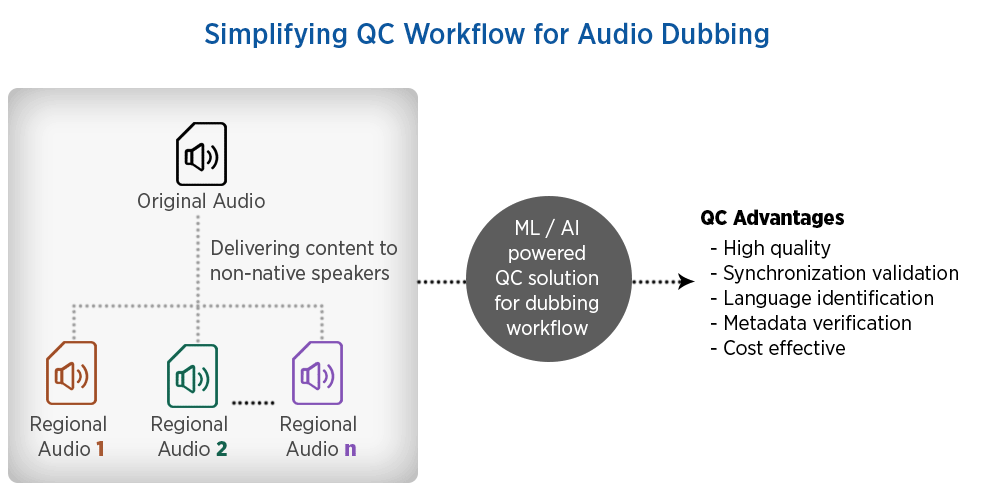

Recent advancements in ML and AI technologies have simplified the way that content creators perform quality control on audio dubbed tracks, automating the process of verifying languages and assuring synchronization.

There are several key capabilities content creators should look for in an ML/AI-powered QC solution for dubbing workflows in order to ensure the utmost efficiency, accuracy and quality of experience for viewers.

One important feature is the ability to verify dubbing packages with a complex structure. Oftentimes, a dubbing package consists of multiple MXF and .wav files as opposed to a single file. The MXF file contains the video tracks along with the original audio tracks, while the .wav files correspond to the multiple dubbed audio tracks. Sometimes the .wav files represent individual channels of a 5.1 audio track, or multiple audio tracks or channels are encapsulated in container formats instead of .wav files. The QC solution should be able to handle these package variations and verify the multiple dubbed audio tracks properly.

For audio dubbing, metadata verification is a must-have capability in a media QC solution. The system needs to verify the number of audio tracks or the channel configuration of multiple dubbed tracks, along with the duration of the original audio track compared with the dubbed audio tracks.
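The metadata checks described above can be sketched in a few lines of Python. This is only an illustrative sketch, not any vendor's implementation: the `AudioTrackInfo` structure, the `verify_dubbed_tracks` function and the 0.04-second duration tolerance (roughly one frame at 25 fps) are all assumptions made for the example, and the track details are presumed to have been read from the package already.

```python
from dataclasses import dataclass


@dataclass
class AudioTrackInfo:
    """Hypothetical summary of one audio track's metadata."""
    name: str
    channels: int       # e.g. 2 for stereo, 6 for 5.1
    duration_s: float   # track duration in seconds


def verify_dubbed_tracks(original, dubbed, expected_track_count,
                         expected_channels, tolerance_s=0.04):
    """Return a list of issues; an empty list means all checks passed."""
    issues = []
    # Check the number of dubbed tracks in the package.
    if len(dubbed) != expected_track_count:
        issues.append(
            f"expected {expected_track_count} dubbed tracks, found {len(dubbed)}")
    for track in dubbed:
        # Check the channel configuration of each dubbed track.
        if track.channels != expected_channels:
            issues.append(
                f"{track.name}: expected {expected_channels} channels, "
                f"found {track.channels}")
        # Compare the dubbed track's duration with the original's.
        if abs(track.duration_s - original.duration_s) > tolerance_s:
            issues.append(
                f"{track.name}: duration {track.duration_s:.2f}s differs "
                f"from original {original.duration_s:.2f}s")
    return issues
```

Returning a list of issues rather than a single pass/fail flag lets an operator see every problem in the package in one pass.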

Language identification is an integral component of a QC system, ensuring that each track carries the intended language. Over the years, machines have become more intelligent and computing power more affordable, making accurate, automated language detection a reality. If the content creator has access to a few hours of audio content in the target languages, it can be used to train the ML models for language prediction. Content creators can then verify that the language is correct by checking the predicted language against the metadata.
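As a rough illustration of the final step, checking a predicted language against the metadata, the sketch below assumes that some language identification model has already labeled short segments of the track with language codes. The model itself is out of scope here, and the `check_track_language` function, the majority-vote rule and the 0.8 agreement threshold are assumptions for the example rather than a description of any particular QC product.

```python
from collections import Counter


def check_track_language(segment_predictions, declared_language,
                         min_agreement=0.8):
    """Compare per-segment language predictions with the declared metadata.

    segment_predictions: list of language codes, one per analyzed segment,
    produced by any language identification model (assumed, not shown).
    Returns (ok, detected_language, agreement).
    """
    if not segment_predictions:
        return False, None, 0.0
    # Take the most frequently predicted language as the detected language.
    detected, votes = Counter(segment_predictions).most_common(1)[0]
    agreement = votes / len(segment_predictions)
    # Pass only if the detected language matches the metadata and enough
    # segments agree on it.
    ok = detected == declared_language and agreement >= min_agreement
    return ok, detected, agreement
```

Voting across segments makes the check less sensitive to a few segments dominated by music, effects or names borrowed from another language.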

During the audio dubbing process, content creators must make sure the video and dubbed tracks are synchronized. This can be challenging, as there is no way to map the correct audio sequence to any video scene with dialogue. However, the majority of video content contains black frames and color bars that are designed to help in meeting requirements like synchronization. The corresponding audio sequence for black frames and color bars is silence and test tone, respectively. It is therefore critical to choose a QC solution that can verify that black frames in the video track are accompanied by silence, and color bars by test tone, in the dubbed audio tracks.
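The audio side of this check can be sketched as follows, assuming the black-frame and color-bar segments have already been located in the video and the matching audio samples extracted as floats in the range -1.0 to 1.0. The function names, the 1 kHz tone frequency and the thresholds are assumptions for illustration only. Silence is detected with a simple RMS level, and the tone with the Goertzel algorithm, which measures how much of the signal's energy sits at one frequency.

```python
import math


def is_silence(samples, threshold=1e-3):
    """True if the RMS level of the samples is below the threshold."""
    if not samples:
        return True
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return rms < threshold


def tone_power_ratio(samples, sample_rate, freq_hz):
    """Fraction of signal energy at freq_hz (Goertzel algorithm)."""
    n = len(samples)
    total = sum(x * x for x in samples)
    if n == 0 or total == 0:
        return 0.0
    k = round(n * freq_hz / sample_rate)   # nearest DFT bin
    coeff = 2 * math.cos(2 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in samples:
        s0 = x + coeff * s1 - s2
        s2, s1 = s1, s0
    power = s1 * s1 + s2 * s2 - coeff * s1 * s2   # |X[k]|^2
    # A real signal has the same energy in bins k and n - k.
    return 2 * power / (n * total)


def audio_matches_video(segment_kind, samples, sample_rate, tone_hz=1000.0):
    """Check audio against a video segment already classified as
    'black' (expects silence) or 'bars' (expects test tone)."""
    if segment_kind == "black":
        return is_silence(samples)
    if segment_kind == "bars":
        return tone_power_ratio(samples, sample_rate, tone_hz) > 0.9
    raise ValueError(f"unknown segment kind: {segment_kind}")
```

A production system would likely also tolerate low-level noise in the "silent" segments and check the tone's level, which this sketch leaves out.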

Synchronization must also be present between the original and dubbed tracks. This is no easy feat, since the dialogue in the master and dubbed tracks is completely different. However, it’s likely that common background music or effects were used for both the master track and the dubbed track. These can be separated from the audio track using mechanisms like a band-pass filter.

Checking for loss of synchronization between the background beds of the dubbed audio tracks and the original audio track can easily be performed by a QC system with ML and AI technology. The challenge is ensuring a proper separation of the background bed from the audio tracks. Once the beds are separated, one way to check synchronization is to compare loudness curves, looking for mismatches between the loudness values of the original and dubbed tracks over time.
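A loudness-curve comparison can be sketched as below, assuming the background beds have already been separated and are available as lists of float samples. This is a simplified illustration: `loudness_curve` uses a plain windowed RMS level in dB as a stand-in for a standards-based loudness measurement, and `best_offset` is a hypothetical helper that slides one curve against the other to find the shift with the smallest mismatch.

```python
import math


def loudness_curve(samples, window):
    """Level in dB for each non-overlapping window of samples
    (plain RMS, not a standards-compliant loudness measurement)."""
    curve = []
    for start in range(0, len(samples) - window + 1, window):
        block = samples[start:start + window]
        mean_sq = sum(x * x for x in block) / window
        # The small constant avoids log10(0) on digital silence.
        curve.append(10 * math.log10(mean_sq + 1e-12))
    return curve


def best_offset(ref_curve, test_curve, max_shift):
    """Find the shift (in windows) of test_curve relative to ref_curve
    that minimizes the mean absolute dB difference.

    Returns (shift, mismatch_db). A nonzero shift, or a large mismatch
    at the best shift, suggests the tracks are out of sync.
    """
    best_score, best_shift = float("inf"), 0
    for shift in range(-max_shift, max_shift + 1):
        diffs = [abs(r - test_curve[i + shift])
                 for i, r in enumerate(ref_curve)
                 if 0 <= i + shift < len(test_curve)]
        if diffs:
            score = sum(diffs) / len(diffs)
            if score < best_score:
                best_score, best_shift = score, shift
    return best_shift, best_score
```

With a window of, say, one video frame's worth of samples, the returned shift can be read directly as a frame offset between the original and dubbed beds.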

CONCLUSION

Through audio dubbing, content creators can reach audiences all around the world, providing content in their local language without the limitations of subtitles. Automating QC dubbing workflows speeds up this process immensely, while also introducing increased accuracy. When ML and AI technologies are added to the workflow, dubbed packages can be created even more rapidly, with reduced manual intervention. It’s a win-win situation for content creators and viewers. Content creators can expand their brand into new regions of the world, and viewers are assured a high-quality television experience in their native language.

Niraj Sinha is the principal engineer at Interra Systems.
