Taking Steps to Rebuild RAID

Anyone with a high-performance storage system of any scale, whether for a video playout server, a play-to-air system or a nonlinear editing solution, has probably experienced this: a disk drive fails outright, or the system posts a warning of “imminent drive failure: change drive ooooX3Hd immediately!”

Some may procrastinate and risk the consequences. Others heed the warning and change the failing drive. Still others sit back, knowing they planned ahead by buying a hot spare and that the drive controller will take over without human intervention.

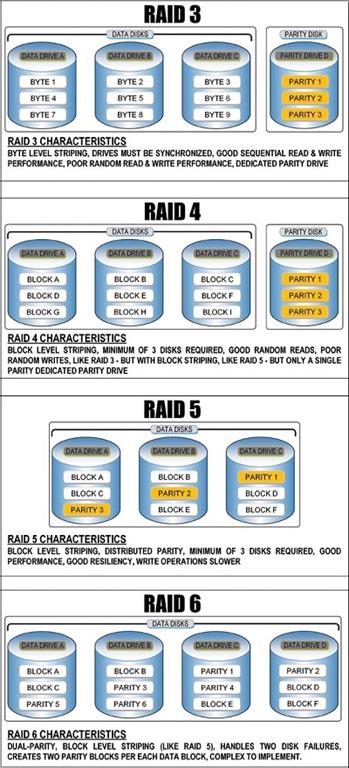

Fig. 1: Characteristics of selected Redundant Array of Independent Disks (RAID) levels

RESILIENCY TO DATA LOSS

When a hard drive in a RAID configuration fails, there is a period during which the system's fault tolerance, or resiliency to data loss, decreases. Depending on the RAID level or protection scheme in place, the risk may range from moderate to serious.

The primary concern is that another drive in the same array fails before protection is restored. In a single-parity array (such as RAID 3, 4 or 5), that second loss compromises the entire storage system and renders all the data in that LUN, array or possibly the whole system useless.
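The exposure window can be expressed as a simple count of how many more failures an array can absorb. The sketch below assumes one RAID group per array and uses the commonly cited tolerances for the levels named in this article (one failure for RAID 3, 4 and 5; two for RAID 6); the table and function names are illustrative, not taken from any controller.

```python
# Illustrative table: drive failures each RAID level can absorb before data loss,
# assuming a single RAID group.
TOLERATED_FAILURES = {
    "RAID 3": 1,
    "RAID 4": 1,
    "RAID 5": 1,
    "RAID 6": 2,
}


def array_survives(level, failed_drives):
    """True if the array still holds all of its data after `failed_drives` failures."""
    return failed_drives <= TOLERATED_FAILURES[level]


def remaining_margin(level, failed_drives):
    """How many further failures the array can absorb (0 means no protection left)."""
    return max(TOLERATED_FAILURES[level] - failed_drives, 0)


# A RAID 5 set with one dead drive still has its data but no margin left,
# while a RAID 6 set in the same state can still lose one more drive.
print(array_survives("RAID 5", 1), remaining_margin("RAID 5", 1))
print(array_survives("RAID 6", 1), remaining_margin("RAID 6", 1))
```

A margin of zero is the "dicey" period described here: the array is running, but the next failure costs data.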

When the protection provided by either the dedicated parity drive (as in RAID 3 or RAID 4) or the secondary parity set (as in dual-parity RAID 6) is no longer available, the period until the array is rebuilt and back online at a 100-percent service level can be dicey at best.

When a failed or failing drive is detected, the administrator or maintenance technician must first replace the bad drive, which in turn triggers a process called the “RAID rebuild.” Rebuilding is the data reconstruction process: it mathematically regenerates all the data and its parity complement so that full protection (with fault tolerance) is restored, returning the system's resiliency to its “normal” state.
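For the single-parity levels (RAID 3, 4 and 5), the "mathematics" of reconstruction is a byte-wise exclusive OR (XOR): the parity block is the XOR of the data blocks in a stripe, so XOR-ing all the surviving blocks regenerates whichever one is missing. The minimal sketch below shows that for one stripe held in memory; RAID 6's second parity set uses a different code and is not covered here, and the helper names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def compute_parity(data_blocks):
    """Single parity: XOR of every data block in the stripe."""
    return xor_blocks(data_blocks)


def reconstruct_missing(surviving_blocks):
    """Regenerate the one missing block of a stripe from all surviving blocks
    (the remaining data blocks plus the parity block)."""
    return xor_blocks(surviving_blocks)


data = [b"\x10\x20", b"\x0f\x01", b"\xaa\x55"]
parity = compute_parity(data)
rebuilt = reconstruct_missing([data[0], data[2], parity])  # pretend data[1] was lost
print(rebuilt == data[1])
```

The same XOR works whether the lost block held data or parity, which is why one routine can restore either.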

Sometimes, when the controller detects data-check or other errors, the drive array may enter a reconfirmation period in which checksum and/or parity algorithms analyze each drive track by track, sector by sector and block by block. The parity or checksums are then compared and, where needed, rewritten, and the array is requalified to a stable, active state.
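A parity-based reconfirmation pass can be pictured as recomputing each stripe's parity and comparing it with what is stored. The sketch below assumes a hypothetical in-memory list of stripes and single XOR parity; when `repair=True` it rewrites the stored parity, which presumes the data blocks are the trusted copy. Real controllers apply their own policies for deciding which copy to trust.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def scrub(stripes, repair=False):
    """Verify stored parity against parity recomputed from each stripe's data.

    stripes: list of (data_blocks, parity_block) tuples (hypothetical layout).
    Returns the indices of stripes whose parity did not match. With repair=True,
    mismatched parity is rewritten in place from the data blocks.
    """
    mismatched = []
    for i, (data, parity) in enumerate(stripes):
        expected = xor_blocks(data)
        if expected != parity:
            mismatched.append(i)
            if repair:
                stripes[i] = (data, expected)
    return mismatched


stripes = [([b"\x01", b"\x02"], b"\x03"), ([b"\x04", b"\x08"], b"\x00")]
print(scrub(stripes))               # stripe 1 has bad parity
print(scrub(stripes, repair=True))  # report and rewrite it
print(scrub(stripes))               # a second pass now finds nothing
```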

STEPS TO RECOVERY

Restoring RAID fault tolerance involves a number of steps. In one example, should a disk fail, the RAID controller attempts to rebuild the failed drive's data onto a spare drive, using the surviving drives, while the failed one is replaced. Using parity data and RAID algorithms, which vary by RAID level, the parity is then reassembled either onto the dedicated parity drive (as in RAID 3 or RAID 4) or distributed across all the drives (as in RAID 5 or RAID 6). See Fig. 1 for selected RAID characteristics.
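The difference between a dedicated parity drive and distributed parity is simply where the parity block lands in each stripe. The sketch below maps that placement for RAID 4 (always the last drive) and for RAID 5 using a left-rotating pattern; that rotation is one common convention and is an assumption here, since controllers may use other layouts. RAID 6, which adds a second parity block per stripe, is left out for brevity.

```python
def parity_drive(level, stripe, num_drives):
    """Index of the drive holding parity for a given stripe."""
    if level in ("RAID 3", "RAID 4"):
        return num_drives - 1  # dedicated parity drive: always the last one
    if level == "RAID 5":
        # Assumed left rotation: parity moves one drive to the left per stripe.
        return (num_drives - 1 - stripe) % num_drives
    raise ValueError(f"unsupported level: {level}")


def layout(level, num_stripes, num_drives):
    """Grid of 'D' (data) and 'P' (parity) cells, one row per stripe."""
    rows = []
    for s in range(num_stripes):
        p = parity_drive(level, s, num_drives)
        rows.append(["P" if d == p else "D" for d in range(num_drives)])
    return rows


for level in ("RAID 4", "RAID 5"):
    print(level)
    for row in layout(level, 4, 4):
        print("  " + " ".join(row))
```

With four drives, RAID 4 puts every parity block on drive 3, while this RAID 5 layout places parity on drives 3, 2, 1 and 0 for stripes 0 through 3, spreading parity writes across all spindles.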

In other configurations, should one of the main data drives fail with no active hot spare available, a new HDD must be installed. The missing data is then reconstructed onto the new drive from the data on the remaining drives, combined with parity from either a dedicated parity drive or the parity blocks distributed across the array. Either way, the risk stays elevated throughout the rebuild, until the new drive is brought online with all its data and parity restored.
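Rebuilding an entire replacement drive repeats the per-stripe reconstruction for every stripe on the array. The sketch below assumes a hypothetical in-memory array, `drives[d][s]`, holding the block on drive `d` in stripe `s`, with single XOR parity. Because the XOR of all surviving blocks equals the missing one regardless of whether it held data or parity, the same loop covers a dedicated parity drive (RAID 3/4) and distributed parity (RAID 5).

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_drive(drives, failed):
    """Reconstruct every block of drive `failed` for a replacement drive.

    drives: list of per-drive block lists (hypothetical stand-in for the array);
    the contents of drives[failed] are ignored. Every surviving drive is read
    in full, one stripe at a time.
    """
    num_stripes = len(drives[(failed + 1) % len(drives)])
    replacement = []
    for s in range(num_stripes):
        survivors = [drives[d][s] for d in range(len(drives)) if d != failed]
        replacement.append(xor_blocks(survivors))
    return replacement


# Four drives, two stripes; the blocks in each stripe XOR to zero.
drives = [[b"\x01", b"\x70"], [b"\x02", b"\x10"], [b"\x04", b"\x20"], [b"\x07", b"\x40"]]
print(rebuild_drive(drives, 1) == drives[1])
```

The loop also makes the cost visible: restoring one drive means reading every stripe from every other drive, which is where the elevated risk and reduced performance during a rebuild come from.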

Large-scale drive arrays, those with hundreds of spindles (HDDs), usually have sufficient overhead, intelligence and processing bandwidth to compensate for certain faults. Those that employ intelligent RAID controllers can also be proactive. Should the controller suspect or detect that a hard drive is about to fail, it may begin a RAID rebuild to a (hot) standby drive, or signal the user either to replace the failing drive or to add another drive in an available slot, so that the rebuild can start before an actual failure occurs.
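The proactive behavior described above amounts to a small decision policy. The sketch below is purely illustrative: the health counters and thresholds are hypothetical stand-ins for whatever vendor-specific or SMART attributes a real controller monitors, and the returned actions simply mirror the three options listed in the text.

```python
from dataclasses import dataclass


@dataclass
class DriveHealth:
    # Hypothetical counters; real controllers read vendor/SMART-specific attributes.
    reallocated_sectors: int
    read_errors: int


# Illustrative thresholds only; not taken from any real controller.
REALLOCATED_LIMIT = 100
READ_ERROR_LIMIT = 10


def suspect_failing(health):
    """Flag a drive whose error counters cross either (hypothetical) threshold."""
    return (health.reallocated_sectors >= REALLOCATED_LIMIT
            or health.read_errors >= READ_ERROR_LIMIT)


def proactive_action(health, hot_spare_available, empty_slot_available):
    """Pick an action before the drive actually fails, in order of preference."""
    if not suspect_failing(health):
        return "monitor"
    if hot_spare_available:
        return "rebuild to hot spare"
    if empty_slot_available:
        return "ask user to add drive in empty slot"
    return "ask user to replace failing drive"


print(proactive_action(DriveHealth(150, 0), hot_spare_available=False, empty_slot_available=True))
```

Ordering the checks this way favors the option that needs no human intervention, which matches the appeal of a hot spare described at the start of the article.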

FAILURES DURING THE REBUILD TIME

One of RAID's drawbacks, when in failure mode or during a rebuild, is that the performance of certain applications or processes may suffer from increased system latency. System throughput (otherwise known as bandwidth) may be reduced because (a) not all the drives are functioning, and (b) the rebuild process consumes I/O bandwidth as it reads blocks from the remaining active drives and writes the reconstructed data onto the new or replacement drive.
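Point (a) has a concrete cost in a single-parity array: a block stored on a healthy drive takes one drive read, but a block that lived on the failed drive must be regenerated from every other block in its stripe. The sketch below models one stripe in memory and returns both the block and the number of drive reads it took; the function name and layout are hypothetical.

```python
from functools import reduce
from typing import Optional


def degraded_read(stripe, target, failed: Optional[int]):
    """Return (block, drive_reads) for block `target` of one single-parity stripe.

    stripe: list of per-drive blocks (data and parity); stripe[failed] is
    treated as unreadable. failed=None means the array is healthy.
    """
    if target != failed:
        return stripe[target], 1
    survivors = [blk for d, blk in enumerate(stripe) if d != failed]
    block = bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*survivors))
    return block, len(survivors)


stripe = [b"\x01", b"\x02", b"\x04", b"\x07"]  # last block is XOR parity
print(degraded_read(stripe, 2, None))  # healthy: one read
print(degraded_read(stripe, 2, 2))     # drive 2 failed: three reads plus XOR
```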

The reduced performance can be especially noticeable when the array is relatively small (i.e., when it has few spindles) or when the individual HDDs are very large.
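Why small arrays feel it more can be seen in a deliberately simple model. It assumes identical drives, one failed drive, and a fixed amount of each surviving drive's bandwidth diverted to rebuild reads. The drive counts and MB/s figures below are hypothetical, and the model ignores caching, controller limits and write traffic.

```python
def host_bandwidth_fraction(num_drives, per_drive_mbps, rebuild_mbps_per_drive):
    """Toy model: share of healthy aggregate bandwidth left for applications
    while a single-parity array rebuilds one failed drive."""
    healthy = num_drives * per_drive_mbps
    degraded = (num_drives - 1) * (per_drive_mbps - rebuild_mbps_per_drive)
    return degraded / healthy


# Hypothetical drives delivering 150 MB/s, each giving 50 MB/s to the rebuild.
for n in (4, 24):
    print(n, round(host_bandwidth_fraction(n, 150, 50), 2))
```

Under these assumptions a 4-drive set keeps about 50 percent of its healthy bandwidth, while a 24-drive set keeps about 64 percent, because losing one spindle removes a larger share of a small array.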

As hard disk capacities continue to increase, rebuilds take longer and longer. In some cases, for drives larger than one to two terabytes, a rebuild can last from several hours to several days. During this period, latency and the risk of another failure both increase, further compromising performance and usability. This is one reason, among others, that high-performance storage solutions tend to use smaller-capacity HDDs (e.g., 300 GB to 750 GB) and may put many more drives into a single chassis or array.
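The link between capacity, rebuild time and risk can be estimated with back-of-the-envelope arithmetic. The sketch below assumes a constant sustained rebuild rate and independent, exponentially distributed drive failures with a given mean time to failure (MTTF). The rates and MTTF are hypothetical inputs, and the independence assumption is optimistic for drives of the same age and batch.

```python
import math


def rebuild_hours(capacity_gb, rebuild_mb_per_s):
    """Time to rewrite a whole replacement drive at a sustained rate (1 GB = 1000 MB)."""
    return capacity_gb * 1000 / rebuild_mb_per_s / 3600


def second_failure_probability(surviving_drives, hours, mttf_hours):
    """Chance that at least one surviving drive fails during the rebuild window,
    assuming independent, exponentially distributed failures."""
    return 1 - math.exp(-surviving_drives * hours / mttf_hours)


for capacity_gb in (300, 2000):
    t = rebuild_hours(capacity_gb, 50)  # hypothetical 50 MB/s rebuild rate
    p = second_failure_probability(7, t, 1_000_000)  # hypothetical MTTF
    print(f"{capacity_gb} GB: {t:.1f} h, risk {p:.2e}")
```

At 50 MB/s, a 300 GB drive rebuilds in under two hours while a 2 TB drive takes about 11 hours; throttled to 10 MB/s to protect application traffic, the same 2 TB rebuild stretches past two days, and the exposure grows in proportion.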

These issues and considerations become part of the decision process when choosing a storage solution. For the more advanced video server or media-centric products built for mission-critical operations, many manufacturers have already taken these conditions into account and provide sufficient fault tolerance or resiliency to “ride through” most commonplace maintenance, upkeep and failure situations.

Another solution uses flash memory or, more precisely, solid-state drives (SSDs), either to supplement the array (as a cache or secondary storage tier) or to replace the hard disk drives altogether. I’ll explore that topic in greater depth at another time.

Karl Paulsen is a SMPTE Fellow and chief technology officer at Diversified. For more about this and other storage topics, read his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@diversifiedus.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.