The Evolution of Digital Content Delivery

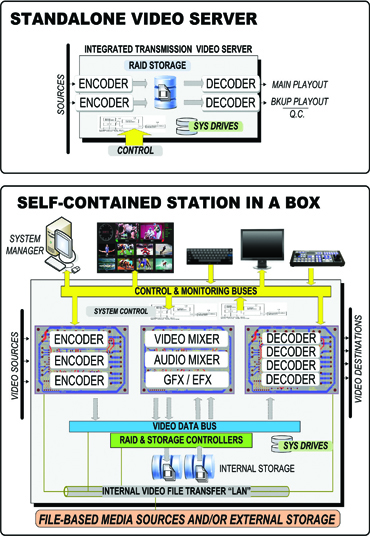

Conceptual architectures of an early standalone video server used for transmission, and its evolution to the modern self-contained “station in a box” built upon commercial IT-server hardware, internal and external storage, third-party video I/O cards and the integration of graphics, mixers and effects into a single platform.

It was hard not to notice the dynamics of the broadcast and content creation industries at this year’s NAB Show. To picture what I mean, look at how content has been electronically captured, stored, transferred and reproduced over the past 10–15 years. Next, look at the types of products that were revealed or expanded upon at NAB Show in each of these categories.

It doesn’t take long to see that devices such as the “video server” have not only solidified into a commodity, but that the principles and uses of moving isochronous video images stored as digital files have touched a multitude of production, transmission and archival devices.

Many of the capabilities once dedicated to discrete boxes are now integral components of switchers, clip players and graphics generators.

It’s fair to say that today’s video server functionality, per se, can be primarily relegated to transmission purposes. These hardware-centric devices are built to deliver content to systems, such as master control for playout to air, with parameters that support mission critical or “must not fail” performance.

Such performance is achieved by providing highly reliable components, often in a redundant configuration, attached to optimally tailored storage platforms controlled by precision automation and timing devices that can provide 24-hour x 365-day delivery of assets for continued generation of revenue for broadcasters and content delivery organizations.

NEXT-GEN VIDEO SERVERS

Over the past dozen or so years, these technologies have been refined and massaged into a specific set of components that are essentially now cast in stone as to what they must do and how they must do it. Nonetheless, these high-performance transmission-centric video server platforms remain quite expensive.

In today’s rapidly changing media environments, the financial sides of organizations are constantly looking for alternatives that cut costs without risking a loss of revenue.

This is where the next generation of video server surfaces. The concept of a “station in a box” (SiAB) or “channel in a box” (CiAB) grew out of a desire to add broadcast channels incrementally as DTV subchannels (the “dot” channels) started to emerge.

Layer on the plethora of specialty channels on cable and satellite and you create another dilemma: Can one afford to add secondary channels that retain the reliability and look of the main channels?

The answer became obvious, and the manufacturers stepped up to the plate by condensing the two racks of equipment for traditional main channels to a single chassis that contained nearly all the elements of a broadcast station’s master control playout.

The SiAB concept drove the mainframe-like video server into a much smaller form factor. What once was a set of encoders, system directors, decoders and storage was now condensed down to a few circuit boards and a couple of hard drives. Content could still be stored nearline on the central storage system (where all the other content resided for the main channel) and then migrated to online storage for each SiAB.

The elements that manage this content are more akin to today’s IT-servers, coupled with station automation or traffic instruction/playlist generators that make the decisions for upcoming and current playback to air. These IT-servers can now include branding elements, switching elements and other necessary system components, such that they essentially require only an Ethernet connection and an SDI output BNC (plus software) to interface to the remainder of the facility.

MOVING FORWARD

This brings us to the next dynamic of change: how content gets from origination to storage and out to playout. In the early days, video servers had integral and dedicated disk drives that needed Fibre Channel (FC) connectivity in order to support the bit rates and bandwidth requirements of a transmission system (including ingest, clip preparation, QC and playout).

As storage increased, more complex FC connections, switches and storage arrays were required. As the number of channels delivering or ingesting content increased, so did the number of drives, both for capacity and for bandwidth. Employing a SAN now became imperative, which escalated size, power, cooling and cost.
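The point that drive counts grew "both for capacity and for bandwidth" can be illustrated with a rough sizing sketch. Everything here is an assumption made for illustration: the function name, the per-channel bit rate, the drive capacity and the sustained drive throughput are hypothetical figures, and the calculation ignores RAID overhead, mirroring and file system losses.

```python
def drives_needed(channels, mbps_per_channel, hours_stored,
                  drive_capacity_gb, drive_throughput_mbps):
    """Return (drives for capacity, drives for bandwidth, drives required).

    All inputs are integers; bit rates are in megabits per second and
    drive capacity is in decimal gigabytes. Hypothetical sizing helper.
    """
    # Capacity: total megabits of stored content vs. megabits per drive.
    stored_megabits = hours_stored * 3600 * mbps_per_channel
    drive_megabits = drive_capacity_gb * 8000  # 1 GB = 8,000 megabits
    for_capacity = -(-stored_megabits // drive_megabits)  # ceiling division

    # Bandwidth: assume every channel reads or writes at the same time.
    total_mbps = channels * mbps_per_channel
    for_bandwidth = -(-total_mbps // drive_throughput_mbps)

    # The array must satisfy whichever requirement is larger.
    return for_capacity, for_bandwidth, max(for_capacity, for_bandwidth)


# A large library with few channels is capacity-bound...
library_heavy = drives_needed(8, 50, 1000, 500, 200)
# ...while many channels on a small library is bandwidth-bound.
channel_heavy = drives_needed(40, 50, 100, 500, 200)
print(library_heavy, channel_heavy)
```

With these assumed figures, the first case is driven by capacity and the second by bandwidth, which is why adding channels could force more drives even when the library itself had not grown.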

To maintain reliability, two sets of SANs with mirrored playout subsystems became a necessity. Managing all these components eventually became more than one person could handle, so staff increased, as did the need for specific expertise in system administration, storage and video servers.
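Why mirroring became worth its cost can be seen in a back-of-the-envelope availability estimate. This is a simplified sketch, not a reliability model for any real system: it assumes the mirrored systems fail independently and that service continues as long as any one copy is up, and the 99 percent figure is purely hypothetical.

```python
def mirrored_availability(single, copies=2):
    """Availability of `copies` independent systems where any one can carry the load."""
    # Service is lost only if every copy is down at the same time.
    return 1.0 - (1.0 - single) ** copies


def downtime_hours_per_year(availability):
    """Expected hours off air in a 24-hour x 365-day year."""
    return (1.0 - availability) * 24 * 365


single = 0.99  # hypothetical availability of one SAN plus playout chain
mirrored = mirrored_availability(single)
print(downtime_hours_per_year(single), downtime_hours_per_year(mirrored))
```

Under these assumptions a single 99 percent chain would be off air for roughly 88 hours a year, while a mirrored pair would be down for under an hour. Real failures are rarely fully independent (shared power, shared automation), so the actual gain is smaller.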

Moving forward, as network speeds increased beyond one gigabit per second and enterprise-class hard drive technologies advanced, alternatives again began to surface that attempted to mitigate the size, power, cooling, cost and staffing issues described above.

Once again, the concept of an appliance (as a single dedicated device) began to appear in the form of “VTR-like” replacements. Video server manufacturers realized that a single chassis could contain sufficient input-output channels, plus storage and codec flexibility, to enable a self-contained device to serve as the ingest and playout system for a single channel with the expected reliability and performance of a mainstream video server architecture. It could do so at a respectable cost that incorporated modularity for repair, maintenance and upgrades, and these video server “appliances” now include networked, attached storage like their big brother counterparts.

Just as video servers have evolved, so has the content management and storage environment. Moving from SD to HD threw challenges at the marketplace, especially for the storage systems that now had to support four to six times the capacity and two to three times the I/O bandwidth.

Fortunately, storage technologies and capacities increased (with costs decreasing) faster than video codec and video server technologies did. This allowed manufacturers and users to benefit from the new HD requirements without the paralyzing effects witnessed at the dawn of the digital broadcast transition.

Today, we’re witnessing new compression formats that compound those same storage and delivery issues.

The added requirements of long-term preservation (protection, archive, legal and interchange) have again altered the dynamics of the video storage environment. The biggest changes we expect to see in the upcoming few years are in this arena. As users employ tape (such as LTO) to store content offline, they will face additional challenges such as format migration and media protection against degradation.

Decisions on what to keep, where to keep it and for how long will become monstrous tasks, if they are not already. And this is only the tip of the iceberg: metadata, that dirty word, has yet to be effectively addressed from a universal, interoperable perspective.

So we’ll end here with that takeaway thought about our industry’s evolution.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his current book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.