The future of imaging technology

Since its introduction in broadcast cameras in 1987, CCD technology has been used in nearly all typical broadcast applications. Most of today’s camera systems used for these applications are still based on three 2/3in CCD imagers. This imager size, in combination with the RGB prism beam-splitter technology, has been the de facto standard for more than 25 years. Even when SD cameras were replaced by HD cameras, which offer more than four times the pixel count, this standard did not change.

There are good reasons for this: Some are economic, while others are based on technology and physical factors. For instance, a 1920 x 1080 2/3in HD imager has a pixel size of 5µm x 5µm, which is approximately one quarter of the pixel area of an SD imager. That is why the first generation of HD cameras was approximately 1 to 1.5 f-stops less sensitive than the SD cameras, while also providing an S/N ratio approximately 6dB to 9dB lower. This gap has been closed thanks to improvements in imaging technology, combined with the implementation of digital noise reduction systems.

The latest HD cameras now offer sensitivity and S/N ratios that compare closely to the last generation of SD cameras from more than 10 years ago. However, this is only true of the 1080i and 720p formats. Changing to any of the 1080p formats will lower the sensitivity of the CCD camera by 1 f-stop, or 6dB, again. Why is that so? CCD imagers have always had the advantage that the signal charges from two adjacent pixels could be added to each other in the vertical shift register. That means a CCD imager that reads out an interlaced format has an improved sensitivity of 6dB, compared to a full progressive read out.
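The relationship between f-stops and decibels used above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming the usual video convention that a signal ratio is expressed as 20·log10 of that ratio; the function names are illustrative and do not come from any camera vendor's software.

```python
import math

def stops_to_db(stops: float) -> float:
    """Convert a sensitivity change in f-stops to decibels.

    Assumes each stop doubles (or halves) the signal and that the
    video convention dB = 20 * log10(signal ratio) applies.
    """
    signal_ratio = 2.0 ** stops
    return 20.0 * math.log10(signal_ratio)

def summed_pixels_gain_db(pixels_summed: int) -> float:
    """Signal gain from adding the charges of several pixels together."""
    return 20.0 * math.log10(pixels_summed)

# One stop and one and a half stops, as quoted for early HD cameras.
print(f"1.0 stop  = {stops_to_db(1.0):.2f} dB")
print(f"1.5 stops = {stops_to_db(1.5):.2f} dB")

# Adding two adjacent pixels in the vertical shift register (interlaced readout).
print(f"2 pixels summed = {summed_pixels_gain_db(2):.2f} dB")
```

Under these assumptions, summing two adjacent pixels gives the same figure as one f-stop, which is why giving up the interlaced readout costs a CCD camera roughly 6dB.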

Today’s interlaced formats are now only used for broadcast applications, and they will soon be replaced by progressive formats. The demand for 1080p, 4K and even 8K production is increasing. These are all progressive formats, so the sensitivity advantage that CCD imagers gain from interlaced readout will no longer apply.

CMOS

In CMOS imagers, the signal charges are converted into a signal voltage inside the pixel. Therefore, they must always work in a progressive mode, as they cannot add signal charges from two adjacent pixels to one another. If needed, the interlaced formats can be generated inside the camera’s signal processing from the full progressive signals of the imager. A camera with a CMOS imager will have identical sensitivity in the interlaced and progressive formats. This is one of the reasons why CMOS technology has fully replaced CCD technology in all applications other than broadcast.

In the past, CMOS imaging technology was not accepted for broadcast applications because of the artifacts it produced with fast movements and light flashes, the so-called “rolling shutter” effect. This was caused by pixels starting and ending their exposure at slightly different times. The problem has been solved in the latest CMOS imagers by adding a storage node inside every pixel. They now react to fast movements and light flashes identically to CCD imagers with their “global shutters.”

Limiting factors

So how will this affect new formats such as 1080p, 4K and 8K? As previously explained, with the 1080p format, CMOS imagers offer a solution that not only keeps the same imager size, but also provides a sensitivity and S/N ratio at least identical to what the best CCD cameras can achieve only in the 1080i formats. Therefore, there is no need to change the imager’s size or the principle of the RGB prism beam-splitter technology when going from 1080i to 1080p production. One advantage of this is that all the current 2/3in HD lenses, which are available in large numbers and in a wide range of sizes and zoom factors, can be used without any limitations. This is obviously not the case if a format with a higher pixel count (such as 4K) has to be produced.

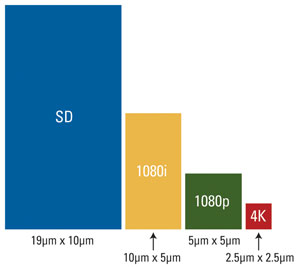

Figure 1. For a 2/3in imager, pixel size decreases as resolution increases, diminishing sensitivity and limiting saturation levels.

4K requires double the number of pixels in both the horizontal and vertical directions. If they are put inside a 2/3in imager, the size of each pixel would be reduced from 5µm x 5µm to 2.5µm x 2.5µm (in other words, the area of each pixel would be a quarter of its previous value). (See Figure 1.) Because of the smaller pixel size, the sensitivity would be at least four times lower too, which is not acceptable for most applications. The smaller pixel size would also limit the saturation level, which directly influences the dynamic range of cameras.
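The pixel-size figures above follow from dividing the imager's active area by the pixel count. Here is a rough sketch of that arithmetic, assuming an active 16:9 image area of about 9.6mm x 5.4mm for a 2/3in imager (an approximate value chosen to match the 5µm figure in the text) and assuming that sensitivity scales with pixel area. The 3840 x 2160 and 7680 x 4320 pixel counts are the doubled and quadrupled HD rasters and are used here purely for illustration.

```python
import math

# Assumption: approximate active 16:9 image area of a 2/3in imager, in micrometres.
ACTIVE_WIDTH_UM = 9600.0
ACTIVE_HEIGHT_UM = 5400.0

def pixel_pitch_um(h_pixels: int, v_pixels: int) -> tuple:
    """Return the (horizontal, vertical) pixel pitch in micrometres."""
    return ACTIVE_WIDTH_UM / h_pixels, ACTIVE_HEIGHT_UM / v_pixels

def pixel_area_um2(h_pixels: int, v_pixels: int) -> float:
    """Return the area of one pixel in square micrometres."""
    width, height = pixel_pitch_um(h_pixels, v_pixels)
    return width * height

def stops_lost(from_format: tuple, to_format: tuple) -> float:
    """Estimate the sensitivity loss in f-stops, assuming sensitivity
    is proportional to pixel area (a simplifying assumption)."""
    ratio = pixel_area_um2(*from_format) / pixel_area_um2(*to_format)
    return math.log2(ratio)

HD = (1920, 1080)
UHD_4K = (3840, 2160)
UHD_8K = (7680, 4320)

for name, fmt in (("HD", HD), ("4K", UHD_4K), ("8K", UHD_8K)):
    pitch_h, pitch_v = pixel_pitch_um(*fmt)
    print(f"{name}: {pitch_h:.2f} x {pitch_v:.2f} um, "
          f"{stops_lost(HD, fmt):.1f} stops below HD")
```

With these assumptions, a 4K raster on a 2/3in imager ends up two f-stops below HD, consistent with the "at least four times lower" sensitivity described above.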

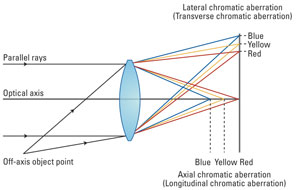

Figure 2. There are two kinds of chromatic lens aberrations: axial (longitudinal) and lateral (transverse). Axial aberration produces different optimum focal points depending on the wavelength of the light. Nothing can be done on the camera side to reduce this effect; the lens must be optimized to reduce it. Lateral aberration manifests as color fringing toward the sides of an image. This effect can be corrected if the camera knows the amount of error at the actual lens position.

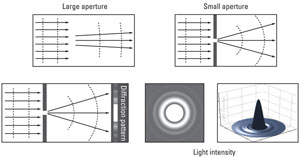

The camera manufacturing industry has taken more than a decade to compensate for the comparatively lower sensitivity of the smaller HD pixels and a similar time frame may be required to compensate for the smaller pixel size of a 4K camera. There are other limiting factors relating to the resolution and sharpness of the lens. The first is caused by the different aberration effects, which are mainly visible at lower f-stops, while the second is caused by the diffraction effects, which are mainly visible at higher f-stops. (See Figures 2 and 3.) As the pixels become smaller, the resolution demand of the lens becomes greater, and the aberration and diffraction effects could limit the usable iris range to an unacceptable level.

Figure 3. Diffraction effects are seen mainly at higher f-stops. As pixels on an imager become smaller, the resolution demand of the lens becomes greater. Aberration and diffraction effects could limit the usable iris range.
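To get a feel for how diffraction interacts with pixel size, one common rule of thumb compares the diameter of the Airy disk (about 2.44 x wavelength x f-number) with the pixel pitch. The sketch below is only an illustration under that assumption; the 550nm green wavelength and the "Airy disk equals one pixel" criterion are chosen for the example and are not taken from the article or from any lens specification.

```python
def airy_disk_diameter_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Approximate diameter of the diffraction (Airy) disk in micrometres.

    Uses the standard approximation d = 2.44 * wavelength * f-number.
    The default wavelength of 0.55 um (green light) is an assumption.
    """
    return 2.44 * wavelength_um * f_number

def f_number_matching_pitch(pixel_pitch_um: float, wavelength_um: float = 0.55) -> float:
    """F-number at which the Airy disk is as wide as one pixel.

    This is a rough, illustrative threshold, not a hard resolution limit.
    """
    return pixel_pitch_um / (2.44 * wavelength_um)

for pitch in (5.0, 2.5):
    print(f"{pitch} um pixel: Airy disk matches the pixel at about "
          f"f/{f_number_matching_pitch(pitch):.1f}")
```

Halving the pixel pitch halves the f-number at which this threshold is reached, which illustrates why smaller pixels narrow the usable iris range from the high f-stop side.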

So what happens if the pixel size remains (approximately) 5µm x 5µm, but the imagers increase in size? Although this would not create the same limitations in terms of sensitivity, a larger imager requires a lens with a longer focal length to cover the same angle of view, which reduces the depth of field. This is an effect that many broadcasters want to use for cinematography applications, but it is not necessary or even desirable in many instances, especially in live sports and entertainment productions. There are other questions to address in terms of the necessary size, weight and cost of these lenses, and the maximum zoom range that can be achieved. The cost of the three large imagers and the much larger prism beam-splitter could also prove to be limiting factors for a full 4K RGB camera system.
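The link between imager size, focal length and depth of field can be sketched with thin-lens approximations. The example below assumes a 9.6mm-wide 2/3in imager and a hypothetical 24mm-wide large imager, a circle of confusion that scales with imager width (width/1500 is an arbitrary illustrative choice), and the simplified depth-of-field formula 2·N·c·s²/f², which only holds for subject distances well below the hyperfocal distance.

```python
import math

def focal_length_mm(imager_width_mm: float, horizontal_fov_deg: float) -> float:
    """Focal length needed to cover a given horizontal angle of view."""
    half_angle = math.radians(horizontal_fov_deg) / 2.0
    return imager_width_mm / (2.0 * math.tan(half_angle))

def approx_depth_of_field_mm(focal_mm: float, f_number: float,
                             coc_mm: float, distance_mm: float) -> float:
    """Simplified total depth of field: 2 * N * c * s^2 / f^2.

    Only a rough approximation, valid well inside the hyperfocal distance.
    """
    return 2.0 * f_number * coc_mm * distance_mm ** 2 / focal_mm ** 2

FOV_DEG = 40.0          # same framing for both cameras (assumed)
F_NUMBER = 4.0          # same iris setting (assumed)
DISTANCE_MM = 5000.0    # subject at 5m (assumed)

for name, width_mm in (("2/3in", 9.6), ("large imager", 24.0)):
    focal = focal_length_mm(width_mm, FOV_DEG)
    coc = width_mm / 1500.0  # assumed circle of confusion
    dof = approx_depth_of_field_mm(focal, F_NUMBER, coc, DISTANCE_MM)
    print(f"{name}: focal length {focal:.1f} mm, depth of field {dof / 1000:.2f} m")
```

Under these assumptions, the focal length grows in proportion to the imager width, and the depth of field at the same f-number shrinks by the same factor.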

4K today

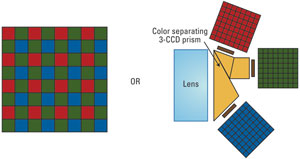

The 4K cameras available today for high-end applications have been based on the design of a large (CMOS) single imager, where a color filter in front of the pixels (which in most cases is a Bayer pattern filter) is used. (See Figure 4.) With the Bayer pattern filter, half of the pixels are used for the green part of the light, and a quarter of the pixels are used for each of the red and blue parts of the light.

Figure 4. Single CMOS imager cameras use a Bayer pattern filter (left) in most cases, as compared to the beam-splitter system used by 3-CCD or 3-CMOS imager cameras. For single-imager cameras to obtain a resolution comparable to that of 3-CCD or 3-CMOS cameras, they would need more, smaller pixels or a larger imager. Neither option is optimal for broadcast cameras for reasons of noise, sensitivity, diffraction effects and compatibility with the 2/3in lens mount standard.
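The Bayer split described above (half of the pixels green, a quarter each red and blue) can be made concrete by counting the samples per color on a single imager. This is a small sketch assuming an RGGB tile layout and a 3840 x 2160 pixel raster; both are illustrative assumptions rather than the specification of any particular camera.

```python
def bayer_channel_counts(width: int, height: int) -> dict:
    """Count red, green and blue photosites for an RGGB Bayer mosaic.

    Assumed tile layout (repeated across the imager):
        R G
        G B
    """
    even_cols, odd_cols = (width + 1) // 2, width // 2
    even_rows, odd_rows = (height + 1) // 2, height // 2
    red = even_rows * even_cols    # even row, even column
    blue = odd_rows * odd_cols     # odd row, odd column
    green = width * height - red - blue
    return {"R": red, "G": green, "B": blue}

counts = bayer_channel_counts(3840, 2160)
total = sum(counts.values())
for channel, count in counts.items():
    print(f"{channel}: {count} photosites ({count / total:.0%} of the imager)")
```

For this raster, the red and blue channels each receive 1920 x 1080 samples, the same count as a single HD imager, whereas a three-imager prism camera samples every color at every pixel position.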

A full-quality 4K RGB signal cannot be achieved with this type of camera system, as it would require a far higher pixel count, and the imager size would need to be even larger. Nevertheless, these camera systems are an acceptable compromise for cinema-style shooting and deliver good performance with the prime lenses and limited-range zoom lenses that are available for the larger imagers.

Can the current single-imager 4K cameras be adapted for live broadcast applications? Can broadcasters compromise by using a camera with three smaller full 4K RGB imagers? Or will another solution deliver a superior result? The only thing that seems certain at this stage is that the future of cameras will be based on CMOS imaging technology. The best imager size and the best color separation system for these cameras still need to be defined. It’s an interesting challenge that will need to be addressed by camera manufacturers and users around the world over the coming years.

—Klaus Weber is Director of Product Marketing Imaging, Grass Valley.
