The Role of Real-Time Processors in Loudness Metering and Correction

As a practical matter, whether to ensure compliance with the CALM Act or to produce deliverables to a specific loudness target, stations and content-producing facilities are increasingly relying on loudness processors to adjust audio content automatically.

There are two broad categories of loudness processors: real-time and file-based. Within each type, loudness processing can be a stand-alone function or incorporated as part of a total processing package.

Real-time loudness processors operate in near real time (with some buffering and processing delay). They meter the loudness of an incoming signal (mono or multichannel) and then typically make continuous audio level adjustments according to the rules or presets they are given.

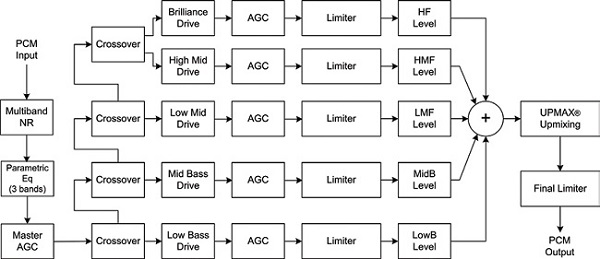

Fig. 1: General signal flow of a multiband real-time loudness processor, Linear Acoustic Aeromax

A file-based loudness processor, on the other hand, analyzes the loudness of audio that has been recorded as a digital file, typically as part of a video file. The analysis covers the entire length of the audio piece. Then, if needed, a single scaling factor (gain or attenuation) based on preset rules is applied to the entire content, so that the audio is delivered at the new (target) loudness level.
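The file-based approach boils down to one calculation: the gain in dB is the target loudness minus the measured loudness, converted to a linear factor and applied to every sample. The sketch below assumes the measurement has already been made and uses a target of -24 LKFS (the ATSC A/85 figure the CALM Act points to); `normalization_gain` and `apply_gain` are hypothetical names for illustration, not any product's API.

```python
import math


def normalization_gain(measured_lkfs: float, target_lkfs: float = -24.0) -> tuple[float, float]:
    """Return (gain in dB, linear factor) that moves the measured loudness to the target."""
    gain_db = target_lkfs - measured_lkfs
    return gain_db, 10.0 ** (gain_db / 20.0)


def apply_gain(samples: list[float], factor: float) -> list[float]:
    # One static factor for the whole file, so the program's dynamics are untouched
    return [s * factor for s in samples]


# A program measured at -19.5 LKFS needs 4.5 dB of attenuation
gain_db, factor = normalization_gain(-19.5)
print(f"{gain_db:+.1f} dB -> x{factor:.3f}")
```

Because the same factor is applied from start to finish, the loud and quiet passages keep their original relationship; only the overall level moves.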

REAL-TIME PROCESSORS

While they can be used to treat loudness on archival material on tape (rather than in a digital format), real-time processors are typically installed at the last stage of the audio signal chain, before an encoder, to catch any loudness problems that would make the program non-compliant.

A real-time processor fixes loudness “by adjusting dynamic range,” said Tim Carroll, Telos Alliance CTO and Linear Acoustic founder.

The processor continuously measures the program loudness of the input audio signal according to the ITU-R BS.1770 standard (at the time of writing, version 3, from August 2012). Automatic gain control (AGC), in other words compression, is then applied to adjust the level to meet the target. Think of this as riding gain with an audio fader.
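For readers who want to see what a BS.1770 meter actually computes, here is a minimal sketch of gated integrated loudness for a single (mono) channel. It assumes 48 kHz audio and uses the standard's K-weighting filter coefficients for that rate, 400 ms measurement blocks with 75 percent overlap, an absolute gate at -70 LKFS and a relative gate 10 LU below the absolute-gated level. Multichannel weighting, true-peak measurement and other sample rates are left out, and the function names are hypothetical.

```python
import math

# ITU-R BS.1770 K-weighting coefficients for fs = 48 kHz (a0 normalised to 1)
PRE_B = (1.53512485958697, -2.69169618940638, 1.19839281085285)  # stage 1: high shelf
PRE_A = (-1.69065929318241, 0.73248077421585)
RLB_B = (1.0, -2.0, 1.0)                                        # stage 2: RLB high-pass
RLB_A = (-1.99004745483398, 0.99007225036621)


def biquad(x, b, a):
    """Direct Form I biquad filter."""
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for s in x:
        out = b[0] * s + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1, y2, y1 = x1, s, y1, out
        y.append(out)
    return y


def block_loudness(z):
    """Loudness in LKFS of a block with K-weighted mean square z."""
    return -0.691 + 10 * math.log10(z)


def integrated_loudness(x, fs=48000):
    """Gated integrated loudness, in LKFS, of one channel sampled at 48 kHz."""
    if fs != 48000:
        raise ValueError("these filter coefficients are only valid at 48 kHz")
    k = biquad(biquad(x, PRE_B, PRE_A), RLB_B, RLB_A)   # K-weighted signal
    block, hop = fs * 4 // 10, fs // 10                 # 400 ms blocks, 75 % overlap
    z = [sum(v * v for v in k[i:i + block]) / block     # mean square per block
         for i in range(0, len(k) - block + 1, hop)]
    # Absolute gate: discard silent blocks and blocks at or below -70 LKFS
    gated = [zj for zj in z if zj > 0 and block_loudness(zj) > -70.0]
    if not gated:
        return float("-inf")
    # Relative gate: 10 LU below the loudness of the absolute-gated blocks
    rel = block_loudness(sum(gated) / len(gated)) - 10.0
    gated = [zj for zj in gated if block_loudness(zj) > rel]
    return block_loudness(sum(gated) / len(gated))
```

As a sanity check, a full-scale 997 Hz sine should read close to -3.01 LKFS, the calibration point the standard gives for a 1 kHz tone on a single front channel. A real-time processor runs a meter like this continuously and feeds the result to its gain control.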

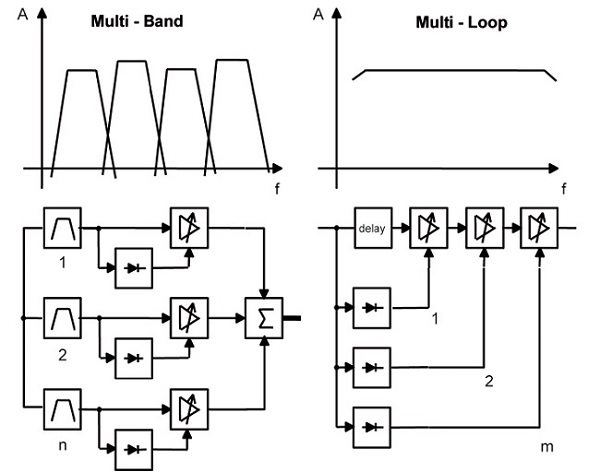

Fig. 2: Comparison of multiband (left) and wideband multiloop (right) block diagrams for real-time loudness processing

“The [processor] is constantly adjusting the level,” said Peter Pörs, managing director, Jünger Audio GmbH. “The fader is never in a fixed state. It’s moving so slowly that you don’t perceive it.”

For content at relatively controlled levels, AGC that acts too fast will be audible; yet if a loud transient occurs, the processor must be able to react quickly to pull it down. This is why processors typically include an output limiting stage.
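The interplay of a slow AGC and a fast output limiter can be sketched as follows. This is a hypothetical illustration, not any vendor's algorithm: it uses a plain mean-square level detector as a stand-in for a BS.1770 meter, a gain that glides toward the target with a multi-second time constant and a cap on how much it may boost, and a peak limiter with instant attack and a short release so the output never exceeds the ceiling. All parameter values are assumptions.

```python
import math


def one_pole(time_s, fs):
    """Coefficient of a one-pole smoother with the given time constant."""
    return math.exp(-1.0 / (time_s * fs))


def agc_with_limiter(x, fs=48000, target_db=-24.0, agc_time_s=3.0,
                     max_boost_db=12.0, ceiling=0.9, lim_release_s=0.05):
    """Slow AGC 'fader' followed by a fast peak limiter (hypothetical parameters)."""
    a_lvl = one_pole(0.4, fs)             # ~400 ms level detector (not BS.1770)
    a_agc = one_pole(agc_time_s, fs)      # very slow gain movement
    a_rel = one_pole(lim_release_s, fs)   # limiter recovery
    max_gain = 10 ** (max_boost_db / 20)
    ms, agc_gain, lim_gain, out = 0.0, 1.0, 1.0, []
    for s in x:
        ms = a_lvl * ms + (1 - a_lvl) * s * s
        level_db = 10 * math.log10(ms + 1e-12)
        wanted = min(max_gain, 10 ** ((target_db - level_db) / 20))
        agc_gain = a_agc * agc_gain + (1 - a_agc) * wanted   # slow fader ride
        y = s * agc_gain
        need = ceiling / abs(y) if abs(y) > ceiling else 1.0
        # Limiter: drop instantly when needed, otherwise recover smoothly
        lim_gain = need if need < lim_gain else a_rel * lim_gain + (1 - a_rel) * need
        out.append(y * lim_gain)
    return out
```

The two stages divide the work: the AGC time constant is long enough that its movement is not heard, and the limiter catches whatever transient gets through before the AGC has had time to react.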

WIDEBAND AND MULTIBAND PROCESSING

Processors differ in how they apply AGC. Some apply it across the entire audio spectrum (wideband). Others take the multiband approach, splitting the full audio spectrum into sections, or bands, and applying AGC to each band individually. Different attack and release times can be set for each band.

According to Carroll, running a processor in straight wideband mode can, in general, make processing adjustments more audible. For example, a loud thump could bring down the level of a whole program, even though only the lower frequencies caused the level to spike. With multiband processing in this scenario, only the lower frequencies would be reduced (Fig. 1).
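Carroll's thump example can be illustrated with a two-band toy. The sketch below assumes a one-pole complementary split at 200 Hz, static gains computed from each band's RMS level, and a hypothetical threshold. The wideband version computes one gain from the whole mix, so the mid-range content is pulled down along with the thump, while the two-band version turns down only the low band. The complementary split sums back exactly when both gains are equal, but its gentle 6 dB/octave slopes let some of the thump leak into the upper band; commercial processors use steeper crossovers and four or five bands.

```python
import math


def split_two_bands(x, fs, fc=200.0):
    """Split into low and high bands that sum back exactly to x."""
    a = math.exp(-2 * math.pi * fc / fs)   # one-pole low-pass coefficient
    low, state = [], 0.0
    for s in x:
        state = (1 - a) * s + a * state
        low.append(state)
    high = [s - l for s, l in zip(x, low)]  # complementary high band
    return low, high


def rms(v):
    return math.sqrt(sum(s * s for s in v) / len(v))


def reduction(level, threshold):
    """Static gain (<= 1) that brings an RMS level down to the threshold."""
    return min(1.0, threshold / level) if level > 0 else 1.0


fs, threshold = 8000, 0.25
n = range(fs // 2)
mid = [0.3 * math.sin(2 * math.pi * 2000 * i / fs) for i in n]   # program content
thump = [0.8 * math.sin(2 * math.pi * 30 * i / fs) for i in n]   # low-frequency thump
mix = [m + t for m, t in zip(mid, thump)]

# Wideband: one gain for the whole spectrum
wide_gain = reduction(rms(mix), threshold)
wide_out = [s * wide_gain for s in mix]

# Two-band: each band gets its own gain, then the bands are summed
low, high = split_two_bands(mix, fs)
low_gain, high_gain = reduction(rms(low), threshold), reduction(rms(high), threshold)
multi_out = [l * low_gain + h * high_gain for l, h in zip(low, high)]

print(f"wideband gain {20 * math.log10(wide_gain):.1f} dB")
print(f"low band {20 * math.log10(low_gain):.1f} dB, "
      f"high band {20 * math.log10(high_gain):.1f} dB")
```

In this toy the upper band is left alone while both the wideband gain and the low-band gain drop by several dB, which is the behavior Carroll describes.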

Four or five bands are generally adequate for multiband loudness processors, according to Carroll, and that is typical.

Another idea behind multiband processing is the way we humans perceive sound. We don’t hear linearly across the audible frequency range. We are more sensitive to mid-range sounds than to higher and lower frequencies, but the difference changes as the audio level changes.

For example, with normal hearing at low audio levels, a 100 Hz tone must be about 15 dB higher in level than a 1,000 Hz tone for the two to be perceived as equally loud. As audio levels increase, the lower frequencies need less of a boost relative to the mid-range to be perceived as equally loud.

Two researchers from Bell Labs, Harvey C. Fletcher and Wilden A. Munson, studied this phenomenon and in the early 1930s published their results with graphs of equal loudness curves across the audio spectrum and at different audio levels. These have come to be known, not surprisingly, as the “Fletcher-Munson curves.”

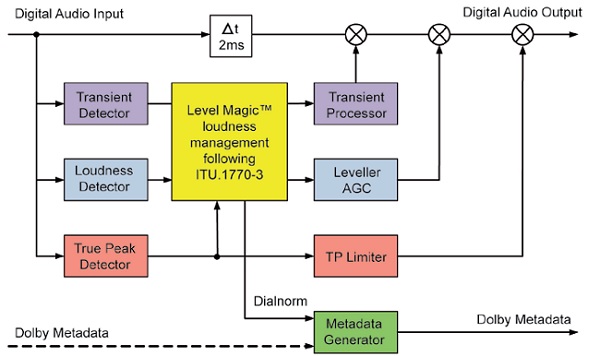

Fig. 3: Block diagram of Jünger Audio Level Magic loudness management

According to Bob Nicholas, director of international business development for Cobalt Digital, that’s why compressing the entire signal changes the perceived levels of the high and low frequencies relative to the mid-range, which changes the character of the sound. This can have a negative effect on intelligibility.

“Multiband is not trying to keep things spectrally flat, but to keep things spectrally balanced,” Carroll said.

Nicholas said that multiband AGC is better suited to a sound source that is a mix of different signals, while wideband is better suited to single-source signals, such as the feed on a channel strip of an audio console.

Taking a different tack, Pörs said that the multiband approach can produce anomalies when the different frequency bands are summed together. “The possible difficulty is that [with] the overlapping zones of the filters, a precise summation of the signals is nearly impossible [and that] leads to coloration,” he said. “That’s why we came to wideband.” (See Fig. 2.)

Wideband with a twist, that is. “We have a different processing design approach,” Pörs said. “We call it multiloop design.” This design incorporates a series of gain controls, with each “fader” controlled separately. (See Fig. 3.)

“The various loops each work over the entire frequency spectrum,” Pörs said. “They work in parallel, each with a different set of attack and release parameters. Each loop develops a control signal which is then summed with the controls from the other loops to produce a single gain control signal applied to one gain control element.”
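Pörs's description of multiloop control can be sketched in code. The assumptions here are ours, not Jünger's: each loop is an envelope follower on the full-band signal with its own attack and release, each produces a gain-reduction request in dB, and the requests are combined as a weighted sum before driving a single gain element. The `Loop` class, thresholds, weights and time constants are all hypothetical.

```python
import math


def coeff(time_s, fs):
    """One-pole smoothing coefficient for a time constant in seconds."""
    return math.exp(-1.0 / (time_s * fs))


class Loop:
    """One full-band control loop with its own attack and release."""

    def __init__(self, attack_s, release_s, fs, threshold_db, weight):
        self.a_att, self.a_rel = coeff(attack_s, fs), coeff(release_s, fs)
        self.threshold_db, self.weight = threshold_db, weight
        self.env = 0.0

    def control_db(self, s):
        level = abs(s)
        a = self.a_att if level > self.env else self.a_rel
        self.env = a * self.env + (1 - a) * level
        env_db = 20 * math.log10(self.env + 1e-12)
        # Gain change (<= 0 dB) requested by this loop
        return self.weight * min(0.0, self.threshold_db - env_db)


def multiloop(x, loops):
    """Sum every loop's control signal and drive one gain element with it."""
    out = []
    for s in x:
        total_db = sum(loop.control_db(s) for loop in loops)
        out.append(s * 10 ** (total_db / 20))
    return out


fs = 48000
loops = [
    Loop(attack_s=2.0, release_s=5.0, fs=fs, threshold_db=-20.0, weight=0.5),    # slow loop
    Loop(attack_s=0.001, release_s=0.1, fs=fs, threshold_db=-10.0, weight=0.5),  # fast loop
]
```

Because every loop sees the whole spectrum and only one gain element touches the audio, there is no band summation and therefore none of the crossover coloration Pörs mentions.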

The algorithms in the processor provide automatic adjustment of the attack and release time based on how the input signal changes over time. “This is called ‘adaptive dynamic range control,’” Pörs said. “By monitoring the waveform of the incoming audio, the system can set relatively long attack times during steady-state signal conditions, but very short attack times when there are impulsive transients.”
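The idea of adaptive attack times can be shown with a simple transient detector. This is a hypothetical rule, not Jünger's algorithm: compare a fast (about 5 ms) envelope with a slow (about 500 ms) one, and when the fast envelope jumps more than 6 dB above the slow one, treat the signal as impulsive and select a short attack time; otherwise keep the long one. The function name and every time constant are assumptions.

```python
import math


def adaptive_attack_times(x, fs, slow_attack=0.5, fast_attack=0.001, jump_db=6.0):
    """Return the attack time (seconds) chosen at each sample (hypothetical rule)."""
    a_fast = math.exp(-1.0 / (0.005 * fs))   # ~5 ms envelope: follows transients
    a_slow = math.exp(-1.0 / (0.5 * fs))     # ~500 ms envelope: steady-state level
    fast_env = slow_env = 1e-6
    chosen = []
    for s in x:
        level = abs(s)
        fast_env = a_fast * fast_env + (1 - a_fast) * level
        slow_env = a_slow * slow_env + (1 - a_slow) * level
        jump = 20 * math.log10((fast_env + 1e-12) / (slow_env + 1e-12))
        # Impulsive change: react quickly; steady state: move slowly
        chosen.append(fast_attack if jump > jump_db else slow_attack)
    return chosen
```

A gain stage would then smooth its gain changes with whichever attack time is selected at that moment.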

In addition, the Jünger multiloop design allows for a very short time delay to be put in the audio signal path. “This lets the gain-changing elements ‘look ahead’ and determine the correction needed and to apply it to the delayed signal just in time to control even the fastest transients,” Pörs said.
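Look-ahead can be sketched as a peak limiter that computes its gain from the undelayed input but applies it to a copy delayed by a couple of milliseconds, so gain reduction is already in place when a spike reaches the output. The 2 ms delay, ceiling and release time are assumptions, and the instant gain drop is a simplification; practical designs usually ramp the gain down across the look-ahead window to avoid distortion.

```python
import math
from collections import deque


def lookahead_limiter(x, fs, lookahead_s=0.002, ceiling=0.9, release_s=0.05):
    """Peak limiter: gain from the undelayed input, applied to a delayed copy."""
    d = max(1, int(lookahead_s * fs))        # look-ahead in samples
    a_rel = math.exp(-1.0 / (release_s * fs))
    delay = deque([0.0] * d)                 # audio path delay line
    window = deque([0.0] * (d + 1))          # |x| of every sample still in flight
    gain, out = 1.0, []
    for s in list(x) + [0.0] * d:            # extra zeros flush the delay line
        window.append(abs(s))
        window.popleft()
        peak = max(window)
        target = min(1.0, ceiling / peak) if peak > 0 else 1.0
        # Drop instantly to the target; recover smoothly otherwise
        gain = target if target < gain else a_rel * gain + (1 - a_rel) * target
        delay.append(s)
        out.append(delay.popleft() * gain)   # sample from d steps ago
    return out[d:]
```

The price of look-ahead is the added delay in the audio path, which is why it is kept very short.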

No matter the design, a processor must be set up and used correctly. More on this later.

Mary C. Gruszka is a systems design engineer and consultant based in New York. She can be reached via TV Technology.
