Trusting Data Integrity

How many times have you written a file to a directory only to find out, sometimes much later, it was corrupted, lost or improperly archived? None of us are likely immune to this phenomenon and few are aware of how this might be prevented.

The accuracy, quality and consistency of data, regardless of where it is stored, are of immense importance; yet data integrity is often taken for granted by most users. Irrespective of where your data is stored (whether in a warehouse, data mart or some other construct, including the cloud), guaranteeing data integrity may be the most vital parameter in the entire compute chain.

Data integrity describes a state, a process or a function. It is often used as a proxy for what is sometimes referred to as “data quality.” Data integrity is routinely equated with “databases,” as in “ensuring database data integrity,” but it can mean a lot more, especially when referring to the many actions that can occur when files (data) are manipulated through various workflows and processes.

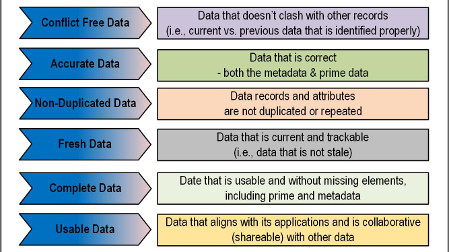

Fig. 1: Trusted values for data integrity.

Data integrity is warranted whenever the original data is modified, as in the editing, copying or transferring processes. Another concern is when the data is backed up or archived. The methods practiced by software and hardware storage solution providers need to be “transparently trusted” so that errors or losses of data are prevented. When or if data errors are detected, their handling should be essentially unnoticed (i.e., “transparent”) and should never impact the results when that data is used for computational, display or delivery purposes.
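One common way to check integrity across a copy or transfer is to compare a cryptographic digest of the source against a digest of the destination. The following is a minimal sketch of that idea, not any particular vendor's method; the function names `sha256_of` and `verified_copy` are hypothetical and chosen only for illustration.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verified_copy(source: Path, destination: Path) -> str:
    """Copy source to destination, then confirm both digests match."""
    expected = sha256_of(source)
    shutil.copyfile(source, destination)
    actual = sha256_of(destination)
    if actual != expected:
        raise IOError(f"integrity check failed for {destination}")
    return expected  # keep this digest alongside the file for later re-checks
```

Storing the returned digest with the file lets the same check be repeated later, for example after an archive or restore. Note that a read-back immediately after a write may be served from an operating system cache rather than from the medium, so this sketch is best read as a transfer check, not a guarantee about the storage device.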

TRUSTED DATA

Data integrity implies that the data is trusted; that is, the data properties are trustworthy from a technical perspective (Fig. 1). Data must be trusted by the consumer of the data, using reliable reports on the data’s state or status, as well as by the applications that will use the data.

Trusted data includes such attributes as “complete data.” This is achieved when the data integration technologies and techniques produce a consolidated data structure. The “enterprise data warehouse” (EDW), a term applied well ahead of today’s “cloud services” world, is where organizations could place their data (near term, short term or deep/long term) as a secondary service to on-premises (“on-prem”) storage. Respected and trusted EDWs should be competent enough to provide their customers with a full 360-degree view of users’ data, with a historic context of all the real-time data activities. Cloud storage services have effectively replaced the legendary EDWs, with functions and expectations that are essentially the same.

STALE OR CURRENT

Trusted data should be “current data.” Users should be able to query the data system, via a report, to answer questions such as “how old is the data?” Trustworthy data is “fresh,” whereas “stale data” is not. Stale data can be contaminated by successive read/write processes (where data is copied or relocated during defragmentation) or by “bit rot,” the slow deterioration in performance or integrity due to issues with the storage medium itself.
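A “how old is the data” report can be approximated from file modification times. The sketch below is illustrative only: the function name `data_age_report` and the `max_age_days` threshold are assumptions made for this example, and a production system would more likely track age in its own catalog or metadata store than rely on filesystem timestamps.

```python
import time
from pathlib import Path
from typing import List, Optional, Tuple


def data_age_report(root: Path, max_age_days: float,
                    now: Optional[float] = None) -> List[Tuple[str, float, bool]]:
    """Return (relative path, age in days, is_stale) for each file under root."""
    now = time.time() if now is None else now
    rows = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Age is measured from the last modification time.
            age_days = (now - path.stat().st_mtime) / 86400.0
            rows.append((str(path.relative_to(root)), age_days,
                         age_days > max_age_days))
    return rows
```

Calling `data_age_report(Path("/some/archive"), max_age_days=30)` (the path is a placeholder) would list every file and flag those not modified in the last 30 days. Age alone does not detect bit rot; that requires re-reading the data and comparing it against a stored checksum.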

Existing data may be corrupted when there is considerable activity (multiple seeks, reads and/or rewrites) on the actual disk platter, or across the physical tape medium due to successive passes and/or writes. On optical drives, if the physical area of the disc where the current data resides has been untouched (unwritten to) for a considerable time and the laser then writes to an adjacent area (or surrounding tracks), there are opportunities to blur or distort the existing pits, making your existing data unrecognizable or questionable.

CONSISTENT AND CLEAN DATA

Another data trust factor deals with supporting the other (i.e., meta) data associated with the main “core” data. Properly maintained metadata management and master data management practices help to ensure that the data you need can be searched, found and retrieved on a consistent and secure basis. Good metadata management includes documenting the data’s origins and meanings, in a reliable and coherent methodology.

Employing data quality techniques is critical to obtaining “clean data.” Typically, this is obtained by following standardizations in data management, using data verification and matching techniques, and using data deduplication. To maintain operational excellence and to make quality decisions, your data must be clean.

Since data activities often include the aggregation of one data set with other data sets as data traverses workflows, or as data travels across multiple IT systems, it is important to know and trust that the data is technically sound and consistent across the enterprise.

COMPLIANCE AND COLLABORATION

Ensuring you have “regulation-compliant data” is another element of a trusted data scenario. Regulations associated with data compliance come from sources which may be external to your organization, as in federal legislation or your partner connections. They may also come from internal policies such as your own internal IT data architectures, quality assurance, security and privacy. Businesses need to trust that their data has been accessed and distributed in accordance with both external and internal guidelines.

Data “collaboration” (also referred to as data “sharing”) is essential to business functionality. Collaboration helps to ensure a strong alignment between data management practices and business management goals. When successful, data collaboration improves trust amongst inter-departmental activities and functions.

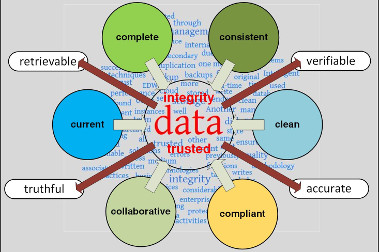

Fig. 2: Data quality monitoring, attributes to data integrity.

SOURCE TO DATA INTEGRITY

Data integrity needs to be maximized from the data source to the application and through to the storage medium (disk, tape, optical or cloud). Data integrity (and protection) is made possible by applying multiple mechanisms during the write processes, modification processes and through the management of duplicated data throughout the system (Fig. 2).

One methodology is to ensure that when original data (stored, for example, on a disk drive) is modified, the results are always written to unused blocks on that disk. This practice assures that the old (previously unmodified) data is unaffected on the disk, even if the new data written to the other location is corrupt. Through tracking history, if one data write is found to be in error, you could fall back to a previously written block and recover either the same (or a previous) version of the disk.
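The write-to-unused-blocks approach described above is often called copy-on-write. The following toy model sketches the idea in memory; the class name `CopyOnWriteStore` and its methods are hypothetical, and real file systems implement this at the block-allocation level with far more bookkeeping.

```python
from typing import Dict, List


class CopyOnWriteStore:
    """Toy block store: every modification lands in a fresh block."""

    def __init__(self) -> None:
        self._blocks: List[bytes] = []            # append-only pool of blocks
        self._history: Dict[str, List[int]] = {}  # name -> block indices, oldest first

    def write(self, name: str, data: bytes) -> int:
        """Store data in an unused block and record it as the newest version."""
        self._blocks.append(data)
        index = len(self._blocks) - 1
        self._history.setdefault(name, []).append(index)
        return index

    def read(self, name: str, versions_back: int = 0) -> bytes:
        """Read the newest version, or an earlier one via versions_back."""
        indices = self._history[name]
        return self._blocks[indices[-1 - versions_back]]

    def roll_back(self, name: str) -> bytes:
        """Drop the newest version (e.g. found corrupt) and return the prior one."""
        indices = self._history[name]
        if len(indices) < 2:
            raise ValueError("no earlier version to fall back to")
        indices.pop()
        return self._blocks[indices[-1]]
```

Because earlier blocks are never overwritten, a bad write can be abandoned simply by pointing back at the previous block, which is the fallback behavior the paragraph describes.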

Another level of data insurance is the “snapshot” (from the photography term): the capture (recording) of the state of a system at a particular point in time. Besides protection, this read-only snapshot of data can also be used to avoid downtime. In high-availability systems, a data backup may be performed directly from the snapshot. This method lets applications continue writing to the main data while the duplicate snapshot becomes the data that is backed up to another storage resource.
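The relationship between live data, a read-only point-in-time capture and a backup taken from that capture can be sketched with a small in-memory model. The names `Volume` and `back_up` are hypothetical; this version simply copies a dictionary, whereas real storage systems typically avoid copying the data itself.

```python
from types import MappingProxyType
from typing import Dict, Mapping


class Volume:
    """Toy volume holding named files in memory."""

    def __init__(self) -> None:
        self._files: Dict[str, bytes] = {}

    def write(self, name: str, data: bytes) -> None:
        self._files[name] = data

    def read(self, name: str) -> bytes:
        return self._files[name]

    def snapshot(self) -> Mapping[str, bytes]:
        """Freeze the current state as a read-only, point-in-time view."""
        return MappingProxyType(dict(self._files))


def back_up(frozen: Mapping[str, bytes], target: Dict[str, bytes]) -> None:
    """Copy the frozen state to a backup target; the live volume is untouched."""
    target.clear()
    target.update(frozen)
```

Writes made to the volume after `snapshot()` is called do not appear in the frozen view, so the backup reflects one consistent moment even while applications keep writing.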

There are many secondary (provided by the storage vendor) and third-party (non-storage vendor) software solutions that can manage both snapshots and backups in real time.

INTELLIGENT BACKUP

As expected, more organizations are now considering modern intelligent backup practices to protect their data. This means more storage is consumed and that more data system management will be necessary. Intelligent backup solutions may utilize a practice called “deduplication,” which finds all the instances of any data set, establishes pointers to where all those instances occurred relative to the data backup, and then narrows the amount of data down to only a single set of data. Deduplication not only reduces storage costs but also increases the performance of data backups and restores.
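The pointer-based approach to deduplication can be illustrated with a small content-addressed store: each chunk of data is kept once, keyed by its hash, and every backup is recorded as an ordered list of pointers to those chunks. This is a sketch under simplifying assumptions (fixed-size chunks, everything in memory); the class name `DedupStore` is hypothetical, and commercial products use more sophisticated chunking and indexing.

```python
import hashlib
from typing import Dict, List


class DedupStore:
    """Toy deduplicating store using fixed-size chunks."""

    def __init__(self, chunk_size: int = 4096) -> None:
        self.chunk_size = chunk_size
        self.chunks: Dict[str, bytes] = {}          # digest -> the single stored copy
        self.manifests: Dict[str, List[str]] = {}   # backup name -> ordered pointers

    def put(self, name: str, data: bytes) -> None:
        """Split data into chunks, storing each distinct chunk only once."""
        pointers = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)   # no-op if already stored
            pointers.append(digest)
        self.manifests[name] = pointers

    def get(self, name: str) -> bytes:
        """Rebuild a backup by following its pointers."""
        return b"".join(self.chunks[d] for d in self.manifests[name])

    def stored_bytes(self) -> int:
        """Total bytes actually held, after deduplication."""
        return sum(len(c) for c in self.chunks.values())
```

Two backups that share most of their content consume little more space than one, because the shared chunks are stored a single time and merely referenced twice.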

Data “dedupe” (its shortened name) can be used on main data (e.g., in databases or on transactional data sets) or for backups or archives. However, due to the already highly compressed nature of video, along with the unstructured nature of motion imaging data, deduplication does not improve storage performance in the same way it would for structured data.

Data management technologies, monitoring, and practices continue to evolve. How data is managed on a solid-state storage medium (SSD) versus a magnetic spinning disk or optical disc can vary. Users looking into new archive platforms or backup solutions should absolutely get the full story on how the vendor handles data management, data integrity and resiliency—before making a selection that they will live with for years to come.

Karl Paulsen is CTO at Diversified (www.diversifiedus.com) and a SMPTE Fellow. Read more about this and other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.