TV sync and timing

Because television started as an analog medium, timing has always been a prerequisite for combining or switching pictures. The cameras that RCA deployed at the 1939 World's Fair scanned in perfect synchronization with the beam scanning the CRTs in the displays across the river. Cameras, switchers and film chains had sync inputs that effectively guaranteed that the composite program could be delivered without interrupting the timing of the beam in the consumer display.

It is quite remarkable that, 72 years later, nearly the same sync system is still in place. While color burst has been added to make color black a reference with much higher precision, fundamentally the same horizontal and vertical sync used in early commercial television exists today. As systems have grown more complex and the timing needs of digital video and digital audio have evolved, we have found ways to keep the legacy system in place while accommodating multiple frame rates, progressive scan, AES and compressed audio systems, and video compression systems.

What's different is that in some ways we have less precision today. It is often said that buffers change everything, and digital systems don't require the kind of rigid precision that the color subcarrier in the NTSC (or PAL) system requires. Think about that for a moment; we used to time signals to subnanosecond precision so that errors in color phase would not accumulate. With digital switchers, timing to plus or minus a full line, or more, is sufficient. That is because of buffers built into the switcher, buffers large enough in some cases to allow nonsynchronous signals to be handled freely. This does not remove the need to design systems carefully to avoid the issues that poor timing creates. Routing switchers don't have buffers to retime the output when inputs are mistimed. When switching (on line 10 in HD systems normally), a router cannot automatically correct for a second signal that is lines away from correct timing, and the resulting disturbance can cause processing errors in downstream devices.
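To put rough numbers on that contrast, the short Python sketch below compares the time represented by one degree of NTSC subcarrier phase with the duration of one line in a 1080-line system running at about 29.97 frames per second. The one-degree figure is only an illustrative yardstick, not a tolerance taken from any standard, and the function names are invented for this example.

```python
from fractions import Fraction

# NTSC color subcarrier: 315/88 MHz (about 3.579545 MHz).
FSC_HZ = Fraction(315_000_000, 88)

# 1080-line HD at the NTSC-derived frame rate: 1125 total lines per frame.
HD_TOTAL_LINES = 1125
HD_FRAME_RATE = Fraction(30_000, 1_001)


def ns_per_degree_of_subcarrier():
    """Time, in nanoseconds, spanned by one degree of subcarrier phase."""
    period_s = 1 / FSC_HZ
    return float(period_s / 360 * 1_000_000_000)


def hd_line_duration_us():
    """Duration, in microseconds, of one total line of the HD raster."""
    line_rate_hz = HD_TOTAL_LINES * HD_FRAME_RATE
    return float(1 / line_rate_hz * 1_000_000)


if __name__ == "__main__":
    print(f"1 degree of subcarrier: {ns_per_degree_of_subcarrier():.3f} ns")
    print(f"1 HD line:              {hd_line_duration_us():.3f} us")
```

Under these assumptions one degree of subcarrier is a little under a nanosecond while one HD line is just under 30 microseconds, a difference of roughly 38,000 to one.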

In analog systems, there was no requirement to “time” audio to video. Unless you put delay into the video path, audio processing had so little latency that it was hard to make lip sync problems happen. As soon as you digitize audio and add audio processing (such as embedders, sample rate converters, or any device with local buffers or storage), the need to manage audio sync becomes just as critical as video timing. There are, however, some inconvenient facts about syncing audio. Our need to use 59.94Hz as the vertical frequency gives rise to a disparity in syncing 48kHz audio tracks with video: a video frame does not contain a whole number of audio samples, so there is a five-frame cadence to the matching of audio access units and video frames. In addition, there is a definite need to lock both AES reference and video reference to the same clock. Most modern sync generators will do that, but the need for AES reference is sometimes forgotten when system designs are drafted in-house without careful thought (and perhaps with a lack of experience).
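The five-frame cadence falls straight out of the arithmetic. The sketch below, in Python, assumes 48kHz audio and the NTSC-derived frame rate of 30000/1001 (about 29.97) frames per second. The function names are invented for illustration, and the way samples are dealt out within the five frames is simply what truncation produces here, not the ordering prescribed by any particular standard.

```python
from fractions import Fraction

AUDIO_RATE = 48_000                     # audio samples per second
FRAME_RATE = Fraction(30_000, 1_001)    # video frames per second (about 29.97)


def samples_per_frame():
    """Exact (fractional) number of audio samples in one video frame."""
    return Fraction(AUDIO_RATE) / FRAME_RATE


def cadence_length():
    """Frames needed before audio and video line up on a whole sample."""
    return samples_per_frame().denominator


def frame_sample_counts(n_frames):
    """Whole-sample counts per frame, found by truncating running totals."""
    spf = samples_per_frame()
    boundaries = [int(spf * i) for i in range(n_frames + 1)]
    return [later - earlier for earlier, later in zip(boundaries, boundaries[1:])]


if __name__ == "__main__":
    print(samples_per_frame())        # 8008/5, i.e. 1601.6 samples per frame
    print(cadence_length())           # 5
    print(frame_sample_counts(5))     # [1601, 1602, 1601, 1602, 1602]
```

Each frame carries either 1601 or 1602 samples, and only after five frames (8008 samples) do the audio and video boundaries coincide again.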

With all of the emphasis on audio sync these days, it is also wise to be careful about the reference signals fed to audio and video encoders. Equal care should be taken in setting offsets in Dolby and MPEG-2 encoders. A good practice is to lock the master sync generator to GPS, which not only gives a stable and accurate frequency reference but also allows time code to be derived with higher precision. Time can be referenced in some software-based products to NTP time servers, but GPS is preferred if it is available.

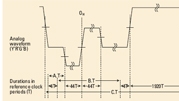

With HD systems there is often a need for trilevel sync. (See Figure 1.) TLS is defined in SMPTE 240M (1035-line HD), SMPTE 274M (1080-line HD) and SMPTE 296M (720-line HD). It is used by a relatively small number of devices, but often is critical in HD systems.

At one time it was thought that TLS would provide a replacement for color black in developing HDTV systems. That has not turned out to be true, in part because digital systems seem to work just fine with existing reference signals. Also some manufacturers, to remain nameless here, worked against having new reference signals for many years, successfully I might add.

A new reference signal

There is now work (well advanced) on a new reference signal that would provide rich information as well as a timing reference for all signals without regard to frame rate or sampling structure. The premise is to use GPS as a frequency standard and to define an “epoch” at which all signals are deemed to “start.” All HD, SD, audio, MPEG and other reference signals start at that epoch, and by using math that is easily derived, one can calculate the exact state of each signal at any future time. For instance, using the 5MHz GPS reference, one could derive the NTSC color subcarrier frequency by using the following formula:

fsc = 63/88 × 5MHz

or

fsc = (3² × 7)/(2³ × 11) × 5MHz

If the subcarrier starts positive at the epoch, you can calculate the state of an NTSC signal accurately by knowing the current time, which is the reason GPS is used.
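As a rough illustration of that calculation, the Python sketch below derives the subcarrier from the 5MHz reference using the 63/88 ratio given above, then works out the subcarrier phase and the frame count at a given time after the epoch. It assumes the subcarrier is at zero phase and a frame begins exactly at the epoch. The line and frame relationships (line rate = 2/455 of subcarrier, 525 lines per frame) are the standard NTSC ones, and the function names are made up for this example.

```python
from fractions import Fraction

REFERENCE_HZ = 5_000_000
FSC_HZ = Fraction(63, 88) * REFERENCE_HZ    # about 3,579,545.45 Hz
LINE_HZ = FSC_HZ * Fraction(2, 455)         # about 15,734.27 Hz
FRAME_HZ = LINE_HZ / 525                    # exactly 30000/1001 Hz


def subcarrier_phase_degrees(seconds_since_epoch):
    """Subcarrier phase (0 to 360 degrees), assuming zero phase at the epoch."""
    cycles = FSC_HZ * Fraction(seconds_since_epoch)
    return float((cycles % 1) * 360)


def frames_since_epoch(seconds_since_epoch):
    """Count of complete frames, assuming a frame starts at the epoch."""
    return int(FRAME_HZ * Fraction(seconds_since_epoch))


if __name__ == "__main__":
    print(float(FSC_HZ))                  # subcarrier frequency in Hz
    print(FRAME_HZ)                       # 30000/1001
    print(subcarrier_phase_degrees(11))   # whole number of cycles in 11 s
    print(frames_since_epoch(1001))       # 30000
```

Exact fractions are used rather than floating point so that the result does not drift as the elapsed time grows, which matters when the epoch is years in the past.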

Though not obvious, one need not distribute an actual 5MHz reference to make this a workable scheme. You can use a network to send the time accurately as a time stamp, much as MPEG clocks are sent as time stamps. If the latency of the network is known, or can be discovered, any reference signal could be generated by using a digital phase-locked loop to establish the 5MHz reference from the time stamps and then using simple arithmetic to get the current signal state. Though not yet in use, this approach could lead to precise references for all manner of devices that generate or use video, with only network cabling required. As a bonus, accurate time signals are generated as well, which makes the use of existing time code seem a bit anachronistic.
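A toy simulation can show the flavor of this. The Python sketch below assumes one time stamp per second, a known and fixed network latency, and a simple proportional-integral loop standing in for the digital phase-locked loop. The gains, the 50 ppm oscillator error, the 2 ms latency and all names are illustrative assumptions, not values from any standard or product.

```python
REFERENCE_HZ = 5_000_000  # the 5MHz reference discussed in the text


def simulate_servo(freq_error=50e-6, latency=0.002, steps=200, kp=0.5, ki=0.1):
    """Steer a drifting local clock toward time stamps received once a second.

    Returns the time error (sender's time minus local time, in seconds)
    measured as each stamp arrives.
    """
    local = 0.0       # receiver's running estimate of time since the epoch
    integral = 0.0    # integral term; settles on the oscillator's rate error
    errors = []
    for k in range(steps):
        stamp = float(k)                    # time stamp carried in the message
        error = (stamp + latency) - local   # add the known latency back in
        errors.append(error)
        integral += ki * error
        correction = kp * error + integral  # fractional rate adjustment
        # Run the imperfect local oscillator, as corrected, until the next stamp.
        local += (1.0 + freq_error) * (1.0 + correction)
    return errors


def reference_ticks(seconds_since_epoch):
    """Whole cycles of the 5MHz reference elapsed since the epoch."""
    return int(seconds_since_epoch * REFERENCE_HZ)


if __name__ == "__main__":
    errs = simulate_servo()
    print(f"first error: {errs[0]:.6f} s, last error: {errs[-1]:.3e} s")
    print(reference_ticks(1.0))
```

In this idealized model the error starts at the uncorrected latency and decays toward zero as the loop learns the oscillator's rate error. A real network adds jitter and asymmetric delay, which the sketch ignores.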

Time sponges

Lastly, our industry would not be as simple as it is without “time sponges.” Frame synchronizers, which used to include as a class “time base correctors,” allow free running sources to be retimed into any system. Today they offer a multitude of other features like embedding audio, shuffling tracks, down- and crossconversion, and proc amp-like controls. They seem to be a sort of Swiss Army knife for television content. When dealing with satellite and terrestrial feeds, there is no better tool. They solve the timing problem and allow resyncing audio in many cases. I suspect we will see these capabilities built into many more devices over time. Much of the capability is simple today with software-defined devices.

John Luff is a broadcast technology consultant.

Send questions and comments to: john.luff@penton.com
