Uncovering the Long-Term Cloud Archive Equation

Deciding where to keep an organization’s media-centric assets for the long run (i.e., original content, EDLs, finished masters, copies, versions, releases, etc.) is reshaping the ways of storing or archiving data. The continued popularity of cloud-based solutions for ingest/content collection, playout from the cloud and processing in a virtual environment leads many to rethink “storage in the cloud.”

Yet cloud concerns still leave the door open to storage alternatives, tier-based migration, automated management and more.

What are the possible alternatives: Backup or archive? On-prem or in a co-lo? Private, hybrid or public cloud? Knowing the differences could change how you approach archive management, regardless of the size, location or types of data libraries.

BACK IT UP OR ARCHIVE IT

Let’s look first at the differences between backup and archive.

A backup is a duplicate copy of data used to restore lost or corrupted data in the event of unexpected damage or catastrophic loss. By definition, all original data is retained even after a backup is created; original data is seldom deleted. Most people back up just in case something happens to the original version, while for others it is a routine process mandated either by policy or because they’ve previously suffered through a data disaster and pledge never to live through that again.

On a small scale, for a local workstation or laptop, practice suggests a nightly copy of the computer’s data be created to another storage medium, e.g., a NAS or portable 4-8 TB drive. Travel makes this difficult, so alternative online solutions prevail.

Businesses routinely back up their file servers (as unstructured data) and their databases (as structured data) as a precaution against a short-term issue with data on a local drive being corrupted. “Snapshots” or “images” of an entire drive (OS, applications and data) are often suggested by administrators, software vendors, and portable hard drive manufacturers.

Incremental backups, which copy only data that is new or has changed since the last backup, are made because of the larger storage volumes and the time a full backup requires.
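The incremental idea can be sketched in a few lines of Python. This is a minimal illustration only, assuming file modification times are a reliable indicator of change; the function name `incremental_backup` and its parameters are hypothetical, and commercial backup products typically track changes in more robust ways.

```python
import os
import shutil


def incremental_backup(source_dir, backup_dir, last_backup_time):
    """Copy only files modified after last_backup_time (seconds since epoch).

    Returns the sorted list of relative paths that were copied.
    """
    copied = []
    for root, _dirs, files in os.walk(source_dir):
        for name in files:
            src = os.path.join(root, name)
            # Skip files untouched since the previous backup run.
            if os.path.getmtime(src) <= last_backup_time:
                continue
            rel = os.path.relpath(src, source_dir)
            dst = os.path.join(backup_dir, rel)
            os.makedirs(os.path.dirname(dst), exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves file timestamps
            copied.append(rel)
    return sorted(copied)
```

A full backup is the same walk without the modification-time check, which is why it takes longer and consumes more storage.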

STORAGE AS A SERVICE

Archived data could be placed on a local NAS, transportable disk drive, an on-prem protected storage array partition, or linear data tape. Since an archive is about “putting the data on a shelf” (so to speak), the choices vary based on need.

Cloud archiving is about “storage as a service,” and is intended for long-term retention and preservation of data assets. Archives are where the data isn’t easily accessed and remains for a long uninterrupted time.

Archiving used to mean pushing data to a digital linear tape (DLT) drive and shipping those tapes to an “iron mountain-like” storage vault. In this model, recovering any needed data was a lengthy process involving retrieving the information from a vault, copying it to another tape, putting it onto a truck and returning the tape to the mothership where it was then copied back onto local storage, indexed against a database (MAM), and then made available on a NAS or SAN.

This method involves risks, including loss of or damage to tapes in transport, tapes that went bad, or data that was corrupted when written to the tape in the first place. Obsolescence of either the tape media or the actual drives meant that every few years a refresh of the data tapes was required, adding more risk of data corruption or other unknowns.

As technology moved onward, robotic tape libraries pushed the processes to creating two (tape) copies: one for the on-prem applications and one to place safely in a vault under a mountain somewhere. While this reduced some risks by placing duplicate copies in storage at diverse locations, it didn’t eliminate the “refresh cycle” and meant additional handling (shipping back tapes in the vault for updating). Refresh always added costs: tape library management, refresh cycle transport costs, tape updates including drives and physical media, plus the labor to perform those migrations and updates.

STORAGE OVERLOAD

Images keep getting larger. Formats above HD are now common and quality is improving, pushing “native” format editing (true 4K and 5K) upward. Add dozens to hundreds more releases per program, and storage archive equations are kept in continual check. As files get larger, the amount of physical media needed to store a master, plus protect copies and one or more archive copies of every file, is causing “storage overload.” Decisions on what to archive are balanced against costs and the unpredictable reality that the content may never need to be accessed again.

High-capacity, on-prem storage vaults can only grow so large; a hardware refresh on thousands of hard drives every couple of years can be overwhelming from a cost and labor perspective. Object-based storage is solving some of those space or protection issues, but having all your organization’s prime asset “eggs” in one basket is risky and not very smart business. That opens the door to cloud-based archiving.

MANY SHAPES AND SIZES

Fee-based products such as iCloud, Carbonite, Dropbox and such are good for some. These products, while cloud based, have varying schemes and work well for many users or businesses. Private iPhone users get Apple iCloud almost cost-free but with limited storage sizes. Other users prefer interfaces specifically for a drive or computer device with an unlimited storage or file count.

So why pick one service over another? Is one a better long-term solution than another? Do we really want an archive or a readily accessible “copy” of the data in case of an HDD crash?

One common denominator to most commercial “backup/archive” services is that they keep your data in “the cloud.” Data is generally accessible from any location with an internet connection and is replicated in at least three locations. However, getting your data back (from a less costly archive) has a number of cost- and non-cost-based perspectives. Recovering a few files or photos is relatively straightforward, but getting “gigabytes” of files back is another question. So beware of what you sign up for and know what you’re paying for and why.
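One non-cost factor is simply the time needed to pull data back over a network link. The sketch below is rough arithmetic only: the function name `transfer_hours` is hypothetical, and the default 80 percent link efficiency is an assumed allowance for protocol overhead, not a measured figure. It also ignores any delay the provider adds before a restore begins.

```python
def transfer_hours(size_gb, link_mbps, efficiency=0.8):
    """Estimate hours needed to move size_gb over a link_mbps connection.

    efficiency is an assumed fraction of the nominal link rate that is
    actually usable for payload data.
    """
    bits = size_gb * 8 * 1000**3                      # decimal gigabytes to bits
    usable_bps = link_mbps * 1000**2 * efficiency     # usable bits per second
    return bits / usable_bps / 3600


# 500 GB over a 100 Mbps link at the assumed 80% efficiency: about 13.9 hours
print(round(transfer_hours(500, 100), 1))
```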

Beyond these common points is where the divisions of capabilities, accessibility, cost, serviceability, and reliability become key production indicators which in turn drive differing uses or applications.

ACCESS FROM THE CLOUD

The cost of cloud-stored data is directly related to the accessibility and retrieval of that data.

If you rarely need the data and can afford to wait a dozen hours or more for the recovery, then choose a “deep-storage” or “cold storage” solution. If you simply need more physical storage and intend regular daily or weekly access, then select a “near term” (probably not an archive) storage solution. Options include on-prem (limited storage, rapid accessibility), off-prem in a co-located (co-lo) storage environment, referred to as a “private cloud,” or a “public” (or commercial) cloud provider such as Google, Azure, AWS, IBM or others.

Cloud services continue to grow in usage and popularity, yet there remains a degree of confusion as to “which kind of cloud service” to deploy and for which “kinds of assets.” Many users prefer regular access to their “archived” material. This is the wrong approach for an archive, and the cost difference between deep or cold storage and a short-term environment (“a temporary storage bucket”) that is easily accessible can be as much as 4:1.
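That rule of thumb can be written down as a small decision helper. This is a sketch under stated assumptions: the function name `suggest_storage_class` is hypothetical, the 12-hour wait comes from the “dozen hours” mentioned above, and treating four or more accesses per month as “regular weekly access” is an illustrative threshold, not a vendor definition.

```python
def suggest_storage_class(accesses_per_month, max_wait_hours):
    """Suggest "cold" or "near-term" storage from expected access patterns."""
    WEEKLY_ACCESS = 4        # assumed: about once a week or more is "regular"
    COLD_WAIT_HOURS = 12     # "a dozen hours or more" for recovery

    # Regular access rules out an archive tier regardless of patience.
    if accesses_per_month >= WEEKLY_ACCESS:
        return "near-term"
    # Rare access plus tolerance for a long recovery suits cold storage.
    if max_wait_hours >= COLD_WAIT_HOURS:
        return "cold"
    # Rare access but no tolerance for delay still needs near-term storage.
    return "near-term"


print(suggest_storage_class(accesses_per_month=0.1, max_wait_hours=24))  # cold
print(suggest_storage_class(accesses_per_month=30, max_wait_hours=24))   # near-term
```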

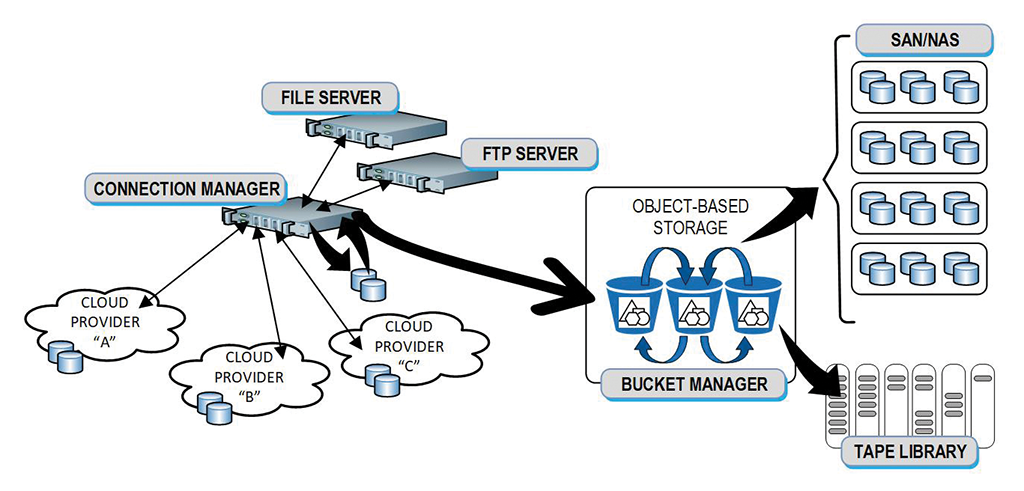

Fig. 1 shows a “hybrid” managed solution with local cache, multiple cloud storage providers, and local/on-prem primary archive serviced by an object-based storage “bucket” manager. The concept allows migration, protection, and even retention of existing storage subsystems.

CHOICES AND DECISIONS

Picking cloud service providers for your archive is no easy decision; comparing services and costs can be like selecting a gas or electricity provider. Plans change, sometimes often. Signing onto a deep archive becomes a long-term commitment, due primarily to the cost of retrieving the data, even though the initial “upload” costs are much lower. If your workflows demand continual data migration, don’t pick a “deep” or “cold-storage” plan; look at another near-line solution. Be wary of long-term multi-year contracts: cloud vendors are very competitive, offering advantages that can adjust annually.

The amount of data you store will continually grow. Be selective about the types of data stored and the duration you expect to keep that data, and choose wisely as to “what” is really needed. Your organization’s policies may dictate “store everything,” so know what the legal implications are, if any.

Carefully look at the total cost of ownership (TCO) of the platform, then weigh the long-term vs. short-term model appropriately.
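A simple way to weigh the two models is to add storage and retrieval charges over the period you expect to hold the data. The sketch below assumes a flat per-terabyte model; the function `total_cost` and every rate in it are hypothetical placeholders in arbitrary cost units, not any provider’s pricing. Real plans often add request fees, minimum retention periods and egress charges.

```python
def total_cost(tb_stored, months, storage_per_tb_month,
               tb_retrieved_per_month, retrieval_per_tb):
    """Storage plus retrieval cost over a holding period (flat-rate model)."""
    storage = tb_stored * months * storage_per_tb_month
    retrieval = tb_retrieved_per_month * months * retrieval_per_tb
    return storage + retrieval


# Hypothetical rates: cold storage is cheap to hold but costly to retrieve.
COLD = {"storage_per_tb_month": 1.0, "retrieval_per_tb": 20.0}
NEAR = {"storage_per_tb_month": 4.0, "retrieval_per_tb": 0.0}

# 100 TB held for 12 months, retrieving 1 TB per month: cold is cheaper.
print(total_cost(100, 12, tb_retrieved_per_month=1, **COLD))   # 1440.0
print(total_cost(100, 12, tb_retrieved_per_month=1, **NEAR))   # 4800.0

# Same archive, retrieving 30 TB per month: near-term is cheaper.
print(total_cost(100, 12, tb_retrieved_per_month=30, **COLD))  # 8400.0
print(total_cost(100, 12, tb_retrieved_per_month=30, **NEAR))  # 4800.0
```

Under these placeholder rates the crossover comes when monthly retrieval reaches about 15 percent of the stored volume, which illustrates why workflows with continual data movement fit poorly in a deep archive.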

Karl Paulsen is CTO at Diversified and a SMPTE Fellow. He is a frequent contributor to TV Technology, focusing on emerging technologies and workflows for the industry. Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.