Use the Cloud or Build a Datacenter?

What is realistic to take to the cloud and what isn't?

The phrase “the future is now” is never more apt than when you consider the merging of the cloud with IP and virtualization. This Cloudspotter’s Journal looks at changes that are within reach or already on the horizon. The merging of IP (in its various flavors) with virtualization and virtual machines is creating a new environment suited to cloud production, continuity playout or consolidation of operational resources.

With the continuing maturity of the latest IP standards for high-bit-rate professional media on a managed network comes the question: “Could this be applicable to ‘the cloud’?” If so, just how would you squeeze 1.5 to 12 gigabits per second onto a public highway in an efficient and productive way? Today, the idea that you can is likely an unsubstantiated perception; yet the assumption persists that, since everything else is headed in that direction, why not IP?

Such an assumption might be valid if you had an unlimited budget and you were physically parked next to Azure in Redmond with direct-to-cloud fiber connectivity that didn’t need to go any further. Even with that “pipe dream” the security issues and egress management alone would likely put a halt to that nonsense in a heartbeat. Pipe dreams aside, alternatives for high bit rate data movement and manipulation still need to be realized.

CLOUD ORIGINS

What if the cloud went back to what it originally started as? The actual cloud symbol—that puffy squashed circle-like icon—was just a representation of “the network” on a diagram (Fig. 1). The network could, at the time, be described as anything from a short-connected Ethernet segment with files moving on it to a full datacenter or anything in between. It might have been a campus-like topology infrastructure where resources were easily shared; that is, doled out, when or as needed and then reconfigured to serve other purposes once that “compute cycle” effort was completed.

Then consider how a “shared resource” model, as in a datacenter, would be analogous to the cloud. A shared resource model, when appropriately configured, is a cloud, with on-premises versions functioning like a datacenter. Pools of resources are allocated as needed, with a central management and monitoring platform overseeing operational functionality (sometimes called “orchestration”). The challenge is how to abstract the services from the resource pool efficiently and effectively.

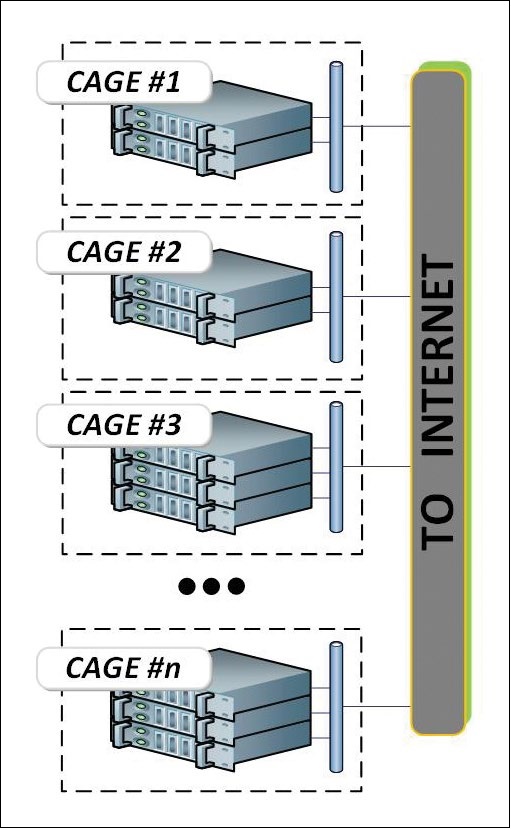

The “co-lo” (co-located) facility is similar, but it is generally not located on-prem. In this model, a datacenter-like structure houses several screened-off areas (i.e., private “cages”) where customers place their own equipment and connect it to the internet or a similar network topology (Fig. 2). Sometimes the co-lo equipment cages are managed independently and sometimes not. The co-lo is usually connected at a main internet point, whose point-of-presence (POP) is usually very close to, or actually co-located in, the facility.

Nonetheless, the co-lo concept appears a bit like a cloud, without the customary sharing of resources found in a dedicated pooled resource environment.

Co-lo datacenters usually service many customers. However, they only become a true “cloud” if the individual sets of components (from the individual customers in their own cages) can leverage one another, pooling their collective resources into a managed, coherent system of compute resources. This doesn’t happen very often, unless it is a campus research center where the cages are interconnected to/from a central processing core that can allocate and then reallocate their functionality to serve varying purposes.

Co-lo models have been applied to broadcast operations, such as in Jacksonville, Fla., where a datacenter approach first served Florida Public Broadcasters and then later added customers from outside the Florida region. This was known in its early days as “centralcasting” and was adapted to yield the “hub-and-spoke” concept for consolidation of television station playout and content ingest. The model was extended to differing formats, but it is essentially a cloud-like central facility, which sometimes shares its resources in a virtual environment and sometimes houses discrete components assigned to specific distant station markets but managed (i.e., controlled) from a single location.

SHARING THE RESOURCES

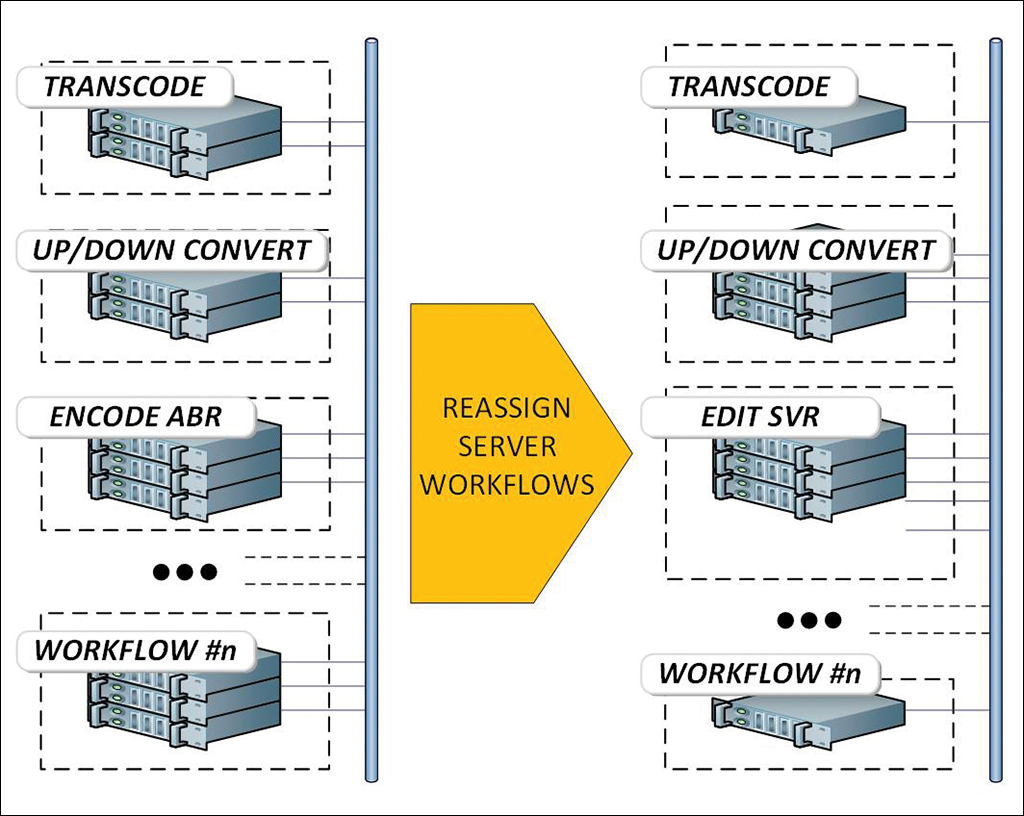

Shared-resource private-cloud functionality, as found in current and emerging environments, seems better served when the physical architecture of the supporting components (switches, servers and compute topologies) is virtualized to support multiple functions, depending upon the day-part needs of that playout region, city or operations center. Such capabilities, which broadcast equipment manufacturers once built into application-specific, dedicated products (e.g., an up/down/cross converter, graphics generator or render engine), are moving away from purpose-built hardware to software-based products running on universal “pizza-box” servers.

As FPGAs and other compute devices (such as GPUs and multi-core CPUs) evolve technically, manufacturers are likely to move further away from single-purpose black boxes to a shared-services model on commercial off-the-shelf (COTS) equipment. Manufacturers already recognize that they can port functionality onto a series of blade servers or similar platforms. This model is becoming more viable for end-user applications (Fig. 3), where a server “pool” is repurposed depending upon facility needs.

For traditional facilities, where hardware averages only about 25 to 35 percent utilization, the concept is to move from a “dedicated” (per-device) model to a “shared” (i.e., pooled) environment. The model is then considered virtualized, with the devices (the bare-metal COTS servers) becoming multifunctional through a software-defined architecture. In the “cloud-like” model, a pool of shared compute engines can, for example, be spun up to transcode for a portion of the day, then spun down, reconfigured in software and spun back up to perform another function, perhaps as a format converter or MAM processing engine, at another time. As more content is generated in a single cloud, the model expands to provide services to multiple channels (distribution points), and the cost per channel goes down considerably.
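To make the dedicated-versus-pooled comparison concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than data from a real facility: the three day-parts, the function names, the server counts and the simplification that all day-parts are the same length. It sizes a dedicated plant (each function owns enough servers for its own peak) against a shared pool (sized for the busiest day-part overall) and compares average utilization.

```python
# Hypothetical demand: servers needed per function in each day-part.
# All names and numbers are made up for illustration.
DEMAND = {
    "overnight": {"transcode": 6, "format_convert": 0, "mam": 1},
    "daytime":   {"transcode": 1, "format_convert": 5, "mam": 1},
    "primetime": {"transcode": 0, "format_convert": 1, "mam": 5},
}


def dedicated_servers(demand):
    """Dedicated model: each function owns enough servers for its own peak."""
    functions = {name for slot in demand.values() for name in slot}
    return sum(
        max(slot.get(name, 0) for slot in demand.values())
        for name in functions
    )


def pooled_servers(demand):
    """Pooled model: one shared pool sized for the busiest day-part."""
    return max(sum(slot.values()) for slot in demand.values())


def utilization(demand, servers):
    """Average fraction of servers in use, assuming equal-length day-parts."""
    used = sum(sum(slot.values()) for slot in demand.values())
    return used / (servers * len(demand))


dedicated = dedicated_servers(DEMAND)
pooled = pooled_servers(DEMAND)
print(f"dedicated: {dedicated} servers, "
      f"{utilization(DEMAND, dedicated):.0%} utilized")
print(f"pooled:    {pooled} servers, "
      f"{utilization(DEMAND, pooled):.0%} utilized")
```

With these invented numbers, the dedicated plant needs 16 servers while the pool needs 7 for the same work. The gap comes entirely from the functions peaking at different times of day; if every function peaked in the same day-part, pooling would save nothing.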

FULL FUNCTION FLEXIBILITY

Media organizations are now heading toward cloud flexibility across mixed deployments: on-prem, hybrid, and private or public cloud. While in the past functions were optimized for compressed workflows (e.g., DNxHD, XDCAM, AVI formats), high-speed NICs and faster processors have enabled workflows in full-resolution, uncompressed formats. Some systems now coming online use the recent SMPTE ST 2110 IP studio production standards for formats from HD (1.5 Gbps) to UHD (4K) at upwards of 12 Gbps.
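The 1.5 Gbps and 12 Gbps figures can be sanity-checked with simple arithmetic. The Python sketch below is a rough estimate, not a statement of what any particular ST 2110 system puts on the wire. It assumes 10-bit 4:2:2 sampling (20 bits per pixel on average), nominal frame rates of 30 and 60, and the total raster sizes used on SDI links; the `streams_per_link` helper and its 80 percent headroom figure are hypothetical, and packet overhead is ignored.

```python
def uncompressed_gbps(width, height, fps, bits_per_pixel=20):
    """Raw video bit rate in Gbps for a single stream.

    bits_per_pixel=20 assumes 10-bit 4:2:2 sampling (an assumption
    made for this sketch, not a figure from the article).
    """
    return width * height * fps * bits_per_pixel / 1e9


# Full raster including blanking, as carried on an SDI link.
hd_sdi = uncompressed_gbps(2200, 1125, 30)     # 1080-line HD
uhd_sdi = uncompressed_gbps(4400, 2250, 60)    # 2160p60 UHD

# Active picture only, before any packet overhead.
hd_active = uncompressed_gbps(1920, 1080, 30)
uhd_active = uncompressed_gbps(3840, 2160, 60)


def streams_per_link(link_gbps, stream_gbps, headroom=0.8):
    """Whole streams that fit if only `headroom` of the link is used.

    The 0.8 default is an arbitrary planning margin for illustration.
    """
    return int(link_gbps * headroom // stream_gbps)


print(f"HD  full raster: {hd_sdi:.3f} Gbps, active: {hd_active:.3f} Gbps")
print(f"UHD full raster: {uhd_sdi:.3f} Gbps, active: {uhd_active:.3f} Gbps")
print("UHD streams on a 100 Gbps link:", streams_per_link(100, uhd_sdi))
```

Under these assumptions the full-raster rates work out to 1.485 Gbps for HD and 11.88 Gbps for UHD, which is where the familiar “1.5” and “12” come from. The active-picture rates are somewhat lower. Even so, only a handful of UHD streams fit on a single 100 Gbps link, which shows why moving such signals over a public network is a stretch.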

Returning to an earlier discussion, a cloud utilization model now makes practical sense whereby owners can create an on-premises, cloud-like architecture that supports full bandwidth (HD/3G or UHD) and can serve many entities locally and afar. Here processing is done in full bandwidth and then distributed (as completed work) in a compressed format suitable for air or CDN/distribution. This spares each local facility from building up services that take up expensive real estate, yet only get used a fraction of the time.

Extending this model to full-spectrum, high-quality production that supports end-to-end services is gaining momentum on a global scale. Remote (REMI or “at home”) productions now handle field production needs that once required a fully equipped mobile (outside) broadcast unit and a crew of dozens to produce sports and entertainment. Outfitting the datacenter to share large sets of servers in a network topology can now raise the utilization factor manyfold, while reducing the overall capital expenditures (and labor costs) that were once required at every station or venue location.

Virtualized environments, whether on-prem or in the cloud, make a lot of sense as facilities consolidate locations and share their resources. Deploying in a virtualized world means climbing a few more learning curves: SMPTE ST 2110 applications as networked IP, virtual machines (VMs) and, not to be left out, security. That will be the broadcast engineer/network technician’s future, and that future is now!

Karl Paulsen is CTO at Diversified and a SMPTE Fellow. He is a frequent contributor to TV Technology, focusing on emerging technologies and workflows for the industry. Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.