Virtualizing the Growth of Metadata

Karl Paulsen

With a growing need to retain all sorts of data applicable to media and entertainment content, where and how all the variances will be kept is a continuing question that storage vendors, enterprise media asset management providers and equipment manufacturers continue to address. Could a universal approach to storing all forms of data make life easier? The simple answer is “yes,” but the devil is always in the details. Understanding the foundation of the issues and how to manage growth is this month’s topic.

Experienced users are now taking the perspective that the metadata they collect about the content they manipulate is probably more valuable than the content itself. That is, if you just store content with a file name (unless you have a really intelligent file-naming schema), then the value of your content is only as good as the name attached to it.

In many cases that file name is pretty worthless, usually a broad name that looked good at the time (such as “KFC Shoot Day One”) plus an incremental number related only to the order in which it was captured, like “1620130415.” The cryptic decoder ring might help two weeks later when somebody else needs to find the file, but not likely. If the file name was originally generated by the imaging system (the camera), the name may never be conveyed into the storage system as it gets transposed to another meaningless number set. The file name gets another sequence number when it moves to the NLE, and the list continues.

A MAM or production asset management (PAM) can provide significantly more value to the data-identification dilemma. A PAM will generate its own number in its own database, applying it to the ingest set of content as each asset is “registered” into its system. The information is stored, as metadata, under a usually proprietary scheme and harvested during the editing or reviewing process.

Seldom can that metadata be exposed to other applications without a dedicated API that must be developed and interfaced specifically between the originating PAM and the next system needing use of that metadata. This API becomes an important, if not essential, element for migrating one metadata set to the next.

A DOMINO EFFECT

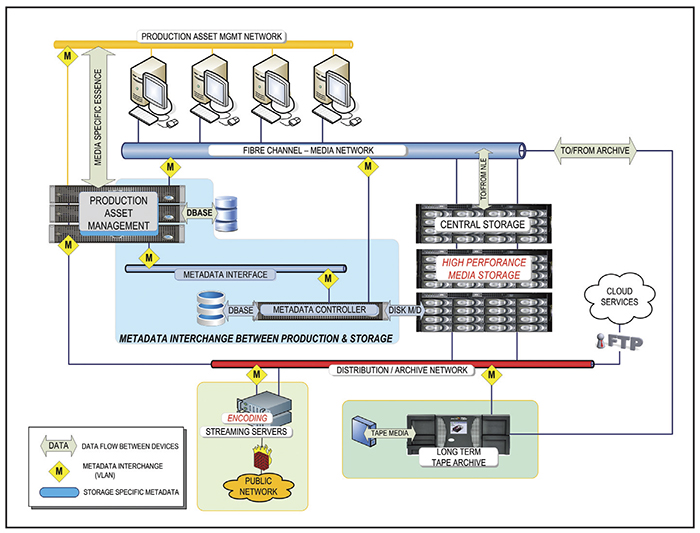

Fig. 1: Metadata interchange combines both production-specific metadata and system-level (storage) metadata. The fundamental ability to exchange and interpret each respective metadata set among the various elements throughout a system is essential to file-based workflows.

Fields from the originating PAM database must be mapped to fields in the new production database. Keeping those databases synchronized then becomes another choke point unless both systems are actively watching each other to see which system made a change that could, in turn, impact the next system downstream.
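The field mapping described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor's API: the field names (`clip_title`, `asset_name` and so on) and the `map_record` helper are hypothetical, since real PAM schemas are usually proprietary.

```python
# Hypothetical field names, chosen only for illustration.
FIELD_MAP = {
    "clip_title": "asset_name",
    "shoot_date": "capture_date",
    "cam_id": "source_device",
}


def map_record(source_record, field_map=FIELD_MAP):
    """Translate one source record into the target schema.

    Returns (mapped, unmapped): the translated record plus the sorted list
    of source fields that have no counterpart in the target schema.
    """
    mapped = {}
    unmapped = []
    for field, value in source_record.items():
        target = field_map.get(field)
        if target is None:
            unmapped.append(field)  # no home in the target database
        else:
            mapped[target] = value
    return mapped, sorted(unmapped)


record = {"clip_title": "KFC Shoot Day One", "cam_id": "A", "reel_notes": "wide shots"}
mapped, unmapped = map_record(record)
```

Reporting unmapped fields, rather than silently dropping them, is one way to surface a misalignment at the handoff point before it propagates downstream.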

A domino effect happens when any one of the metadata systems doesn’t align precisely with the next handoff point, creating a problem that grows exponentially.

That is just the tip of the iceberg from the metadata/database perspective. The next breakpoint becomes understanding or tracking “where is all that metadata going to be stored?” Surely we understand that content file sizes keep getting larger (a 1080i59.94, 50 Mbps XDCAM HD file is 4.4 GB), but those files generally number in the tens to hundreds (maybe a thousand or two), at least for the average single-user group.
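As a rough check on that file-size figure, the running time implied by a file size and bitrate can be computed directly. The sketch below assumes decimal units (1 GB = 10^9 bytes) and counts only the 50 Mbps video essence, ignoring audio and wrapper overhead. The clip's duration is not stated here, so the result is only an estimate.

```python
def implied_duration_seconds(file_size_gb, bitrate_mbps):
    """Seconds of material that fit in file_size_gb at bitrate_mbps.

    Assumes decimal units: 1 GB = 1e9 bytes, 1 Mbps = 1e6 bits per second.
    """
    total_bits = file_size_gb * 1e9 * 8
    return total_bits / (bitrate_mbps * 1e6)


seconds = implied_duration_seconds(4.4, 50)
minutes = seconds / 60
```

Under those assumptions, 4.4 GB at 50 Mbps works out to roughly 704 seconds, a little under 12 minutes of material.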

Storing those large, contiguous files is managed fairly well by today’s media-aware central storage systems. However, once the tagging (manually adding more information) and editing begins, the metadata files for this level of content will outnumber the content files by one or two orders of magnitude. Each of those files is relatively small, from a couple of kilobytes to maybe a megabyte at most.

Manipulating (i.e., associating, retrieving, updating or re-assigning) these huge volumes of tiny files against the represented content becomes a monumental chore that imposes all sorts of risks should something get out of sync or fields get mismatched.
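One of the risks above, metadata drifting out of sync with the content it represents, can be illustrated with a simple reconciliation pass. This is a sketch under assumptions: the asset IDs and the two in-memory collections are hypothetical stand-ins for a content store and a metadata database.

```python
def reconcile(content_ids, metadata_records):
    """Compare content IDs against metadata records keyed by asset ID.

    Returns a dict with:
      'missing_metadata': content that has no metadata record
      'orphaned_metadata': metadata pointing at content that is not present
    """
    content = set(content_ids)
    described = set(metadata_records)
    return {
        "missing_metadata": sorted(content - described),
        "orphaned_metadata": sorted(described - content),
    }


# Hypothetical sample data.
content_ids = ["A001", "A002", "A003"]
metadata_records = {
    "A001": {"title": "Day one, wide"},
    "A003": {"title": "Day one, close"},
    "A009": {"title": "Deleted clip"},
}
report = reconcile(content_ids, metadata_records)
```

Here `report` flags `A002` as content without metadata and `A009` as metadata without content. A production system would run this kind of check against its databases rather than in-memory sets.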

As a result, the metadata databases for all active content must be managed with a separate set of controllers (with independent applications) and kept on a separate storage system designed for high transaction rates, not unlike those employed by supermarkets that are linked directly into a corporate inventory management, pricing and order replenishment system. If you think about it, the supermarket model is actually much simpler than the media content management system; the former tracks thousands of items with fixed, universally available information, while the latter tracks information that is extremely dynamic and is continually modified throughout the entire workflow.

THE ‘SECRET SAUCE’

That’s not the end of the metadata tracking scenario. We’ve just described the associative “content-related” metadata used in the production portions of the creative processes. In the background, literally working overtime, are the system metadata controllers: those that manage where all the files on the disk storage systems actually reside and how those chunks of data are mapped against file allocation tables, external metadata databases, replication and resiliency models (to protect the data) and a lot more.

These are usually proprietary subsystems that become the “secret sauce” of the overall big data management system for a specific storage vendor’s solution. This flavor of metadata controller is usually what a storage vendor will think most about. Physically, most storage vendors will build their metadata controllers as outboard server units that are linked to the data storage crates through a high-speed conduit that collects and distributes all threads of information between the drive chassis and the controllers.

With this broad perspective on the levels of storage types and what must be routinely and continually accomplished, we return to the opening inquiry: “Could a universal approach to storing all this data (on one virtual storage platform) make life easier?” The answer still appears to be a qualified “yes.”

As storage vendors recognize the need to scale up by dynamically adding resources (drive capacity, control points, metadata managers), the key becomes finding a storage control platform that can address performance, capacity and multiple storage vendor solutions, all on a single harmonious command-and-control structure.

The future of storage management depends on the nondisruptive migration to new technologies as improvements in all dimensions are introduced. Having to scrap an entire storage system because the controller can no longer address an increase in storage capacity is ludicrous. Placing limits on the storage system (the complete system including metadata controllers) forces users to manage their data as though it were on discrete LaCie 2TB portable hard drives.

While tiered storage solutions may help manage some of the immediate issues, in the long run users should pick a storage platform that allows them to virtualize for the future as opposed to addressing only their near-term needs.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his current book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.