When IP is not "that IP"

There is a world of difference between IP in managed and unmanaged networks, and it’s not just the IP stack that’s different. The business of on-demand TV and video is probably more accurately described as monetizing content. There are really only three basic methods of monetizing content: subscriptions, pay-per-view and advertising. While these three methods may appear similar, there are additional elements and challenges that engineers may overlook when it comes to actual video delivery.

Requirements for successful video delivery

The first key delivery requirement that engineers must address is the need to maintain a certain quality of experience (QoE). Both subscribers and content producers expect a high degree of QoE, in part because today’s display systems highlight every image impairment.

A second requirement is that usage data must be relayed back to an analytics system. Content owners want to know much more than just how many viewers were served. They want to know about the quality of those streams.

Let’s look more closely at the delivery architecture by breaking it down into three main layers: the monetization layer (analytics, transaction and billing systems, campaign and offer management), the management layer (managing and maintaining the flow of content through the system — packaging, workflow and content protection) and the delivery layer (actual transmission of content to the consumer).

Within cable and IPTV platforms, the production and collection of delivery data within the system are straightforward because all of the system components are directly under the control of the operator. However, with the transition from a managed IP environment to the public Internet (as in the case of OTT or multiscreen environments), there are some specific new challenges that must be addressed.

The "sessionless" issue

These new environments often rely on variable bit-rate delivery, one controlled by the client. Called HTTP-based adaptive bit rate (ABR) streaming, it is a process that adjusts the quality of delivered content based on changing network conditions with the goal of ensuring the best possible viewer experience.

HTTP-based adaptive streaming is a hybrid delivery method that resembles IP streaming but is based on an HTTP progressive download. The technology was first widely used in NBC’s production of the 2008 Summer Olympics in Beijing. Examples of ABR streaming include IIS Smooth Streaming (used with Microsoft Silverlight), Apple HTTP Live Streaming and Adobe HTTP Dynamic Streaming. Even though these technologies use different formats and encryption schemes, they all rely on HTTP. The media is downloaded as a long series of small progressive downloads, rather than one big progressive download.

In a typical adaptive streaming implementation, the content is chopped into many short segments, called chunks, and then encoded to the desired delivery format. Chunks are typically 2 seconds to 10 seconds long. The video codec creates each chunk by cutting at a GOP boundary, starting with a key frame. This allows each chunk to later be decoded independently of other chunks. In effect, this means that there is no direct “session,” unlike with RTSP, which is traditionally used in managed network environments such as IPTV or cable.
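The idea of cutting only at key frames can be illustrated with a toy sketch. The frame string, the function name `split_at_keyframes` and the `min_frames_per_chunk` parameter are all assumptions made for illustration; a real encoder or packager works on actual video frames and timestamps, not characters.

```python
def split_at_keyframes(frame_types: str, min_frames_per_chunk: int) -> list[str]:
    """Cut a frame sequence into chunks that each begin at a key frame ('I').

    Assumes the sequence itself starts with a key frame.
    """
    chunks: list[str] = []
    current = ""
    for frame in frame_types:
        # Only cut at a key frame, and only once the current chunk is long enough.
        if frame == "I" and len(current) >= min_frames_per_chunk:
            chunks.append(current)
            current = ""
        current += frame
    if current:
        chunks.append(current)
    return chunks


# Toy sequence (hypothetical): a key frame every four frames.
frames = "IPPPIPPPIPPPIPPP"
chunks = split_at_keyframes(frames, min_frames_per_chunk=8)
print(chunks)
```

Because every chunk in this sketch begins with a key frame, a decoder could start on any chunk without needing frames from the one before it.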

The encoded chunks are hosted on an HTTP Web server. A client requests the chunks from the Web server in a linear fashion and downloads them using a plain HTTP progressive download. As the chunks are downloaded to the client, the client plays back the sequence of chunks in linear order. Because the chunks are carefully encoded without any gaps or overlaps between them, the chunks play back as a seamless video.

The “adaptive” part of the system comes into play when the video/audio source is encoded at multiple bit rates, generating multiple chunks each at a different bit rate. (See Figure 1.)

Figure 1. Adaptive streaming requires the video be cut into small segments and then encoded into various bit rates prior to transmission.

In the figure, the top row represents the video, with different colors denoting various bit rates. This bit-rate schema is generally defined at the time of encoding, although there are some new technology advancements that are able to perform this on a more dynamic and distributed basis. Even so, these schemes do not alleviate the monitoring and reporting issue discussed below.

Because Web servers usually deliver data as fast as network bandwidth allows them to, the client can estimate available bandwidth and decide to download larger or smaller chunks ahead of time.
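As a rough illustration of that client-side decision, the sketch below estimates throughput from the last chunk download and picks the highest bit rate that fits. The bit-rate ladder, the 0.8 safety margin and the function names are assumptions made for this example; they are not taken from any particular player, and real clients typically smooth their estimates over several chunks.

```python
def estimate_bandwidth_bps(chunk_bytes: int, download_seconds: float) -> float:
    """Throughput observed for one chunk download, in bits per second."""
    return chunk_bytes * 8 / download_seconds


def choose_bitrate(ladder_bps: list[int], bandwidth_bps: float, safety: float = 0.8) -> int:
    """Pick the highest encoded bit rate that fits within a safety margin of the estimate."""
    budget = bandwidth_bps * safety
    candidates = [rate for rate in sorted(ladder_bps) if rate <= budget]
    # Fall back to the lowest bit rate when even that exceeds the budget.
    return candidates[-1] if candidates else min(ladder_bps)


# Hypothetical bit-rate ladder and measurement.
ladder = [400_000, 800_000, 1_500_000, 3_000_000, 6_000_000]
bandwidth = estimate_bandwidth_bps(chunk_bytes=750_000, download_seconds=1.5)
next_rate = choose_bitrate(ladder, bandwidth)
print(bandwidth, next_rate)
```

Note that the decision is made entirely by the client. The server sees only a series of independent HTTP requests.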

These techniques add complexity to the delivery process because they require the video to be cached and transmitted as close to the viewer as possible. Now the number of components in each delivered stream becomes fluid rather than fixed.

With this basic understanding, a potential problem becomes fairly clear. If there is no real session associated with the communication, and the operator has deployed distributed network caches to maximize bandwidth usage, how does the operator report the QoE of a specific session or even the quality of video that has been delivered to a specific client over a certain time period?

Possible solutions

There are at least two potential answers to this issue. The first is to implement a log-based monitoring system that pulls the log files from all possible locations, especially from the video servers, and attempts to build an effective correlation between the individual files being transmitted and the client that is viewing those files. This method is attractive because it is fairly simple, but it requires an enormous amount of server horsepower for the analysis and correlation. Even in the simplest of examples, a 90-minute soccer match cut into 2-second chunks and encoded at five different bit rates would create 13,500 files.
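The 13,500 figure is consistent with 2-second chunks, the short end of the typical chunk range. A small sketch of the arithmetic follows; the function name and parameters are hypothetical.

```python
import math


def fragment_count(duration_minutes: float, chunk_seconds: float, bit_rates: int) -> int:
    """Number of chunk files produced for one asset across all encoded bit rates."""
    chunks_per_bit_rate = math.ceil(duration_minutes * 60 / chunk_seconds)
    return chunks_per_bit_rate * bit_rates


# 90 minutes = 5,400 seconds; 5,400 / 2 = 2,700 chunks per bit rate; times 5 bit rates.
print(fragment_count(90, 2, 5))   # 13500
print(fragment_count(90, 10, 5))  # 2700
```

Even with 10-second chunks, a single match still produces thousands of files, and every one of them can appear in the logs of multiple caches.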

The second solution relies on the edge servers to play an active role in aggregating the information. The main requirement in this system is that the video servers be capable of using their video awareness and proximity to the client to create a virtual session. This enables the operator to report the actual QoE, or at least the bit rate being consumed by the client, in a way that is meaningful to the content provider.
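One way to picture a virtual session is to group fragment requests by client and asset, and to start a new session when the client has been idle for too long. The sketch below is a minimal illustration under stated assumptions: the record fields, the 30-second idle timeout and the function names are all hypothetical, not a description of any specific edge server.

```python
from dataclasses import dataclass, field


@dataclass
class FragmentRequest:
    client_id: str
    asset_id: str
    timestamp: float  # request time, in seconds
    bit_rate: int     # bits per second of the requested chunk
    duration: float   # seconds of media in the chunk


@dataclass
class VirtualSession:
    client_id: str
    asset_id: str
    start: float
    last_seen: float
    fragments: list = field(default_factory=list)


def build_sessions(requests, idle_timeout=30.0):
    """Group fragment requests into per-client, per-asset virtual sessions."""
    sessions = []
    open_sessions = {}
    for req in sorted(requests, key=lambda r: r.timestamp):
        key = (req.client_id, req.asset_id)
        session = open_sessions.get(key)
        # Start a new session on first contact or after a long idle gap.
        if session is None or req.timestamp - session.last_seen > idle_timeout:
            session = VirtualSession(req.client_id, req.asset_id, req.timestamp, req.timestamp)
            open_sessions[key] = session
            sessions.append(session)
        session.fragments.append(req)
        session.last_seen = req.timestamp
    return sessions


def average_bit_rate(session):
    """Average bit rate of a session, weighted by the media duration of each chunk."""
    total = sum(f.duration for f in session.fragments)
    return sum(f.bit_rate * f.duration for f in session.fragments) / total


requests = [
    FragmentRequest("client-a", "match-1", 0.0, 1_000_000, 2.0),
    FragmentRequest("client-b", "match-1", 1.0, 3_000_000, 2.0),
    FragmentRequest("client-a", "match-1", 2.0, 2_000_000, 2.0),
    FragmentRequest("client-a", "match-1", 4.0, 3_000_000, 2.0),
    FragmentRequest("client-a", "match-1", 100.0, 1_000_000, 2.0),  # after a long gap
]
sessions = build_sessions(requests)
print(len(sessions), average_bit_rate(sessions[0]))
```

The grouping is straightforward when one server close to the client sees all of its requests. Doing the same from log files scattered across many caches means collecting and correlating them first.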

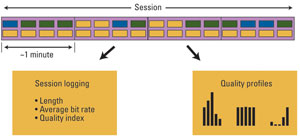

Once a session concept has been introduced, it is then possible to report usage data tied to sessions and content, instead of fragments. Parameters of interest could include session length, average bit rate and engagement. (See Figure 2.)

Figure 2. Content owners often require session reports that include specific quality profiles. This requires CDNs to not only measure certain parameters like bit rate, but tie the data to entire programs and customer QoE, not just data fragments.

Engagement is typically measured as session length versus asset length, i.e., it shows how much of the content was actually watched. This type of measurement requires the system to understand what an asset is and that it has a certain length. Just knowing which fragments have been delivered is not sufficient.
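Expressed as a calculation, engagement is a simple ratio once both lengths are known. The sketch below is an illustration only; the function name is hypothetical, and capping the result at 1.0 (for viewers who rewind or rewatch) is an assumption rather than an industry rule.

```python
def engagement(session_seconds: float, asset_seconds: float) -> float:
    """Fraction of an asset that was watched: session length versus asset length."""
    if asset_seconds <= 0:
        raise ValueError("asset length must be known and positive")
    # Assumption: cap at 1.0 so rewinds and rewatches do not exceed 100 percent.
    return min(session_seconds / asset_seconds, 1.0)


# A viewer who watched 45 minutes of a 90-minute match.
print(engagement(45 * 60, 90 * 60))  # 0.5
```

The calculation fails without the asset length, which is the point made above: a list of delivered fragments alone does not say how long the program was.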

As viewership on tablets, OTT and even mobile devices increases, ABR delivery will become even more important. Engineers will need to better understand how to deploy distributed systems that embrace cost-effective video delivery while preserving the all-important QoE and data reporting. Whether being implemented as an operator CDN or an extension of the existing IPTV infrastructure, monetization, QoE and data reporting are likely to remain key areas of focus over the next few years.

—Duncan Potter is chief marketing officer and vice president of operations, Edgeware.
